🎓 122/2

This post is a part of the Time series & applications educational series from my free course. Please keep in mind that the correct sequence of posts is outlined on the course page, while it can be arbitrary in Research.

I'm also happy to announce that I've started working on standalone paid courses, so you could support my work and get cheap educational material. These courses will be of completely different quality, with more theoretical depth and niche focus, and will feature challenging projects, quizzes, exercises, video lectures and supplementary stuff. Stay tuned!

Time series analysis is one of the cornerstones of data science and machine learning, particularly useful in fields such as finance (stock price forecasting), supply chain management (inventory prediction), economics (macroeconomic indicators), climate science, and various engineering domains. A time series can be defined as a sequence of data points measured at consistent time intervals. The objective of time series analysis is often to understand patterns such as trends or seasonality and, ultimately, to generate forecasts into the future.

In modern data science, time series problems have grown in significance due to the abundance of chronological data from sensors, transactions, logs, and so on. The discipline involves a broad range of methods — from classical statistical techniques such as ARIMA, SARIMA, or Exponential Smoothing to advanced approaches, including Recurrent Neural Networks (RNN), LSTM, Transformers, etc.. Recent cutting-edge research has also explored combining time series with MLOps, deep learning, or advanced probabilistic modeling (Gaussian processes, deep state-space models, advanced generative models, etc.).

This article will guide you through core concepts, advanced techniques, pitfalls, and best practices in time series forecasting. We assume you have a substantial background in ML, so we will dive deep into theoretical underpinnings, references to relevant research, and code snippets illustrating the concepts in Python. Where possible, we use LaTeX notation for clarity of formulas and math.

2. Key concepts and components

When working with time series, it is helpful to decompose or conceptualize the data in terms of underlying structures. The main elements typically considered are:

- Stationarity: A process is (weakly or covariance) stationary if its mean and autocovariance remain the same over time. Nonstationarity is common in real-life data due to trends, seasonal fluctuations, and other effects.

- Trend: A relatively long-term increase or decrease in the data. A trend may be linear or nonlinear.

- Seasonality: Regular and periodic fluctuations that occur at a known frequency (e.g., monthly, weekly, daily). Seasonality is especially prominent in data related to climate, retail sales cycles, or daily website traffic.

- Cyclical patterns: Recurrent behavior, but not strictly tied to a fixed calendar-based frequency (unlike true seasonality). An economic cycle (e.g., expansions and recessions) might be cyclical.

- Noise: The random component, or remainder term, capturing fluctuations not explained by the main structure (trend, seasonality, cycles) or by known exogenous variables.

2.1. Formalizing a time series

Mathematically, we can treat a univariate time series as an ordered set of data , where is the observation at time . Often, we incorporate exogenous variables, forming a multivariate time series. Let be the vector of exogenous covariates at time . Then a typical time series model might be:

Here, is some function (linear or nonlinear) with parameters , and is a noise or error term (often assumed to be white noise or having certain autocorrelation properties).

2.2. Notions of stationarity

A process is strictly stationary if the joint distribution of does not depend on time . In practice, we often adopt weak stationarity (covariance stationarity) which requires:

- (constant mean),

- (constant variance),

- depends only on , not on .

Classical time series approaches (like ARIMA) often rely on stationarity assumptions. Common transformations to achieve stationarity include differencing or applying a Box-Cox transformation (e.g., log transform).

2.3. Role of trend, seasonality, and cycles

A common decomposition of time series is:

Additive decomposition

where denotes trend, denotes seasonality, and is the remainder component.

Multiplicative decomposition

used when the amplitude of seasonal fluctuations grows with the level of the series. One might also do partial transformations (e.g., log) if the multiplicative form is more appropriate.

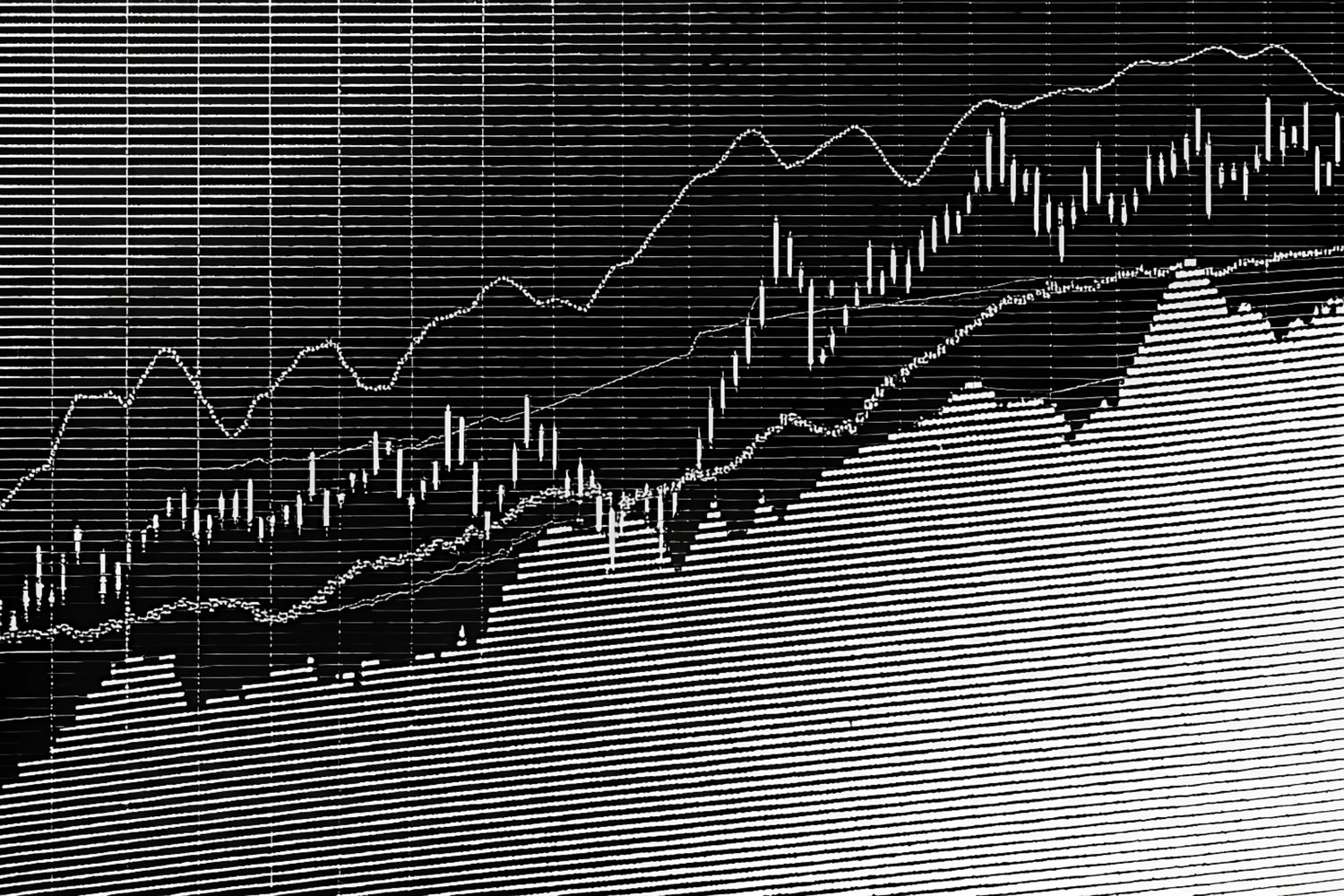

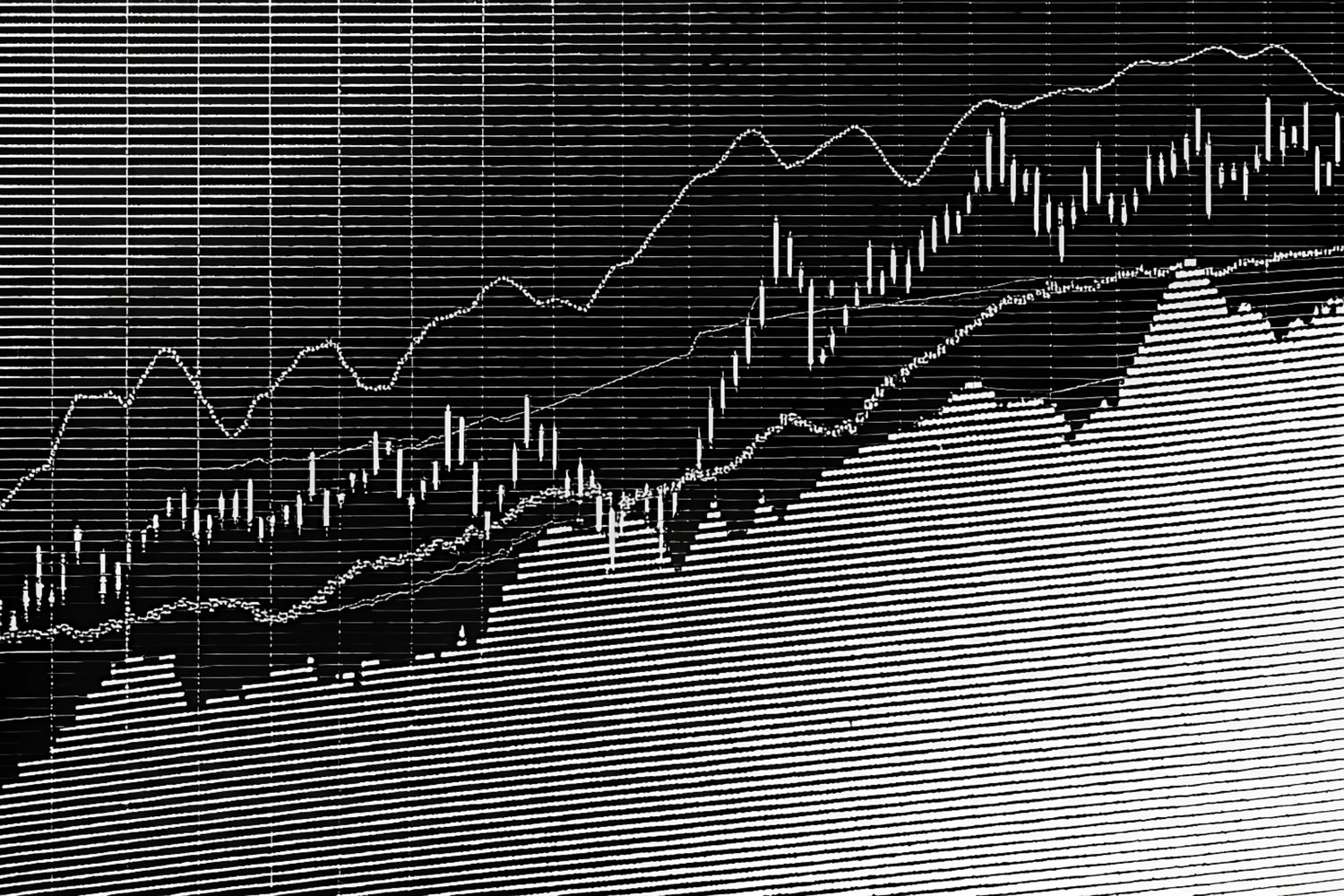

2.4. Example: Stock price time series

Consider daily stock price data for a particular company. We might observe cyclical patterns associated with economic expansions, short-term trends triggered by earnings announcements, weekly seasonality due to Monday vs. Friday trading volumes, or daily changes from news events (noise). Stationarity rarely holds in raw price data. Often, we take daily returns:

(or ) to attempt stationarity.

3. Exploratory data analysis

Exploratory analysis of a time series is crucial. We want to examine patterns, outliers, missing data, and other features that might influence model selection or preprocessing steps.

3.1. Visualizing time series

A well-chosen set of plots can reveal important structure. Some popular approaches:

- Line plot over time (the standard approach).

- Seasonal plot: For instance, group data by month to see seasonal fluctuations or produce a "seasonal subseries plot."

- Autocorrelation plot (ACF): Correlation of a series with its own past values. Helps detect seasonality or correlation structure.

- Partial autocorrelation plot (PACF): Helps identify autoregressive terms.

- Lag scatter plots: Plot vs. , vs. , etc.

- Box plot by season: e.g., separate box plots for each month to show distribution and outliers.

An image was requested, but the frog was found.

Alt: "Example of a time series line plot"

Caption: "Line plot of a monthly data series highlighting seasonal peaks"

Error type: missing path

Below is an example code snippet in Python (using pandas and matplotlib) to visualize a univariate time series and its autocorrelation:

<Code text={`

import pandas as pd

import matplotlib.pyplot as plt

from pandas.plotting import lag_plot

from statsmodels.graphics.tsaplots import plot_acf

# Suppose we have a CSV file with a Date column and a Value column

df = pd.read_csv('time_series_data.csv', parse_dates=['Date'], index_col='Date')

series = df['Value']

# 1) Basic line plot

plt.figure(figsize=(10,4))

plt.plot(series, label='Time series')

plt.title('Line plot of time series')

plt.legend()

plt.show()

# 2) Lag scatter plot

plt.figure()

lag_plot(series)

plt.title('Lag plot at lag=1')

plt.show()

# 3) Autocorrelation plot

plt.figure()

plot_acf(series, lags=30)

plt.show()

`} />3.2. Statistical metrics (mean, variance, autocorrelation, partial autocorrelation)

Basic descriptive statistics such as mean, median, variance, minimum, and maximum across the time dimension can yield valuable insight, especially to check if the data is stationary or if it has noticeable trends. The autocorrelation function (ACF) is a key concept. For a lag , the sample autocorrelation is approximately:

" />

The partial autocorrelation function (PACF) measures correlation between and once we remove the influence of other lags in-between.

3.3. Handling missing data and outliers

Time series often have missing timestamps. Strategies include:

- Imputation with forward fill or backward fill.

- Interpolation (linear, spline) if that suits the domain.

- More sophisticated approaches using domain knowledge.

Outliers may result from sensor failures, data entry errors, or actual anomalies. Some practitioners prefer robust models or robust decomposition (like STL with a robust setting) to mitigate outlier influence. Alternatively, certain advanced methods (e.g., LOF-based anomaly detection, or isolation forests) can be used to systematically identify and handle outliers.

4. Classical forecasting methods

Classical forecasting methods remain widely used, especially where interpretability and transparency are paramount. They often serve as strong baselines that are easily explainable to stakeholders.

Common "classical" approaches:

- Moving average and exponential smoothing: Techniques that produce smoothed versions of past data and extrapolate.

- Simple regression-based methods: Linear or polynomial regression on time index or other factors.

- Decomposition-based forecasting: Separate out trend and seasonality from data, then forecast the remainder.

4.1. Moving average (MA) and exponential smoothing (ES)

4.1.1. Moving average

A simple moving average of order for time step is:

The forecast for time is simply . This is a naive smoothing approach, ignoring seasonality or other complexities.

4.1.2. Exponential smoothing

Exponential smoothing assigns exponentially decreasing weights over time. The simplest form, simple exponential smoothing, is:

where . More advanced versions (Holt's linear trend method, Holt-Winters method) incorporate trend and seasonality. For example, the Holt-Winters additive model can be written as:

Here, is the level at time , is the slope or trend, is the seasonal component, and is the seasonal period. The parameters are smoothing coefficients.

4.2. Simple regression-based approaches

One might regress on:

- The time index (for a global trend).

- Categorical dummy variables for each season (e.g., months).

- Additional exogenous variables (like ).

For example, a simple additive model:

where are seasonal dummy variables (1 for season j at time t, 0 otherwise). This might be extended with polynomial terms or splines for the trend.

4.3. Decomposition-based forecasting

If we have an additive decomposition , a naive approach is:

- Estimate and from historical data (e.g., by a decomposition such as STL).

- Forecast the trend part with a method for non-seasonal data (like an AR(1) or simple exponential smoothing).

- Forecast the seasonal part by reusing the last observed season or by some smoothing across years.

- Combine them to get the final forecast: .

This approach can perform well in many practical settings but might underfit abrupt changes or more complex relationships.

5. ARIMA models

5.1. Autoregressive (AR) process

An AR(p) model uses lags of the time series as predictors:

Here, are parameters, is a constant, and .

5.2. Moving average (MA) process

An MA(q) model uses lags of the noise or error:

In essence, the new observation is a linear combination of white noise terms.

5.3. Combined ARMA and ARIMA

By combining AR(p) and MA(q), we get ARMA(p, q):

However, real data often exhibit nonstationary behaviors (trend or random walk). By differencing the series times, we can (hopefully) achieve stationarity. This leads to ARIMA(p, d, q), meaning an ARMA model on the differenced data:

- Let denote differencing times (with ).

- Then an ARIMA(p, d, q) model satisfies: ."/>

5.4. Parameter selection and model diagnostics

Selecting can be done via:

- Looking at ACF/PACF plots.

- Minimizing information criteria (AIC, BIC).

- Using auto.arima-type procedures in software packages (e.g.,

pmdarimain Python orforecastin R).

After fitting, one checks diagnostics:

- Residuals should be white noise (check ACF of residuals).

- Model should not be overfit or underfit (use AIC/BIC or cross-validation for out-of-sample performance).

6. SARIMA and seasonal extensions

Seasonality means a repeated pattern every observations. For monthly data, ; for quarterly data, ; for daily data with weekly patterns, .

6.1. SARIMA structure

Seasonal ARIMA is typically written as: . For seasonal data, we do both regular differencing and seasonal differencing . The model integrates the idea of ARIMA at two levels: the standard "non-seasonal" part plus a "seasonal" part.

The general form is:

where is the lag operator (), is the non-seasonal AR polynomial, is the seasonal AR polynomial, and are the non-seasonal and seasonal MA polynomials, respectively.

6.2. Identifying seasonality parameters

Similar to identifying , we use:

- Seasonal differencing if there is an apparent seasonal pattern that is not stable in level.

- Seasonal ACF/PACF for initial guesses of and .

- Automated selection by AIC/BIC or heuristics in packages.

6.3. Model selection criteria (AIC, BIC)

AIC: Akaike Information Criterion,

where is the number of estimated parameters and is the maximum value of the likelihood function.

BIC: Bayesian Information Criterion,

A lower AIC/BIC indicates a better trade-off between model fit and complexity.

6.4. Residual analysis

Once the model is fit, we check the residuals:

- Ideally, residuals are uncorrelated and exhibit constant variance.

- Plot ACF of residuals. No significant spikes at any lag implies good fit.

- Perform Ljung-Box test to see if significant autocorrelations remain.

7. Advanced time series approaches

While ARIMA and SARIMA remain mainstays, more advanced or specialized approaches can outperform them in certain contexts.

7.1. Vector autoregression (VAR)

When dealing with multivariate time series with potential interdependencies, Vector Autoregression extends AR by modeling multiple time series simultaneously:

Here, is a vector of multiple variables, and are coefficient matrices.

7.2. State space models and Kalman filters

State space formulations represent time series via latent (unobserved) states that evolve over time, plus an observation model:

where is the hidden state. The Kalman filter is a recursive procedure for linear Gaussian state space models, extended to nonlinear cases as the Extended or Unscented Kalman Filter. State space models can incorporate time-varying parameters, handle missing data elegantly, and capture complex behaviors.

7.3. Long Short-Term Memory networks (LSTM): motivation

In deep learning, LSTM networks (Hochreiter & Schmidhuber, 1997) overcame the vanishing gradient problem of standard RNNs, making it possible to capture long-range dependencies in sequences. Although we have a dedicated article on LSTMs, the fundamental motivation is that many time series tasks require memory of events far in the past (like multi-step seasonal or cyclical phenomena). LSTMs handle that by gating mechanisms.

In practice, LSTMs (and GRUs) are widely used for forecasting tasks, especially for complex real-world data with multiple related series. Extensions, such as Seq2Seq or Encoder-Decoder architectures, further empower multi-step or variable-length forecasting.

7.4. Prophet and other modern libraries

Prophet, developed by Meta (Facebook) researchers, is a library focusing on a parametric approach:

where is a piecewise linear or logistic growth curve, is a sum of Fourier series modeling seasonality, and is special holiday effects. Prophet automates hyperparameter selection, handles missing data, and can be quite robust to outliers or sudden changes.

Meanwhile, modern open-source frameworks like GluonTS (by Amazon) or Orbit (by Uber) also incorporate advanced Bayesian or deep learning methods.

8. Time series clustering

Time series clustering is an unsupervised task to group similar time series. The challenge is the definition of "similarity." Approaches include:

- Using Dynamic Time Warping (DTW) as a distance metric, allowing for warping along the time dimension. Then apply standard clustering (k-means with a custom distance, hierarchical clustering, DBSCAN, etc.).

- Feature-based methods: engineer features (mean, standard deviation, number of peaks, etc.) to embed each series in a fixed dimension, then apply standard clustering. Or use shapelets or wavelet-based features.

For instance, Parkinson's disease data or speech data clustering are popular use-cases for DTW-based clustering.. Also, advanced methods use neural embeddings (like an autoencoder) to represent each series in a latent space, then cluster in that space.

8.1. Similarity measures (DTW, Euclidean distance)

- Euclidean: Quick, but sensitive to phase shifts or varying lengths.

- DTW: Allows time distortions. Very popular, albeit more computationally expensive.

- Correlation-based: If the shape is relevant more than amplitude, correlation-based distance can be used.

8.2. Clustering algorithms (k-means, hierarchical, spectral) applied to time series

- k-means: Minimizes within-cluster variance, but must handle custom distance carefully (like DTW barycenter averaging (DBA)).

- Hierarchical: Builds a dendrogram, merges or splits clusters. Flexible but can be slow for large datasets.

- Spectral: Uses graph-based representation from pairwise similarity. Potentially captures complex cluster shapes.

8.3. Applications and interpretation

Time series clustering is used in marketing (grouping customer usage patterns), anomaly detection (clustering normal vs. abnormal time series), medical signals, IoT sensor groupings, etc.

9. Time series cross-validation

Time series cross-validation differs from standard cross-validation because data is time-ordered. We generally avoid random splits that mix future data into the training set.

9.1. Rolling window (sliding) validation

Split the time series into training and validation sets in a rolling fashion:

- Fit on , validate on .

- Move forward, fit on , validate on .

- Repeat until we run out of data.

This allows each test set to be strictly after the training set in time.

9.2. Expanding window validation

Similar to rolling, but each step expands the training window forward (never dropping early data). For example:

- Fit on , predict .

- Fit on , predict .

- etc.

9.3. TimeSeriesSplit in practice

In scikit-learn, TimeSeriesSplit splits the data according to time order. Alternatively, specialized frameworks like statsmodels or pmdarima have built-in time-series cross-validation tools.

9.4. Evaluating model performance over time

Often we compute metrics like RMSE or MAPE across multiple forecast horizons. We might also examine how errors change over time, or for different sub-seasons.

One must also note the difference between 1-step vs. multi-step ahead forecasting. For multi-step, repeated rolling or expanding windows are typical to measure performance thoroughly.

10. Practical considerations and best practices

10.1. Data preprocessing and feature engineering

- Normalization or scaling can help models converge, especially neural networks.

- Fourier or wavelet transforms to capture cyclical or seasonal patterns, if relevant.

- Date/time features: For instance, day of week, month of year, holiday indicators. These features can be very informative.

10.2. Dealing with non-stationarity and transformations

Many forecasting models (ARIMA, etc.) require or prefer stationarity. Techniques:

- Differencing: Subtract or integrate.

- Detrending: Fit a trend, subtract it out.

- Box-Cox or log transform: Stabilizes variance or turns multiplicative relationships into additive.

10.3. Handling large-scale datasets

For big time series or many parallel series, we might need distributed computing (Spark, Dask) or online algorithms that update incrementally. Modern cloud-based pipelines can handle partial fitting or streaming data in real time.

10.4. Model deployment and maintenance

Time series models degrade over time due to concept drift. Best practices:

- Implement an automated monitoring system to track forecast errors.

- Retrain periodically with recent data or use online learning approaches.

- Archive old models for reproducibility and potential ensemble methods.

Extra chapters and deeper expansions

Although not explicitly required, let's add more content to fill out theoretical and state-of-the-art aspects beyond the main outline. These expansions will help achieve a deeper, advanced-level discussion and ensure coherence with the rest of the course.

11. Hierarchical time series forecasting

In business contexts, we often have data aggregated at multiple levels (e.g., total sales vs. product-level sales). Hierarchical forecasting reconciles predictions across these groupings. A widely cited approach is the hierarchical reconciliation method by Hyndman and gang (2008, JASA), where bottom-up or top-down or middle-out techniques are used, or the well-known MinT approach.

12. Transfer learning for time series

A cutting-edge area: large, pre-trained neural networks for time series. Research at NeurIPS and ICML has explored how to train a big model on diverse time series from multiple domains, then fine-tune on a new dataset. This is reminiscent of large language models in NLP, though the domain constraints differ.

13. Interpretable time series modeling

Various methods strive to preserve interpretability:

- Additive models (like Prophet) are relatively interpretable because each component is isolated.

- Shapelet-based methods in classification tasks.

- Attention-based neural networks (like Transformers) can highlight parts of the input that contributed strongly to the forecast, though interpretability is partial.

14. Causality vs. correlation in time series

Forecasting does not necessarily require causal relationships. However, advanced approaches like Granger causality testing or structural time series can glean causal insights. Bayesian networks or do-calculus methods are sometimes used for causal modeling in time series, though it remains a complex topic.

15. ESG-based forecasting or sustainability contexts

Another emergent domain is environment, social, governance (ESG) analytics. For example, forecasting carbon emissions or measuring ESG metrics for companies often requires advanced time series and exogenous regressors. Some specialized transformations or domain-specific constraints might be relevant.

Illustrative Python code examples

To clarify various steps, here is an integrated example that uses statsmodels for ARIMA and pmdarima for auto-ARIMA, plus an example of an LSTM-based model using Keras, all applied to a sample dataset. These code snippets are simplified; in practice, you might structure your code differently or incorporate more robust data preparation.

15.1. ARIMA with cross-validation

<Code text={`

import numpy as np

import pandas as pd

from statsmodels.tsa.arima.model import ARIMA

from sklearn.metrics import mean_squared_error

import math

# Example: monthly data

df = pd.read_csv('monthly_data.csv', parse_dates=['Month'], index_col='Month')

series = df['value']

# Train/test split

train_size = int(len(series) * 0.8)

train, test = series.iloc[:train_size], series.iloc[train_size:]

history = train.tolist()

predictions = []

for t in range(len(test)):

model = ARIMA(history, order=(2,1,2))

model_fit = model.fit()

yhat = model_fit.forecast(steps=1)

predictions.append(yhat[0])

obs = test.iloc[t]

history.append(obs)

rmse = math.sqrt(mean_squared_error(test, predictions))

print("Test RMSE:", rmse)

`} />15.2. Automated SARIMA

<Code text={`

import pmdarima as pm

import pandas as pd

df = pd.read_csv('monthly_data.csv', parse_dates=['Month'], index_col='Month')

series = df['value']

# auto_arima can figure out p, d, q, P, D, Q, m, etc. based on AIC

model = pm.auto_arima(series, seasonal=True, m=12,

start_p=1, start_q=1, max_p=5, max_q=5,

start_P=0, start_Q=0, max_P=5, max_Q=5,

d=None, D=None,

trace=True,

error_action='ignore',

suppress_warnings=True,

stepwise=True)

print(model.summary())

# Forecast the next 12 months

forecast, conf_int = model.predict(n_periods=12, return_conf_int=True)

`} />15.3. LSTM example for univariate series

<Code text={`

import numpy as np

import pandas as pd

from keras.models import Sequential

from keras.layers import LSTM, Dense

from sklearn.preprocessing import MinMaxScaler

df = pd.read_csv('daily_data.csv', parse_dates=['Date'], index_col='Date')

values = df['value'].values.reshape(-1,1)

# Scale data

scaler = MinMaxScaler(feature_range=(0,1))

scaled = scaler.fit_transform(values)

# Transform to supervised problem

def series_to_supervised(data, n_in=1, n_out=1):

X, y = [], []

for i in range(len(data) - n_in - n_out + 1):

X.append(data[i:(i+n_in), 0])

y.append(data[(i+n_in):(i+n_in+n_out), 0])

return np.array(X), np.array(y)

n_lag = 3

n_out = 1

X, y = series_to_supervised(scaled, n_lag, n_out)

train_size = int(len(X)*0.8)

X_train, X_test = X[:train_size], X[train_size:]

y_train, y_test = y[:train_size], y[train_size:]

# Reshape for LSTM [samples, timesteps, features]

X_train = X_train.reshape((X_train.shape[0], X_train.shape[1], 1))

X_test = X_test.reshape((X_test.shape[0], X_test.shape[1], 1))

model = Sequential()

model.add(LSTM(50, input_shape=(n_lag, 1), activation='relu'))

model.add(Dense(n_out))

model.compile(optimizer='adam', loss='mse')

model.fit(X_train, y_train, epochs=20, batch_size=32, verbose=1)

# Make predictions

preds = model.predict(X_test)

preds_rescaled = scaler.inverse_transform(preds)

# Evaluate

y_test_rescaled = scaler.inverse_transform(y_test)

`} />One can expand upon this to do multi-step forecasts, incorporate more features, or build more sophisticated neural network architectures like CNN-LSTMs, Transformers, or advanced "N-BEATS" approaches (Oreshkin and gang, 2020, ICLR) for time series forecasting.

References

Below is a partial list of references and further reading:

- Cleveland, R. B., Cleveland, W. S., McRae, J. E., & Terpenning, I. (1990). "STL: A Seasonal-Trend Decomposition Procedure Based on Loess." Journal of Official Statistics, 6, 3–73.

- Hyndman, R. J., & Athanasopoulos, G. (2021). Forecasting: principles and practice (3rd ed). OTexts.

- Box, G. E. P., Jenkins, G. M., & Reinsel, G. C. (2015). Time Series Analysis: Forecasting and Control. John Wiley & Sons.

- Hochreiter, S., & Schmidhuber, J. (1997). "Long Short-Term Memory." Neural Computation, 9(8), 1735–1780.

- Oreshkin, B. N., Carpov, D., Chapados, N., & Bengio, Y. (2020). "N-BEATS: Neural basis expansion analysis for interpretable time series forecasting." International Conference on Learning Representations (ICLR).

- Taylor, S. J., & Letham, B. (2018). "Forecasting at Scale." The American Statistician, 72(1), 37–45.

- Durbin, J., & Koopman, S. J. (2012). Time Series Analysis by State Space Methods. Oxford University Press.

- Athanasopoulos, G., Hyndman, R. J., Song, H., & Wu, D. C. (2011). "The tourism forecasting competition data." International Journal of Forecasting, 27(3), 822–832.

- Seeger, M., Salinas, D., & Flunkert, V. (2017). "Bayesian Intermittent Demand Forecasting for Large Inventories." NeurIPS.

- Salinas, D., Flunkert, V., Gasthaus, J., & Januschowski, T. (2020). "DeepAR: Probabilistic forecasting with autoregressive recurrent networks." International Journal of Forecasting, 36(3), 1181–1191.

Conclusion

Time series analysis and forecasting is a rich field with broad applications and a large toolbox of methods. Classical approaches like ARIMA or exponential smoothing remain widely used and are important references for interpretability and reliability. However, data scientists now also have access to advanced approaches (state space models, vector autoregression for multivariate, deep learning architectures such as LSTM, CNN-LSTM, Transformers, etc.).

No single method universally outperforms all others; success depends heavily on domain knowledge, data characteristics (trend, seasonality, outliers, missing values), the forecast horizon, and evaluation criteria. Cross-validation designed specifically for time series is crucial for robust performance estimation. Always start with an understanding of your data and domain context, build baseline models for reference (like naive or basic exponential smoothing), then iterate with more sophisticated approaches, carefully validating and monitoring your forecasts over time.

In professional settings, consider the full lifecycle of time series solutions: data ingestion, cleaning, model building, validation, deployment, monitoring, and retraining. With thorough exploratory data analysis, well-chosen methods, and a systematic approach to validation, you can produce accurate and trustworthy time series forecasts that drive valuable decisions and insights.