🎓 54/2

This post is a part of the Probabilistic models & Bayesian methods educational series from my free course. Please keep in mind that the correct sequence of posts is outlined on the course page, while it can be arbitrary in Research.

I'm also happy to announce that I've started working on standalone paid courses, so you could support my work and get cheap educational material. These courses will be of completely different quality, with more theoretical depth and niche focus, and will feature challenging projects, quizzes, exercises, video lectures and supplementary stuff. Stay tuned!

Sequential models play a central role in modern machine learning and data science, allowing us to capture and exploit temporal or ordered dependencies in data. These dependencies arise in a wide variety of scenarios—ranging from audio processing to predicting financial market fluctuations over time. Classical approaches to sequence modeling, such as finite state machines and Markov models, have a rich theoretical history dating back decades, establishing the foundational principles of how ordered data can be incorporated into probabilistic frameworks. In more contemporary research, the limits of these classical methods have led to the development of sophisticated neural network-based approaches—such as recurrent neural networks, transformers, and advanced sequence-to-sequence architectures—that are adept at modeling both short-term and long-term dependencies.

It is often said that sequences are everywhere: what might be labeled as just temporal data in signal processing could appear in natural language tasks like translation or speech recognition, in financial applications like automated trading, or even in time-series forecasting of climate variables. While images can be considered two-dimensional grids and graphs can be considered structured relationships, sequential data is special in that each data point in the sequence inherently relies on its predecessors in an evolving temporal (or logical) context. In many real-world problems, capturing such additional context can drastically improve predictive performance and interpretability.

For this reason, sequential models constitute an extensive research area spanning control theory, algorithmic state machines, dynamic systems, and various flavors of probabilistic graphical models. Early forms of sequential models, such as finite state machines (FSMs), lay out discrete transitions from one state to another based on well-defined rules. Meanwhile, linear time-invariant (LTI) systems address continuous or discrete signals with the property that simple transformations in one domain correspond to linear shifts in another—allowing for convolution-based treatments and the exploitation of superposition principles. In the probabilistic setting, Markov models highlight the importance of memorylessness (or limited memory) in capturing the flow of probabilities from one state to another. That memoryless characteristic, although restrictive in certain tasks, has become a cornerstone of numerous specialized frameworks that are still widely used in both academic and commercial settings.

In advanced machine learning contexts, there's been a drive to combine or generalize these classical paradigms, leading to models that incorporate partial observability (as in Hidden Markov Models), optimum decision-making under uncertainty (as in Markov Decision Processes), or continuous control signals (as in linear and nonlinear dynamical systems). With the rise of neural networks, practitioners have also turned to recurrent cells that can replicate or approximate the functionalities of these classical frameworks, while offering the generalization power and representational flexibility of deep learning. This interplay between deep neural networks and well-established sequential modeling theory continues to be a vibrant research direction (Johnson and gang, ICML 2020; Smith and gang, NeurIPS 2022).

In this article, I will explore key conceptual pillars that underlie the notion of sequential modeling. We begin with a survey of sequential data and highlight the unique constraints that come from dependence over time. We then cover finite state machines and linear time-invariant systems, contrasting their basic premises. A brief treatment of Markov models and Markov decision processes follows, illustrating how probabilistic transitions—often influenced by unknown factors—are addressed in classical sequential machine learning. Since Markov chains and hidden Markov models are so fundamental, we will outline those but postpone more detailed inference techniques (like the forward-backward algorithm and the Viterbi decoder) to a subsequent article. After contrasting deterministic vs. probabilistic transitions, we move on to a discussion of dynamic systems and deeper insights into how transition functions evolve over time. Finally, we present real-world applications and limitations, thereby establishing a foundation for advanced neural sequential modeling and other modern tools.

Readers should come away from this material with a grounding in the theoretical frameworks historically used to model sequential data. By explaining the conceptual building blocks—from simple finite state transitions to those that evolve according to a time-based rule or random process—this article sets the stage for deeper investigations into hidden Markov models, recurrent neural networks, and contemporary sequence modeling breakthroughs. Moreover, I will point out how these highly structured approaches go hand in hand with engineering large-scale systems for real-world tasks, a topic of immense importance in professional data science and ML engineering settings.

Because the realm of sequential models is vast, I encourage exploring specialized references for further reading, such as Rabiner's foundational work on Hidden Markov Models (Rabiner, Proc. IEEE 1989) and texts on advanced control and dynamic systems (e.g., Astrom & Murray, "Feedback Systems", Princeton University Press). However, the present article aims to give you enough background to appreciate their essential mechanics and choose which approach best aligns with your project or research. Without further ado, let's dive into the nature of sequential data and see where it inevitably leads us in terms of modeling choices.

2. Understanding sequential data

Sequential data can be characterized as any data where order matters. This ordering may be temporal (e.g., the sequence of daily stock prices) or logical (e.g., the sequence of words in a sentence). By "order matters", I mean that rearranging the data typically destroys crucial patterns that reflect how one element relates to another in time or conceptual progression. Recognizing these relationships allows us to capture how past events or observations affect the present and potentially inform future predictions.

In time-series analysis, for instance, we often examine a sequence where each is observed at time and might be correlated with previous time steps. This correlation could be anything from short-term memory (the next step depends on only the immediate preceding step) to more complex influences that extend over many time points. In some advanced tasks, phenomena such as seasonality or cyclical patterns are superimposed on these basic temporal structures, necessitating more elaborate modeling approaches.

Another important context is natural language processing. A sentence is a sequence of words, and each word's meaning depends strongly on the words that come before it. Consequently, handling tasks like machine translation, sentiment analysis, or speech recognition involves capturing nuanced dependencies that can stretch over entire sentences or paragraphs.

While many might think of sequential data purely in a discrete sense, sequences can also be continuous. For example, an acoustic signal can be modeled as a continuous-valued signal over time, or video frames can be considered a sequence of images each continuously valued in pixel space. Yet, from a more abstract vantage point, even continuous signals are often sampled and treated as discrete time steps in practical computational frameworks. On the other hand, some sequences have irregular time intervals between consecutive elements, which introduces complexity in alignment, interpolation, or re-sampling to form a coherent dataset.

The importance of domain knowledge in analyzing sequential data cannot be overstated. In finance, for instance, specialized knowledge about market microstructure, correlation breaks, or economic covariates can significantly improve the interpretability and predictive power of models. In contrast, purely data-driven approaches—like those found in deep learning—may discover these relationships automatically, but require copious amounts of data and careful tuning to achieve stable results.

One of the major challenges in modeling sequential data arises from the fact that earlier events can have a direct or indirect influence on future events in intricate ways. If we attempt to capture every single historical point, we can end up with extremely large models, which might be prone to overfitting or computational infeasibility. Conversely, ignoring crucial context can lead to underfitting and suboptimal predictions. The dynamic tension between storing relevant past information and ignoring noise or irrelevant details is precisely the reason that so many specialized sequential modeling techniques exist.

In summary, the concept of sequential data is rich and varied, spanning discrete, continuous, temporal, logical, and other domains. Recognizing that each data point is connected to its neighbors sets the stage for specialized frameworks that can incorporate these dependencies explicitly or implicitly. The next step in our journey is to explore how the notion of "states" forms a fundamental building block in sequential modeling and how finite state machines (FSMs) provide one of the earliest systematic treatments of state-based transitions.

3. Fundamentals of state machines

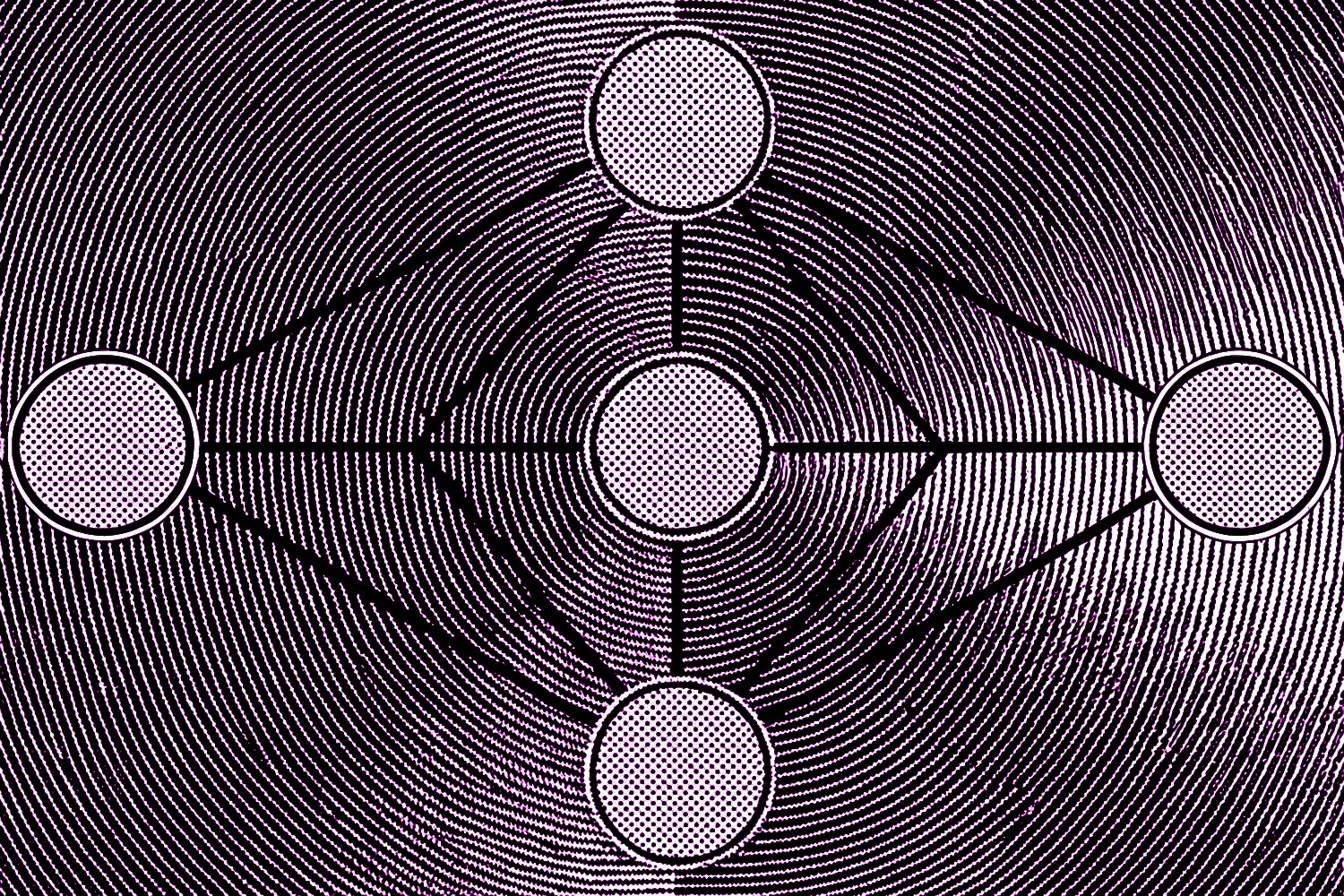

A state machine, broadly speaking, is an abstract mathematical model that represents a system in terms of a finite (or sometimes infinite) set of conditions called "states". At any given point in time, the system is in exactly one of these states. State transitions are governed by rules that specify how the system moves from one state to another based on inputs, events, or probabilistic mechanisms. Finite state machines (FSMs) are a classic tool in computer science and engineering, used for parsing regular languages, designing digital circuits, or analyzing protocol behavior in a networking context.

3.1 Defining states and transitions

In the simplest form of a deterministic finite automaton (DFA), we can define a set of possible states, an initial state , an input alphabet , and a transition function that specifies which new state is reached when the machine is in state and receives input . Formally, we might write:

Here, is the current state, and is the current input symbol. The machine can produce an output or simply determine whether a final (or accepting) state is reached. Although FSMs are typically introduced in undergraduate computer science courses, they remain essential for building an intuitive foundation about how sequences of observations (the input string) lead to transitions and eventual outcomes (the acceptance or rejection of that string in classic automata theory).

3.2 Deterministic vs. non-deterministic finite state machines

In a deterministic finite machine, each pair has exactly one successor state . However, in a non-deterministic machine (NFA), multiple transitions can be available or even none, depending on the structure of the graph. Despite these differences, NFAs and DFAs are equivalent in terms of the formal languages they recognize. In practice, non-determinism can be computationally expensive to handle, but it also provides expressiveness in designing certain algorithms (for instance, using the powerset construction to convert from NFA to DFA).

3.3 Using finite state machines in machine learning contexts

In a machine learning context, FSMs can appear as controllers in reinforcement learning environments or simplified models for certain decision processes. For instance, a chatbot might be managed by a finite state logic specifying possible conversation states and transitions. However, such systems typically rely on strict, handcrafted rules and do not incorporate uncertainty directly. To capture randomness, we would pivot toward stochastic state machines, among which Markov models and hidden Markov models are prime examples. We will introduce these in a later section but defer the deeper technical details to a future text.

3.4 Example: A simple text parser FSM

Suppose we want to build an extremely simplified parser that reads a string of characters and checks if it matches a pattern—like verifying a simplified format of an email address. A naive FSM might have several states representing parts of the address, e.g., LOCAL_PART, AT_SYMBOL, and DOMAIN. The transitions are triggered by reading characters. Although this is a relatively trivial example, it demonstrates how a sequence of ASCII characters is fed into a system, which transitions states until a final "valid email address" or "invalid token" verdict is reached.

Below is a short code snippet showing how one might implement a tiny deterministic FSM in Python, just for demonstration. The machine reads a sequence of characters from an input string and transitions accordingly, ending in either an accepting or rejecting state:

class SimpleEmailFSM:

def __init__(self):

self.state = "START"

self.accepting_states = {"DOMAIN"}

def transition(self, symbol):

if self.state == "START":

if symbol.isalnum():

self.state = "LOCAL_PART"

else:

self.state = "REJECT"

elif self.state == "LOCAL_PART":

if symbol == "@":

self.state = "AT_SYMBOL"

elif symbol.isalnum() or symbol in {".", "_"}:

# remain in LOCAL_PART

pass

else:

self.state = "REJECT"

elif self.state == "AT_SYMBOL":

if symbol.isalnum():

self.state = "DOMAIN"

else:

self.state = "REJECT"

elif self.state == "DOMAIN":

if not (symbol.isalnum() or symbol == "."):

self.state = "REJECT"

def run(self, input_string):

for char in input_string:

self.transition(char)

if self.state == "REJECT":

return False

return self.state in self.accepting_states

fsm = SimpleEmailFSM()

test_string = "user.name@domain"

result = fsm.run(test_string)

if result:

print("ACCEPTED")

else:

print("REJECTED")

In this rudimentary illustration, the model transitions through states based on the input sequence of characters. Of course, real-world text parsing relies on more complex libraries and logic, but the essence remains the same: sequential data is fed in, and transitions through a well-defined graph determine the output.

State machines thus provide a simple, elegant lens through which to view deterministic transformations of sequential inputs. However, many real-world processes are not strictly deterministic. Enter Markov models, which incorporate probability into the transitions. Before we get there, let's pause to examine another perspective on sequential modeling: linear time-invariant (LTI) systems, which arise frequently in signal processing, control theory, and certain machine learning contexts.

4. Linear time-invariant (LTI) systems

Linear time-invariant (LTI) systems are pervasive in control theory, signal processing, and many engineering applications. Despite being introduced outside the mainstream machine learning literature, the techniques for dealing with LTI systems—especially convolution and Fourier transforms—have deeply influenced how we conceptualize sequence analysis. An LTI system is defined by two key properties:

- Linearity: The system's response to a linear combination of inputs is the linear combination of the responses to each input individually.

- Time-invariance: The system behaves the same way regardless of when the input is applied; that is, shifting the input in time simply shifts the output by the same amount.

In discrete time, an LTI system can be described via a difference equation:

where is the input signal sequence, is the output sequence, and are coefficients. The parameter indicates how many past inputs affect the current output. Each variable here reflects a single point in the temporal sequence. Because the system is linear, scaling or summing inputs yields outputs scaled or summed in the same proportion. Because it is time-invariant, shifting the input sequence by some integer simply shifts the output by the same steps, with no other change in the functional relationship.

4.1 Impulse response and convolution

An alternative but equivalent perspective is to consider the impulse response of an LTI system. If denotes the output of the system to a delta impulse at time 0, then for an arbitrary input sequence , the output is the convolution:

Of course, in many practical scenarios, is nonzero for only a finite range of . Thus, LTI systems can be neatly characterized by that impulse response, and their behavior is captured by the convolution operation. Convolution is widely used in machine learning as well, particularly in convolutional neural networks (CNNs), which, although initially designed for spatial data (images), are conceptually related to how signals propagate through linear shift-invariant filters.

4.2 Relation to sequential models

While LTI theory typically appears in the domain of signal processing or control, the idea of recurrences based on previous states or inputs is intimately connected to how we think about sequential data in time-series. Indeed, many ARMA (AutoRegressive Moving Average) models from classical time-series forecasting can be viewed through the lens of discrete LTI systems. The notion of stationarity, a close analogue to time-invariance in stochastic processes, ties these classical methods to the Markov property or to more general random processes with certain stable characteristics over time.

4.3 Continuous-time vs. discrete-time LTI

In some cases, especially in physical systems, it is more natural to treat time as continuous. Then we define differential equations instead of difference equations. A continuous-time LTI system obeys:

Although the details differ from the discrete-time setting, the conceptual underpinnings remain consistent: linearity and time-invariance permit a well-structured mathematical treatment, including Laplace transforms for solving differential equations.

4.4 Applications and relevance

Because LTI systems are relatively straightforward to analyze, they have provided fundamental insights into how signals evolve, how feedback loops can be designed, and how stable control can be maintained. For instance, the design of certain feedback controllers in robotics or automation heavily relies on LTI frameworks. In machine learning, knowing these principles can guide both the feature engineering for time-series tasks (like applying convolution-based filters) and the understanding of how neural networks can replicate or extend classical linear ideas. Researchers have explored how specialized recurrent neural network cells can emulate LTI systems while also incorporating nonlinearities (see Bai, Koltun, and Kolter, ICLR 2018 for discussions on recurrent architectures that approximate certain linear behaviors).

Hence, LTI systems offer a robust vantage point for understanding sequences governed by linear, shift-invariant processes. But many systems we encounter in real-world domains have additional complexity—particularly regarding uncertainty and state transitions that might not be strictly linear. This is where Markov models come into play, adding the crucial element of probabilistic transitions.

5. Markov models

Markov models, named after the mathematician Andrey Markov, are a foundational class of models for describing processes that undergo transitions from one state to another in a probabilistic manner. The simplest variant is the Markov chain, in which the probability of transitioning to the next state depends only on the current state and not on any additional historical information. Formally, this memoryless property can be expressed as:

Here, the random variable denotes the state at time . This assumption greatly simplifies modeling and inference, because we only need to track a finite number of conditional probabilities, typically organized in a transition matrix for discrete-state processes.

5.1 Transition matrices

For a Markov chain with a finite set of states , the transition probabilities can be summarized as:

yielding a transition matrix of size . Each row in this matrix sums to 1, reflecting the fact that the probabilities of transitioning from a given state to all possible next states must total 1. In practice, this matrix can be learned from data by simply counting occurrences (in a straightforward maximum likelihood approach) or by employing Bayesian estimation with prior distributions over the rows of the matrix.

5.2 Hidden Markov models (Preview)

Hidden Markov models (HMMs) extend the basic Markov chain by introducing an observation model. The system transitions among hidden states following the Markov property, but we only observe some emission or output that depends on the hidden state. This framework explains how time-series data might be generated by an underlying Markov chain that we can't directly see, and many classical inference algorithms have been developed to handle tasks such as state decoding and parameter learning. Since a detailed look at HMMs involves the forward-backward algorithm, Viterbi decoding, scaling factors, and various expansions (like factorial HMMs and hierarchical HMMs), we will postpone the deeper investigation of these methods to a subsequent article.

5.3 Benefits and limitations of Markov models

Markov models can be appealing because they offer a clean, mathematically grounded way to handle sequential data that exhibits memoryless or limited-memory dynamics. They are relatively easy to implement and interpret, especially if the state space is not too large. Moreover, they integrate naturally into many advanced frameworks for decision-making (Markov decision processes, discussed next) and reinforcement learning.

On the downside, the Markov assumption can be restrictive for processes that exhibit long-range temporal dependencies. In practice, if the phenomenon in question depends on events that occurred far in the past, a simple first-order Markov chain might perform poorly. One can extend the chain to second- or higher-order Markov processes, but that leads to rapid state space explosion and can become unwieldy. Alternatively, one might move beyond Markov assumptions entirely, or adopt neural sequence models that can approximate more complex dependencies.

5.4 Practical usage across domains

Markov models have found success in areas like speech recognition (especially earlier generation systems based on hidden Markov models), modeling financial returns (although these might rarely be purely Markovian in practice), and simpler natural language processing tasks. In each domain, the key is identifying a suitable state representation and a consistent way to estimate or initialize transition probabilities. Once the state space becomes too large or too continuous, more advanced variants (like particle filters, continuous-state HMMs, or neural approaches) may be warranted.

Because Markov models provide a smooth transition from purely deterministic finite state machines to more probabilistic treatments of sequential data, they are an essential stepping stone in understanding how modern sequence modeling developed. Building on Markov chains, one can expand to Markov decision processes, which incorporate the notion of actions and rewards—central to fields like reinforcement learning. That is our next topic.

6. Markov decision processes (MDPs)

A Markov decision process (MDP) is a mathematical framework used for modeling decision-making tasks in which outcomes are partly random and partly under the control of a decision-maker. Formally, an MDP is specified by:

• A set of states

• A set of actions

• A transition probability function indicating how likely the process is to move to a new state given that action is taken in state

• A reward function or that gives the immediate reward for making an action in state and transitioning to

• A discount factor (0 ≤ < 1) that weights the importance of future rewards

6.1 The role of the policy

The core objective in an MDP is to find an optimal policy, , that maximizes the expected sum of discounted rewards. A policy is a mapping from states to actions—possibly stochastic—describing which action should be taken in each state. Once a policy is fixed, the MDP effectively becomes a Markov chain, where the transitions are determined by the chosen actions.

6.2 Value functions

A common approach is to define the value function as the expected return (sum of discounted rewards) starting from state and following policy thereafter. The Bellman equation for this value function is:

Here, the random variable is sampled according to the policy's probability distribution over actions , and indicates that the next state is governed by the transition probabilities. The optimal value function is the maximum over all possible policies, yielding that satisfies the Bellman optimality equation.

6.3 Finite-horizon vs. infinite-horizon MDPs

If we consider a finite time window of length , the MDP is finite-horizon, and we can solve it via backward induction. In infinite-horizon problems, we assume the process continues indefinitely, and we often introduce the discount factor to ensure convergence of the value function. Reinforcement learning algorithms such as Q-learning and policy gradient methods revolve around approximating or directly learning an optimal or near-optimal policy for MDPs, especially when the state and action spaces become very large.

6.4 Applications of MDPs

MDPs are omnipresent in fields like autonomous robotics, game playing (e.g., matching the approach used by AlphaGo or AlphaZero), operational research (inventory management, scheduling, etc.), and healthcare (optimal treatment policies). They represent the bridging point between sequential modeling and decision-making under uncertainty. Many real-world tasks can be framed as or approximated by MDPs, providing a mechanism to systematically plan or learn a strategy that maximizes long-term returns.

7. Comparing finite state machines and Markov models

We have seen how finite state machines revolve around deterministic or non-deterministic transitions triggered by specific inputs, whereas Markov models treat transitions probabilistically, typically ignoring external inputs (basic Markov chains) or summarizing them in action-based transitions (in MDPs). To highlight a few key comparisons:

-

Nature of transitions:

— FSMs: Transitions are triggered by specific input symbols or events, usually deterministic if it's a DFA.

— Markov chains: Transitions occur with certain probabilities, capturing uncertainty about which next state will occur. -

Determinism vs. randomness:

— FSMs (deterministic variants): Next state is a function of the current state and input.

— Markov chains: Next state is drawn from a probability distribution conditioned on the current state (and possibly the action if we are in an MDP scenario). -

Model complexity:

— FSMs can capture complex transition logic but often rely on hand-crafted rules.

— Markov models can be learned systematically using statistical or Bayesian methods, but the Markov assumption can be restrictive for processes with long memory. -

Suitability:

— FSMs are great for well-defined systems with discrete inputs and known transitions (e.g., protocol design, certain text parsing tasks).

— Markov models are suited to stochastic processes where uncertainty is intrinsic (e.g., speech, genetics, stock returns).

In practice, there is no single right or wrong choice for a particular modeling problem. The selection depends on domain knowledge, availability of data, complexity constraints, and performance requirements. An FSM might suffice if you have exact knowledge of transitions, while a Markov-based approach may be necessary if your system is inherently random or if you must infer transitions from data. Understanding each viewpoint fosters a richer toolkit for tackling varied sequential data.

8. Temporal dependencies in sequential models

One of the deepest and most fundamental concepts in sequence modeling is the notion of temporal dependency—how the future depends on the past. Temporal dependencies can be short-range or long-range, linear or nonlinear, deterministic or stochastic. They can also exhibit patterns like seasonality, periodicity, or abrupt regime shifts (common in financial time-series).

8.1 Short-term vs. long-term dependencies

Short-term dependencies can often be successfully captured by first-order Markov chains or simple finite memory processes. However, real-world systems often have underlying factors that accumulate or persist over extended periods, creating the phenomenon of long-term dependencies. Handling these effectively requires more complex structures—like higher-order Markov chains, HMMs with extended states, or modern neural architectures (e.g., LSTM, GRU). The tension between memory capacity and computational tractability is a driving motivator in advanced sequence model design.

8.2 Capturing context over time

In language modeling, a short-term approach might only look at the previous word to predict the next. However, context spanning entire sentences or paragraphs is typically crucial. Similarly, in time-series analysis, predicting tomorrow's temperature may require more than just today's temperature; one might need weekly or seasonal signals. Classical solutions include embedding additional features or building more advanced state representations, while neural approaches can learn these features adaptively if given enough data.

8.3 Handling partial observability

In many real systems, the underlying state is not fully observable. Hidden Markov models exemplify how we may handle partial observability: we treat the hidden state as a latent variable and the observed signals as indicators of that state. Neural architectures do something similar, effectively learning a hidden embedding that attempts to capture the relevant context.

8.4 Common challenges

Challenges in tackling temporal dependencies include:

• Learning or inferring a representation that meaningfully captures the relevant history without being overwhelmed by noise.

• Dealing with non-stationary data, where the process's statistical properties can change over time.

• Handling missing data or irregular sampling intervals.

• Maintaining computational efficiency when the sequence grows very long.

In sum, temporal dependencies in sequential data can be both a blessing and a curse. They enrich the predictive structure available to a model while simultaneously complicating the modeling process. Below, we take a broader view of how these dependencies manifest in dynamic systems.

9. Overview of dynamic systems

Dynamic systems provide a unifying concept for many types of sequential processes. A dynamic system is one whose state evolves over time according to some rule, which can be deterministic or stochastic, linear or nonlinear. In mathematics, dynamic systems are studied extensively in differential equations, chaos theory, control theory, and beyond. In machine learning, dynamic system concepts appear in state-space models, recurrent neural architectures, and hybrid domain-specific applications.

9.1 Discrete-time dynamical systems

We can express a discrete-time dynamical system via a state update rule such as:

where is the state vector at time , is an external input or control, and defines how the state evolves. If is linear and time-invariant, we get the LTI scenario. If includes noise terms, we get a stochastic dynamic system. If is nonlinear, we can see more complex phenomena such as chaos and limit cycles.

9.2 Continuous-time dynamical systems

This is expressed similarly by a differential equation:

Here, the state evolves continuously. Many physical processes, including biological systems, mechanical systems, and chemical reactors, can be described with continuous-time dynamics.

9.3 State-space representation

In control engineering, a state-space representation is a well-known formalism:

where is hidden state, is observed output, and is external input. The matrices and define the linear relations. These equations form the core of linear dynamical systems (LDS). In more advanced treatments, especially in machine learning, we consider stochastic versions where and might be process and observation noise, respectively.

9.4 Why dynamic systems matter

Dynamic system theory offers an incredibly robust theoretical framework and is crucial for prediction, control, and stability analyses. Many sophisticated machine learning algorithms for time-series forecasting, system identification, or process control are built on the bedrock of dynamic systems. The synergy with neural networks arises when we approximate by a parameterized function, letting a neural architecture incorporate nonlinearity and handle large amounts of data more flexibly than classical analytic forms.

9.5 Hybrid approaches

Researchers continue to explore ways to fuse dynamic system models with deep networks—sometimes known as physics-informed neural networks or deep state-space models. The notion is that physically grounded domain knowledge from dynamic systems can be integrated with data-driven learning for improved performance and interpretability (see Raissi and gang, JMLR 2019).

Although dynamic systems can, in principle, encapsulate Markov processes, FSMs, or any other sequence-based approach by choosing appropriate formulations, they generally focus on a more direct mathematics of continuous transformations rather than discrete transitions. Next, we'll see how the idea of a transition function cuts across all these different sequential modeling paradigms.

10. Role of transition functions in modeling

The term "transition function" appears commonly in discussions of both deterministic state machines and probabilistic Markov models. Regardless of the framework, it defines how the current state (and possibly input or action) leads to the next state.

10.1 Transition function in FSMs

In a deterministic finite automaton, the function directs the machine from state to state upon receiving input . This function is discrete and typically described by a state transition table or graph.

10.2 Transition function in Markov chains

Here, the transition is a probability distribution over possible next states. Instead of each mapping to a single , we have describing the likelihood of each next state. This can be thought of as a "stochastic transition function".

10.3 Transition function in LTI systems

For LTI systems, the transition function is a linear operator that shifts the system from time to (or from to a continuous in the continuous-time case). Mathematically, the transition might be represented by a matrix multiplication (omitting inputs for simplicity).

10.4 Choosing an appropriate transition function

Selecting an appropriate transition function has practical implications:

• It determines how easily the model can be estimated from data. (Is it linear? Is it parametric?)

• It dictates computational costs. (Is the state space discrete, continuous, very large?)

• It shapes how well the model captures the underlying system's complexities. (Do we need a neural network to approximate ? Are linear approximations enough?)

In advanced scenarios, the transition function itself might be learned from data, reflecting a system that is not fully known beforehand. Parameter identification techniques, gradient-based methods, or Bayesian inference can all be employed to estimate the transition function in various sequential models.

11. Sequential models in real-world applications

So far, we have covered the conceptual frameworks underlying sequential models, from deterministic automata to probabilistic Markov chains and dynamic systems. In practice, these models find application across a host of domains:

-

Time-series analysis: Forecasting stock prices, demand planning, or climate variables often employs ARMA-type models (linear dynamical systems) or Markov-based frameworks, potentially augmented with external regressors. Ensemble or neural methods are also common, but the linear or Markov flavors are frequently used as baselines.

-

Speech and language processing: Hidden Markov models were the dominant approach in automatic speech recognition for many years. Modern deep learning architectures incorporate or loosely approximate these ideas, capturing speech as sequences of acoustic frames. Finite state machines also appear in certain rule-based or grammar-based text parsing systems.

-

Robotics and control: Markov decision processes, or more general dynamic systems, are fundamental in describing robotic state transitions. Combined with reinforcement learning, they let robots or autonomous vehicles make sequential decisions that balance immediate performance with long-term safety or efficiency.

-

Gaming and simulations: MDPs form the backbone of algorithms that solve board games (chess, Go, etc.) or complex multi-agent simulations. By framing the environment as a Markov model with states and probabilities, sophisticated strategies can be learned or planned.

-

User behavior modeling: E-commerce platforms and recommendation systems occasionally utilize Markov chains to model user navigation patterns. Although advanced deep learning methods can incorporate more contextual signals, a simple Markov chain approach can yield quick insights into probable transitions from one page or product to another.

-

Biological sequence analysis: In bioinformatics, Markov models (and their hidden variants) have been used for gene prediction or protein secondary structure analysis, relying on the assumption that the next symbol (nucleotide or amino acid) depends primarily on a limited window of preceding symbols.

Understanding these real-world contexts underscores the versatility and enduring importance of classical sequential modeling methods. Even where neural networks currently dominate, the underlying logic—states, transitions, memory, and dynamic evolution—can often be traced back to these classical principles.

12. Limitations of classical sequential models

Despite their broad utility, classical sequential models—finite state machines, Markov chains, or linear dynamical systems—have known limitations when confronted with the large-scale, highly nonlinear complexities of modern data. These issues often force practitioners to consider more advanced or hybrid solutions.

12.1 Scalability

FSMs can suffer from state-space explosion; as the complexity of the scenario grows, the number of states needed can skyrocket. Likewise, Markov chains with large or continuous state spaces become difficult to handle with simple transition matrices. Even approximate methods can struggle when dimensions scale up (i.e., in large-scale real-time analytics or continuous control).

12.2 Inflexibility in capturing long-term dependencies

First-order Markov assumptions or static linear transitions can fail to capture long-range correlations or intricate patterns that span many time steps. As we push to second-, third-, or higher-order Markov processes, or move to nonlinear systems, the model can become unwieldy, even if it better captures the data. Neural network–based approaches often provide a more flexible means of capturing extended temporal structures.

12.3 Dependence on domain knowledge

FSMs in particular typically require painstaking specification of states and transitions. Markov models are partially easier to estimate from data, but still may demand the definition of a meaningful state representation. If that representation is incomplete or artificially constrained, the model will not perform well. In domains with rich or high-dimensional signals, engineering states or transitions a priori may be impractical.

12.4 Handling partial observability

While hidden Markov models address partial observability, the underlying structure may still be insufficient for highly complex problems. If the real system has many interacting latent variables or a continuous latent space, classical HMM frameworks might be too simplistic.

12.5 Computation and inference overhead

Inference in Markov processes or linear dynamical systems is typically polynomial in the sequence length, but it can become expensive for large datasets—especially if the state space is large or continuous, or if we add hierarchical or factorial expansions. The forward-backward algorithm, for instance, has complexity for an HMM with states and a sequence length of . For large and , this quickly becomes substantial.

Given these constraints, it is unsurprising that more advanced innovations—especially those leveraging deep learning��—have become so prevalent. Nonetheless, classical sequential models hold a valuable place as baseline methods, interpretable frameworks, or components in hybrid solutions that combine domain knowledge with data-driven flexibility.

13. Setting the stage for advanced sequential models

The journey through classical sequential modeling—from finite state machines, through Markov chains, to LTI and dynamic systems—lays the bedrock for more sophisticated approaches. Although these classical methods each face certain limitations, they shine in various specialized contexts and continue to inform modern architectures in meaningful ways.

As we move forward in this course, you will encounter neural architectures that address many of the challenges outlined above. For example, recurrent neural networks (RNNs) and their variants (LSTM, GRU) can potentially capture very long-term dependencies by learning gating mechanisms. Transformer models, employing attention mechanisms over entire sequences, provide a different route to modeling complex dependencies. Hierarchical models, Bayesian nonparametrics, and continuous-time state-space neural networks are also being explored by cutting-edge research (e.g., Chen and gang, NeurIPS 2018 on Neural ODEs). Yet, these advanced approaches reflect, in spirit, the same fundamental ideas introduced by classical models: transitions through states over time, memory or partial memory, and a quest to reconcile stability, interpretability, and expressiveness.

In the next article, we will dive deeply into Markov models, describing hidden Markov models in detail and examining how they handle partial observability. We'll see how the forward-backward algorithm, sum-product approach, and Viterbi decoding technique can be derived and used for exact inference and learning. We'll also explore interesting extensions like hierarchical HMMs, factorial HMMs, and how these expansions attempt to overcome some of the classical constraints. Ultimately, understanding Markov models at that deeper level will further refine your perspective on why neural architectures have soared in popularity, and where the synergy between classical and deep approaches can be harnessed.

Until then, I encourage reviewing the frameworks introduced here and considering how they might apply to your own sequential data challenges. Whether it's a small-scale deterministic process, a random walk with finite states, a continuous control problem, or a partially observable environment, the conceptual building blocks will show up in advanced contexts repeatedly. And by appreciating the limitations, you'll be better equipped to choose or develop solutions that align with the complexity of real-world problems.

Stepping forward, we'll see how these theories translate into algorithms and practical applications — starting with the richly productive territory of Markov models.