🎓 55/2

This post is a part of the Probabilistic models & Bayesian methods educational series from my free course. Please keep in mind that the correct sequence of posts is outlined on the course page, while it can be arbitrary in Research.

I'm also happy to announce that I've started working on standalone paid courses, so you could support my work and get cheap educational material. These courses will be of completely different quality, with more theoretical depth and niche focus, and will feature challenging projects, quizzes, exercises, video lectures and supplementary stuff. Stay tuned!

Markov models, in their many forms and varieties, have proven extraordinarily useful in theoretical research as well as in real-world applications across data science, machine learning, and other advanced engineering fields. The overarching notion that a system can be described where "future" states depend solely on the "present" state — while ignoring specific details of how the process arrived in that state — offers a mathematically appealing and computationally tractable way of modeling a wide array of phenomena. These phenomena range from language modeling in natural language processing to user behavior in recommender systems, and from weather forecasting to high-tempo financial markets transitions. The notion of Markov chains was originally introduced by the mathematician Andrey Markov in the early 20th century, and it has since blossomed into a family of techniques, collectively known as Markov models, utilized heavily in fields such as physics, statistics, and machine learning.

When I refer to the "Markov property," I am specifically talking about the idea that, at any given point, the future evolution depends only on the current state and not on the specific sequence of events or states that preceded it. This deceptively simple assumption begets a huge range of analytically approachable and computationally feasible models. The Markov property can be extended or relaxed in many ways, leading to partially observed or hidden Markov models, hierarchical or layered structures, or continuous-state Markov processes. In practice, an entire research subfield has expanded around the computational aspects of parameter estimation, state inference, alignment, and learning for Markov structures, highlighting their importance and versatility.

In operational contexts, Markov models appear in many guises. At times, they might be used purely to capture the sequence of events with no underlying hidden structure needed, as in an ordinary Markov chain. In other scenarios, the chain of observed events is thought to be generated by a hidden process, and we want to infer that underlying process. The latter scenario is covered by Hidden Markov Models (HMMs), which have been famously applied to speech recognition and many other time-series tasks. Indeed, the widespread success of HMMs in speech, language modeling, and bioinformatics has established these models as a canonical approach for problems where the data is sequential or temporal in nature. Not surprisingly, modern deep learning pipelines also frequently incorporate Markov assumptions or Markov-inspired modules, though they might wrap them in more complex function approximators like recurrent neural networks or attention-based transformers. Nonetheless, it is still extremely useful to become intimately familiar with classic Markov and hidden Markov setups, both for fundamental insight and for potential integration into advanced systems.

In this article, I will present a fairly thorough introduction to Markov chains and Hidden Markov Models, then segue into more advanced or extended Markov model architectures, concluding with practical examples in Python that illustrate how these models might be coded up. The text will be long and detailed, particularly to serve as a deep resource for readers already experienced in machine learning or data science but eager to dive more rigorously into Markov-based frameworks.

1.1 Historical background

The origin of Markov models traces back to Andrey Markov's 1906 paper studying sequences of dependent trials. Markov's approach was initially overshadowed by more prominent research in probability theory that focused on independence assumptions. However, over the decades, researchers recognized the modeling power that Markov processes provided for systems with a certain dependence structure: only adjacent or local influences matter. By the mid-20th century, Markov chains were being analyzed extensively in the context of both pure probability theory (e.g., recurrent vs. transient states) and in applied contexts such as queueing theory, population genetics, and random walks.

Building upon these classical Markov chains, the notion of Hidden Markov Models (HMMs) came to fruition in the 1960s and 1970s, spurred largely by the needs of speech recognition research. A pivotal moment occurred when Leonard E. Baum and gang proposed rigorous methods for training HMMs, culminating in the Baum-Welch algorithm. Later, Lawrence R. Rabiner (Rabiner, 1989) provided a well-known tutorial that introduced a generation of engineers and computational linguists to the intricacies of HMMs. Since then, HMMs have been canonized in machine learning textbooks (Bishop, 2006; Murphy, 2012) as standard models for analyzing sequential data, inspiring numerous generalizations and specialized variations.

1.2 The concept of the Markov property

The formal Markov property can be summed up quite succinctly: if is a sequence of random variables describing the state of a process at each discrete time step t, then the property states:

This is easy to read as: given the present state , the next state's distribution does not depend on the previous states . The reason the Markov property is so important is that it drastically simplifies the modeling of dynamics. Instead of an explosion of combinatorial complexity in the full history of states, we only need to keep track of the current state and a finite set of parameters (such as transition probabilities) that describe how we move from one state to another.

One of the underpinnings of successful Markov modeling is to define the "state" in such a way that the Markov property actually holds. In real-world problems, a naive definition of the system's state might lead to memory effects that violate the property, requiring a more refined or complex definition of what it means to be in a given state. Despite these considerations, Markov models remain elegantly simple and powerful in their pure form.

2. Markov chains

The purest form of a Markov model is the Markov chain (MC). This typically refers to a discrete-time, discrete-state process (originally, many works started indexing at t=0 or t=1, but it does not really matter for the theory). Each random variable takes values in a state space S, which is finite or countably infinite for discrete Markov chains (there are continuous-state extensions, but let's put that aside for the moment).

2.1 Formal definition

A discrete-time Markov chain is a sequence of random variables that satisfies the Markov property:

for any states i, j in the state space S. The probabilities can be gathered into a transition probability matrix when S is finite. Denote this matrix by with entries:

Because these probabilities do not depend on t (time-homogeneous chain) in the simplest scenario, the same matrix P is used repeatedly for all time steps.

2.2 Transition probability matrix

When the Markov chain has a finite state space S = {1, 2, …, N}, we can write:

where each row i of P sums to 1, reflecting that from state i, the chain must transition to one of the N possible states (including possibly staying in state i). These matrix elements capture the entire dynamics of the Markov chain in the homogeneous case. Non-homogeneous Markov chains, where the transition probabilities can depend on t, do exist but are often more complicated to work with. For the majority of standard usage in data science or ML contexts, we will typically assume stationarity of transition probabilities.

2.3 Classification of states

The classification of states in a Markov chain is a foundational concept that reveals a great deal of the chain's long-term behavior. If you consider a finite Markov chain, states can be:

- Transient: A state i is transient if, starting from i, there is a non-zero probability of never returning to i in the future. Intuitively, you can "escape" from i and never come back in some fraction of possible trajectories.

- Recurrent: A state i is recurrent if you will, with probability 1, eventually come back to i infinitely many times. Recurrent states can be further classified into:

- Null recurrent (expected return time is infinite).

- Positive recurrent (expected return time is finite).

- Absorbing: A special type of recurrent state that, once entered, cannot be left. For instance, if and for j ≠ i, state i is absorbing.

A helpful way to analyze the chain is by constructing the transition graph, where each state is a node and edges connect states i→j with weight . Then, each strongly connected component in this graph corresponds to a subset of states among which the chain can hop around indefinitely. It is within these strongly connected components that we can find cycles whose presence or absence can drastically affect the chain's limiting behavior.

2.4 Fundamental limit theorems and stationary distributions

One of the great joys of Markov chain theory is the existence of clean results about long-run equilibria and convergence under fairly mild conditions. If a Markov chain is irreducible (meaning you can get from any state to any other state in some number of steps) and aperiodic (meaning the chain does not operate in a strict cyclical pattern), then the chain is said to be ergodic. An ergodic Markov chain often has a unique stationary distribution , which is a row vector that satisfies:

Semantically, is a distribution over states that remains unchanged by the application of the transition matrix. If one starts the chain in the stationary distribution, it remains there for all time. For finite, irreducible, and aperiodic Markov chains, an equivalent statement is that for any initial distribution , we have:

This is a powerful result, illustrating that the chain "forgets" its starting point in the long run, converging to the same stationary behavior no matter where it began. The existence of a limiting distribution has been leveraged in algorithms like Google's PageRank, which is essentially a Markov chain on the directed web graph. In PageRank, the stationary distribution of the chain indicates the proportion of time spent on each node (webpage) in the infinite time horizon, thus providing a measure of relative importance.

2.5 Advanced topics in Markov chain theory

From a machine learning vantage point, there are numerous advanced aspects of Markov chain theory that can be relevant for sampling, optimization, and more. Some topics that appear in cutting-edge research and might be of interest are:

- Mixing times and convergence rates: Just because a chain has a stationary distribution does not necessarily mean it converges quickly. The mixing time quantifies how many steps are necessary for a chain to be "close" to its stationary distribution under some metric. This topic is crucial in Markov chain Monte Carlo (MCMC) methods for Bayesian inference.

- Coupling arguments: The technique of coupling two Markov chains of interest can be used to derive bounds on mixing rates or to prove advanced properties in probability theory.

- Spectral gap and eigenvalue analysis: The transition matrix P has eigenvalues that govern the chain's convergence behavior. The spectral radius typically encodes how quickly or slowly a chain approaches stationarity.

All of these advanced topics build on the fundamental notion of how a Markov chain transitions among states and how that structure yields well-defined long-term behaviors.

3. Hidden Markov Models

While Markov chains often assume that the state is fully observable at each time step, many real-world sequences are only partially observable. We instead see an output or emission from each hidden state. Hidden Markov Models (HMMs) incorporate the Markov property within a latent (unobserved) state sequence and specify an emission process for the observed data. Formally:

- We have hidden states that form a Markov chain.

- At each time step t, a random output is generated according to some conditional distribution .

In many textbooks, you will see the standard HMM defined by three probability distributions:

- The initial state distribution .

- The transition distribution .

- The emission distribution .

3.1 Definition and structure

Concretely, an HMM can be represented with:

where the observed sequence is dependent on but not on except through . The Markov assumption is on only, which is hidden, hence the name hidden Markov model. This is different from a typical Markov chain in that the states are not directly observable; we only see noisy or partial observations of that state.

HMMs are widely used in sequence labeling tasks. For instance, in part-of-speech tagging in natural language processing, the hidden states might be the syntactic category labels (noun, verb, adjective, etc.) at each word token, and the emissions are the actual observed words. The Markov assumption posits that the sequence of tags adheres to a Markov chain, while each tag stochastically emits word tokens.

3.2 Forward-backward algorithm

One of the hallmark results in the theory of HMMs is the forward-backward algorithm, which efficiently computes the posterior probabilities of hidden states at each time step, given a sequence of observations . The forward-backward algorithm is essentially a dynamic programming routine that iterates forward over time collecting partial probabilities (the "forward" pass) and then backward over time (the "backward" pass), ensuring an overall complexity for an N-state HMM over T timesteps.

For each time step t and state i, define the forward variable:

and the backward variable:

Then, the posterior probability that given the entire observation sequence is:

Intuitively, measures how likely it is to arrive in state i at time t having generated the partial observation , while measures the probability of generating the remaining observations from i.

3.3 Viterbi decoding

In many scenarios, we want the single best hidden state sequence that explains the observation sequence in a maximum a posteriori (MAP) sense. The Viterbi algorithm solves this by employing a similar dynamic programming approach, but instead focuses on maximizing over all possible hidden state paths. The typical recurrence for the Viterbi algorithm is:

where is the probability (or log-probability if we take logs) of the most likely path that ends in state j at time t. Then, by tracking the maximizing index i at each step, we can backtrack at the end of the sequence to recover the best hidden state path. Viterbi decoding is widely used in speech recognition, in bioinformatics (gene sequence alignment), and in many other labeling and segmentation tasks.

3.4 Baum-Welch and parameter estimation

An essential piece of HMM modeling is parameter estimation. Often, we do not know the transition matrix a \) and emission probabilities b \). The Baum-Welch algorithm is the standard expectation-maximization (EM) procedure that tries to maximize the likelihood of the observed sequence wrt the model parameters. Each iteration consists of:

- An expectation step (E-step), in which we compute certain expected counts of transitions i→j and expected counts of emissions from states to observations using the forward-backward procedure.

- A maximization step (M-step), in which we update the transition and emission probability estimates by normalizing these expected counts.

This iterative approach, while not guaranteed to find the global maximum, typically converges to a local maximum of the data likelihood. Once an HMM is trained, it can be used for tasks such as decoding (finding the best hidden sequence) or evaluating the probability of newly observed sequences, as is common in anomaly detection or read alignment tasks.

4. Advanced or extended Markov models

Although discrete-time, discrete-state Markov chains and hidden Markov models are extremely common, the scope of Markov modeling is far broader. A number of generalizations or extended frameworks exist, each addressing modeling challenges in complex real-world systems:

4.1 Partially observed Markov decision processes (POMDPs)

When there is an agent that can take actions, transitions happen in the environment according to Markovian dynamics, and we only partially observe the underlying system state, we arrive at the domain of partially observed Markov decision processes (POMDPs). POMDPs are a central model in reinforcement learning for tasks involving uncertainty about the agent's true state. Typically, the agent tries to choose actions that maximize expected future reward, while also reducing uncertainty via observations. Albeit more cognitively complex, a POMDP is essentially an HMM combined with a decision-making component.

4.2 Hierarchical hidden Markov models

In some applications, we might want to group consecutive states into higher-level meta-states or incorporate multiple layers of hidden variables, leading to hierarchical hidden Markov models. For instance, if you are modeling a user browsing behavior, you might define a higher-level hidden state that corresponds to the user's broad intent or interest, and then a lower-level chain that describes specific page-to-page transitions. Hierarchical HMMs can capture these multi-level phenomena with layered Markov assumptions, although they often require more elaborate parameterization and inference algorithms.

4.3 Continuous-state Markov models

In certain applications (e.g., modeling stock prices or physical systems), the underlying states are better represented as continuous variables. A standard approach is to move from discrete Markov chains to continuous-state Markov processes (like the Ornstein-Uhlenbeck process or the Wiener process), or to use HMMs with continuous emission densities, typically parameterized by Gaussian mixtures. The same Markov assumption remains, but we replace discrete distributions with differential equations or continuous probability density functions. This also raises new inference challenges, often tackled by variants of Kalman filters or particle filters, which are effectively continuous-state analogs of HMM-like reasoning.

4.4 Advanced research directions

Modern research has elaborated on Markov models in numerous ways. Some approaches combine neural networks with Markov frameworks. For instance, you might see recurrent neural networks that incorporate a latent Markov chain to regularize transitions. Another direction is to explore deeper architectures of Markov processes for unsupervised representation learning. As a demonstration of their sustained relevance, advanced Markov-based solutions regularly appear in top conferences (NeurIPS, ICML) addressing tasks such as time-series forecasting, robust anomaly detection, or generative sequence modeling. While many neural approaches can overshadow classical Markov models in raw predictive power, the interpretability and the explicit state-based structure of Markov models remain compelling.

5. Implementation details

Below, I show some basic code snippets in Python to emphasize how one might implement Markov chains and hidden Markov models from scratch. There are of course widely used libraries (like hmmlearn, or more advanced frameworks in PyTorch or TensorFlow Probability), but it is often instructive to see the mechanics directly.

5.1 Basic Markov chain implementation

Let's begin with a simple Markov chain that transitions among three states: A, B, and C. We will define a transition matrix and a function to sample from this chain.

import numpy as np

def generate_markov_chain(transition_matrix, states, start_state, n_steps=10):

"""

Generate a sequence of states from a discrete Markov chain.

Parameters

----------

transition_matrix : np.ndarray

Square matrix of transition probabilities, shape (num_states, num_states).

states : list

List of state labels, e.g. ['A', 'B', 'C'].

start_state : int

Index of the starting state in 'states'.

n_steps : int

Number of steps to generate.

Returns

-------

sequence : list

A list of states visited by the chain.

"""

current_state = start_state

sequence = [states[current_state]]

for _ in range(n_steps - 1):

probs = transition_matrix[current_state]

next_state = np.random.choice(len(states), p=probs)

sequence.append(states[next_state])

current_state = next_state

return sequence

if __name__ == "__main__":

# Example usage

states = ['A', 'B', 'C']

T = np.array([

[0.7, 0.2, 0.1],

[0.3, 0.4, 0.3],

[0.2, 0.5, 0.3]

])

start = 0 # start in state 'A'

chain = generate_markov_chain(T, states, start_state=start, n_steps=20)

print("Generated Markov chain:", chain)

In this straightforward code, we store the Markov chain's dynamics in the transition matrix T, and let the chain evolve for a specified number of steps. Each call to np.random.choice selects the next state based on the row of T that corresponds to the current state.

5.2 Hidden Markov model example

Now let us create a minimal hidden Markov model with two hidden states (H1 and H2) and a discrete set of possible observations (O1, O2). We'll illustrate how to do forward-backward computations to find the posterior probabilities of the hidden states for a given observation sequence.

import numpy as np

def forward_backward(obs_seq, pi, A, B):

"""

Forward-backward algorithm for a simple discrete HMM.

Parameters

----------

obs_seq : list

Observed sequence of emissions, each value is an index into the observation space.

pi : np.array

Initial state distribution, shape (num_states,).

A : np.array

Transition matrix, shape (num_states, num_states).

B : np.array

Emission matrix, shape (num_states, num_observations).

Returns

-------

gamma : np.ndarray

Posterior probabilities of hidden states at each time, shape (T, num_states).

"""

num_states = A.shape[0]

T = len(obs_seq)

# Forward pass

alpha = np.zeros((T, num_states))

alpha[0, :] = pi * B[:, obs_seq[0]]

for t in range(1, T):

for j in range(num_states):

alpha[t, j] = np.sum(alpha[t-1, :] * A[:, j]) * B[j, obs_seq[t]]

# Backward pass

beta = np.zeros((T, num_states))

beta[T-1, :] = 1.0

for t in range(T-2, -1, -1):

for i in range(num_states):

beta[t, i] = np.sum(A[i, :] * B[:, obs_seq[t+1]] * beta[t+1, :])

# Compute posterior gamma

gamma = np.zeros((T, num_states))

for t in range(T):

denom = np.sum(alpha[t, :] * beta[t, :])

for i in range(num_states):

gamma[t, i] = (alpha[t, i] * beta[t, i]) / denom

return gamma

if __name__ == "__main__":

# Example usage of HMM

# Suppose we have 2 hidden states, 2 possible observations

pi = np.array([0.6, 0.4]) # initial state distribution

A = np.array([[0.7, 0.3], # transition probs

[0.4, 0.6]])

B = np.array([[0.5, 0.5], # emission probs for state H1

[0.1, 0.9]]) # emission probs for state H2

# Observations are from {O1, O2}, encoded as {0,1}

obs_seq = [0, 0, 1, 1, 0] # O1, O1, O2, O2, O1

gamma = forward_backward(obs_seq, pi, A, B)

print("Posterior state probabilities per time step:")

print(gamma)

Here, is the initial distribution, A is the transition matrix among hidden states, and B is the emission matrix specifying how likely each observation is when in a certain hidden state. The forward_backward function returns a matrix gamma of shape (T, num_states), where each row corresponds to a time step, and each column is the posterior probability that the hidden state is i at that time.

This example is obviously quite simplified. Real-world scenarios might need more complex emission models (continuous distributions, large sets of discrete observations, or neural-based embeddings) as well as advanced training procedures (like Baum-Welch or more advanced optimization).

6. Illustrations

Below I include two image placeholders that might be conceptually helpful. One is a standard Markov chain diagram, the other is a standard Hidden Markov Model representation.

An image was requested, but the frog was found.

Alt: "A simple Markov chain diagram showing arrows from state to state"

Caption: "A Markov chain with three states A, B, C and transitions among them."

Error type: missing path

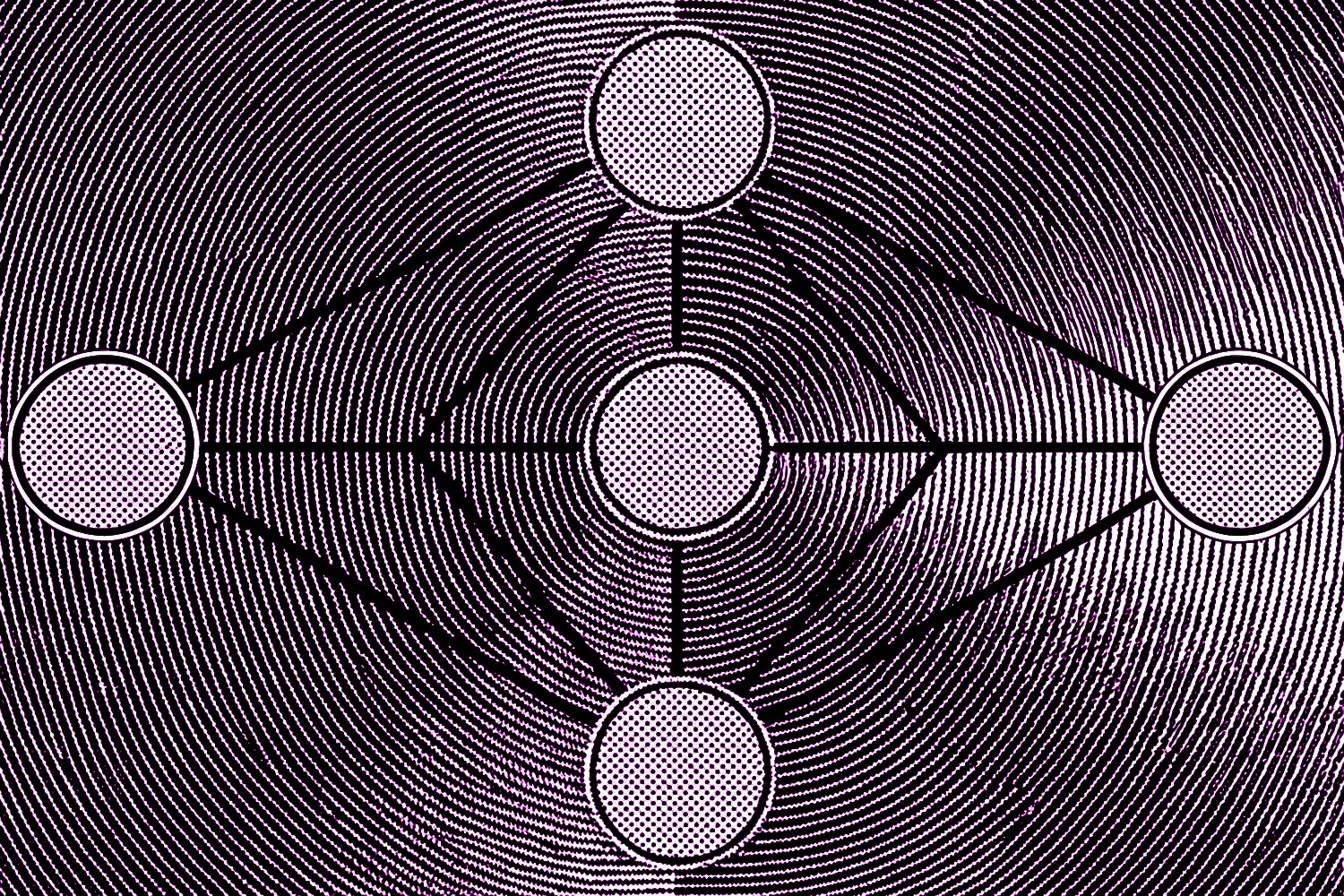

An image was requested, but the frog was found.

Alt: "A representation of a hidden Markov model with hidden states and observed emissions"

Caption: "A hidden Markov model with hidden states (Z1, Z2, etc.) and observed states (X1, X2, etc.)."

Error type: missing path

7. Concluding remarks

Markov models inhabit a foundational position in both theoretical and practical aspects of machine learning and data science. They elegantly capture processes governed by a localized form of dependence (the Markov property), paving the way for a host of simplified analyses, computationally tractable algorithms, and valuable interpretability. Markov chains themselves have found repeated success in use cases that include random walk analysis, PageRank, and time-series modeling. Meanwhile, Hidden Markov Models extend the concept into partially observed domains, excelling in tasks like speech recognition, part-of-speech tagging, and sequence alignment.

For many advanced practitioners and researchers, Markov models might feel relatively classical compared to the deep neural architectures dominating so many benchmarks. But the mathematics behind Markov processes continues to inspire new developments. POMDPs are a foundation of reinforcement learning, continuous-state Markov processes underlie many cutting-edge Bayesian filtering approaches, and HMM-like sequence constraints sometimes appear in large-scale industrial systems where interpretability and well-controlled uncertainty remain crucial. In fact, even in the realm of deep learning, you will find repeated references to Markov-based ideas, from approximate sampling algorithms to hybrid neural/HMM solutions in specialized domains. Sometimes, an HMM is simply the right practical tool, especially if your problem is small scale, has strong domain constraints, or demands an interpretable solution grounded in state-based reasoning.

I recommend that readers follow up this discussion by experimenting further with the provided code, or by investigating well-known libraries (like hmmlearn in Python) to handle more advanced tasks. If you wish to pursue a deeper theoretical understanding, classic references such as Rabiner (1989), Bishop (2006), and Murphy (2012) remain outstanding primers for the fundamental math. Beyond that, you can explore specialized topics such as hierarchical hidden Markov models, segmental hidden Markov models, or connect with ongoing research in partially observed decision processes if you are leaning toward reinforcement learning applications. In short, Markov models provide a cornerstone for modeling sequential or temporal phenomena, reminding us that the insight gleaned from a well-defined chain of states retains enduring significance in modern data science and machine learning.