🎓 102/2

This post is part of the Computer vision educational series from my free course. Please keep in mind that the correct sequence of posts is outlined on the course page, while it can be arbitrary in Research.

I'm also happy to announce that I've started working on standalone paid courses, so you can support my work and get affordable educational material. These courses will be of completely different quality, with more theoretical depth and niche focus, and will feature challenging projects, quizzes, exercises, video lectures and supplementary materials. Stay tuned!

Segmentation in the context of computer vision and image analysis is the process of partitioning an image into meaningful segments or regions, typically so that each region corresponds to a distinct object — or in some cases, a part of an object — based on shared visual characteristics such as color, texture, or semantic label. Unlike image classification, which assigns a single label to an entire image, or object detection, which seeks to place bounding boxes around discrete objects, segmentation drills down to a pixel-level assignment of labels. This pixel-level methodology allows us to visualize and comprehend the precise form, boundaries, and location of objects in an image.

Essentially, there are three major subcategories of segmentation tasks that researchers and practitioners often distinguish:

- Semantic segmentation: Here, each pixel is assigned to one of several possible classes (e.g., background, car, pedestrian, road). Two separate objects of the same class, such as two different cars, will be labeled identically. You care about whether a pixel belongs to a "car" or a "tree", but do not necessarily distinguish between distinct cars or separate trees.

- Instance segmentation: This approach not only marks each pixel with a class label, it also differentiates between distinct object instances of the same class. For example, if there are three cars, each car is segmented separately and is treated as a unique instance, even if they share the same label of "car".

- Panoptic segmentation: Panoptic segmentation combines the objectives of semantic segmentation and instance segmentation. In other words, it assigns every pixel a class label (like semantic segmentation) while also making separate masks for individual object instances when applicable (like instance segmentation). It offers a unified approach for understanding both "things" (countable objects like people, cars, or animals) and "stuff" (uncountable concepts such as sky, grass, or road).

Image object segmentation is crucial in numerous real-world applications. In medical diagnostics, segmentation is employed for delineating tumors, organs, and tissues within medical scans, which then supports clinicians in making precise measurements or planning interventions. In autonomous driving, an accurate delineation of the road, pedestrians, street signs, and other traffic participants is essential for safe path planning. In image editing and design, having pixel-level information about object boundaries allows photo editors or content creators to manipulate and transform specific regions in a fine-grained manner (e.g., background removal or selective editing). Overall, segmentation stands as one of the most high-impact tasks in modern computer vision.

It is instructive to compare segmentation with both classification and detection to fully understand where segmentation lies on the continuum of computer vision complexity:

- Classification: You feed an entire image into a model and get a label such as "cat" or "dog".

- Detection: You find bounding boxes that localize each object instance in the image, often accompanied by the appropriate class label (e.g., a bounding box around a cat plus the label "cat").

- Segmentation: You go one step further to identify the precise spatial extent (boundary) of the object at the pixel level.

Different subfields of segmentation — semantic, instance, and panoptic — reflect these distinct but overlapping perspectives on how to parse an image. Modern segmentation solutions often require extremely large, meticulously labeled datasets, advanced neural architectures, and specialized training techniques. Over the past decade, deep learning approaches have largely outperformed traditional, handcrafted segmentation methods by leveraging convolutional neural networks (CNNs), fully convolutional architectures, and more recently, attention mechanisms and transformer-based models. In the sections that follow, I will trace the historical development of segmentation methods from classical graph-based and region-based algorithms to modern deep segmentation networks, then dive into their architectures, evaluation metrics, real-world deployment considerations, and the latest research directions.

historical background and evolution

traditional segmentation methods

Segmentation in its broad sense is an old problem in computer vision. Early segmentation approaches, such as thresholding, region growing, split-and-merge methods, or edge-based segmentation, date back to the foundational years of image processing. These techniques rely on heuristics around pixel intensities and spatial consistency to cluster pixels into coherent regions. Some iconic methods include:

- Thresholding: A simple yet effective approach for certain controlled scenarios (e.g., binary segmentation of grayscale images). Otsu's well-known method picks the threshold that maximizes the between-class variance of the pixel intensity distribution, which is equivalent to minimizing the within-class variance.

- Edge-based segmentation: Operators such as the Canny edge detector or Sobel filters highlight pixels where large intensity gradients occur. By connecting these edges, one forms boundaries that demarcate potential objects.

- Region-based segmentation: Region growing or region splitting and merging. The basic premise is grouping neighboring pixels that satisfy a certain homogeneity criterion (e.g., similar color, intensity, or texture).

- Graph-based segmentation: Approaches that formalize segmentation as a graph partitioning task. Pixels are treated as graph vertices, with edges representing similarity or dissimilarity among neighboring pixels. Felzenszwalb and Huttenlocher's algorithm (2004) is a classic in this category.
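To make Otsu's criterion concrete, here is a minimal NumPy sketch for 8-bit grayscale images. It relies on the standard identity that, for a threshold t, the between-class variance equals (mu_T * omega(t) - mu(t))^2 / (omega(t) * (1 - omega(t))), where omega(t) is the fraction of pixels with value at most t, mu(t) is their cumulative mean, and mu_T is the global mean. The function name `otsu_threshold` is illustrative, and the input is assumed to be a `uint8` array.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the threshold t that maximizes between-class variance for a uint8 image.
    Pixels with value > t are treated as foreground, pixels <= t as background."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)
    omega = np.cumsum(prob)              # probability of the class "value <= t"
    mu = np.cumsum(prob * levels)        # cumulative mean up to t
    mu_total = mu[-1]                    # global mean intensity
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / denom
    sigma_b[denom == 0] = 0.0            # thresholds that leave one class empty
    return int(np.argmax(sigma_b))
```

In practice, OpenCV computes the same threshold via `cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)`; the hand-written version just exposes the histogram arithmetic.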

Another advanced approach in traditional computer vision is normalized cuts, where one attempts to partition an image into subgraphs such that similarity within each subgraph is high while similarity between subgraphs is minimal. These methods often involve building large, sometimes fully connected graphs of image pixels (or superpixels) and then solving an optimization problem that can be computationally demanding, though approximate solutions — often based on spectral methods — can scale to moderate-size images.
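The spectral relaxation behind normalized cuts (Shi and Malik) fits in a few lines: build an affinity matrix W, form the degree matrix D, and take the eigenvector with the second-smallest eigenvalue of the generalized problem (D - W) y = lambda D y; its sign splits the graph into two groups. The sketch below assumes a fully connected Gaussian affinity with bandwidth `sigma` and a zero threshold (the original paper also discusses splitting at the median or searching over split points), and it runs on a toy 1D intensity profile, since a dense affinity matrix grows quadratically with the number of pixels.

```python
import numpy as np

def normalized_cut_bipartition(features, sigma=1.0):
    """Split points into two groups using the spectral relaxation of normalized cuts."""
    x = np.asarray(features, dtype=float).reshape(len(features), -1)
    # Fully connected Gaussian affinity between every pair of points
    sq_dist = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-sq_dist / (2.0 * sigma ** 2))
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(d)
    # Symmetric normalized Laplacian D^{-1/2} (D - W) D^{-1/2}
    L_sym = np.eye(len(x)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(L_sym)   # eigenvalues in ascending order
    z = eigvecs[:, 1]                    # eigenvector of the second-smallest eigenvalue
    y = d_inv_sqrt * z                   # solution of the generalized problem: y = D^{-1/2} z
    return y > 0                         # boolean group assignment per point
```

The sign of an eigenvector is arbitrary, so only the partition matters, not which group is labeled `True`.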

transition to deep learning approaches

The advent of deep learning revolutionized the landscape of segmentation. Handcrafted features, once the mainstay of classical segmentation approaches, began to be replaced by automatically learned representations. With the success of convolutional neural networks (CNNs) — popularized by AlexNet (Krizhevsky and gang, NeurIPS 2012) for image classification — the computer vision community soon realized that CNNs could be adapted to solve segmentation tasks by generating dense, per-pixel predictions.

One pivotal breakthrough was the Fully Convolutional Network (FCN) architecture proposed by Long and gang (CVPR 2015). Instead of using fully connected layers that produce a single classification per forward pass, FCNs converted those layers into convolutions, so the network outputs a coarse spatial map of class scores for inputs of arbitrary size. Upsampling (transpose convolution, also called deconvolution) layers and skip connections from earlier layers then restore pixel-level output. This design was among the first end-to-end trainable deep networks for semantic segmentation.

Shortly thereafter, the U-Net architecture was introduced for biomedical image segmentation by Ronneberger and gang (MICCAI 2015). U-Net employed an encoder-decoder framework, with the encoder capturing increasingly abstract features and the decoder reconstructing a spatially resolved segmentation map. Crucially, skip connections were introduced to bring high-resolution features from the encoder forward to the decoder, significantly improving the fine-grained accuracy of segmentation predictions.

overview of improvements over classical methods

Deep learning-based segmentation techniques offer a leap in performance compared to classical methods for several reasons:

- Learned features rather than handcrafted: Instead of relying on fixed gradients or intensity thresholds, deep networks learn meaningful patterns directly from data.

- Robustness and generalization: CNN-based methods can handle complex scenes, lighting changes, and object appearances more robustly when trained on large, diverse datasets.

- Better synergy with large datasets: As annotated datasets grew (e.g., PASCAL VOC, MS COCO), CNNs scaled well, continuing to improve performance with more data.

- Hardware acceleration: Modern GPUs and specialized accelerators provide the computational horsepower necessary to train large, deep architectures.

influence of hardware and datasets

Large-scale labeled datasets (e.g., MS COCO, Cityscapes for autonomous driving, LIDC-IDRI for lung CT segmentation) greatly accelerated progress. Similarly, advances in GPU hardware and in deep learning frameworks with distributed training support (PyTorch, TensorFlow, etc.) made training deeper and more capable segmentation networks feasible, spurring new lines of research in real-world segmentation tasks and advanced architectures.

core concepts and foundations

review of convolutional networks for image tasks

In standard computer vision tasks like classification, a convolutional neural network typically consists of:

- Convolutional layers that learn filters capturing local spatial patterns

- Pooling layers (such as max pooling) that reduce spatial resolution

- Nonlinear activation functions (e.g., ReLU) that enable complex learned representations

- Fully connected layers at the end to produce classification logits

In segmentation, an important tweak is that the final layers usually output maps that match or closely approach the resolution of the input image. Rather than a single probability distribution over classes, we produce a per-pixel probability distribution. This shift from classification to dense prediction is the essence of going from traditional CNNs to fully convolutional structures.
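The shift from one class distribution per image to one per pixel is easy to see in array terms. In this NumPy sketch (the `logits_to_mask` name and the channel-first (C, H, W) layout are assumptions), a softmax over the class axis turns per-pixel logits into probabilities, and an argmax over the same axis yields the predicted label map.

```python
import numpy as np

def logits_to_mask(logits):
    """Convert per-pixel class logits of shape (C, H, W) into probabilities and a label map."""
    shifted = logits - logits.max(axis=0, keepdims=True)   # subtract max for numerical stability
    exp = np.exp(shifted)
    probs = exp / exp.sum(axis=0, keepdims=True)           # softmax over the class axis, per pixel
    labels = probs.argmax(axis=0)                          # (H, W) map of predicted class indices
    return probs, labels
```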

labeling and dataset preparation for segmentation

Preparing labeled data for segmentation can be far more labor-intensive than labeling data for classification or object detection. Instead of assigning a class label to an entire image or drawing bounding boxes, annotators often need to produce masks that indicate, for every pixel, which object or class it belongs to. In the case of instance segmentation, each instance in the image requires a separate mask or ID.

Common annotation workflows and tools:

- Polygon annotation: Tools like Labelbox, Supervisely, or CVAT let an annotator draw polygons around objects and fill them to form masks.

- Brush-based annotation: Some specialized software allows painting of object silhouettes in a manner reminiscent of digital painting.

- Interactive segmentation: Some tools combine classical algorithms (like GrabCut) with minimal human input, e.g., scribbles or bounding boxes, to speed up annotation.

Careful labeling is critical because segmentation tasks can be unforgiving of even minor boundary errors, especially in domains like medical imaging. Besides per-class ground truth masks, common label formats include instance ID images (each instance has a unique integer ID) and separate single-channel binary masks for each class or instance.

common evaluation metrics (iou, dice coefficient, pixel accuracy)

Measuring the performance of segmentation models requires specialized metrics that account for pixel-level matches or mismatches. The Intersection-over-Union (IoU), or Jaccard index, is perhaps the most commonly used metric for semantic and instance segmentation. For a given class (or instance) X, it is computed from two quantities:

- Intersection: number of pixels labeled as class X in both the prediction and the ground truth.

- Union: total number of pixels labeled as class X in either the prediction or the ground truth.

IoU is the ratio of the two. Writing $A$ for the set of predicted pixels and $B$ for the set of ground truth pixels of class X:

$$\text{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|}$$

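In medical applications, the Dice coefficient (equal to the pixel-wise F1 score for binary masks) is frequently used:

$$\text{Dice}(A, B) = \frac{2\,|A \cap B|}{|A| + |B|}$$

where $A$ is the set of predicted pixels for a given class or instance, and $B$ is the set of ground truth pixels. Dice is a monotonic function of IoU, $\text{Dice} = 2\,\text{IoU} / (1 + \text{IoU})$, so for a single mask both metrics rank predictions identically; Dice is simply more prevalent in the medical imaging literature.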

We also often see pixel accuracy (the proportion of correctly labeled pixels out of total pixels), though it can be misleading if there is a large class imbalance (for example, images with a big background region overshadowing smaller but critical objects).
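All three metrics can be computed directly on masks with NumPy. This is a minimal sketch: the function names are illustrative, and returning 1.0 when both masks are empty (and skipping classes absent from both prediction and ground truth in `mean_iou`) is one common convention, assumed here rather than universal. The usage shows how pixel accuracy hides a missed small object that IoU exposes.

```python
import numpy as np

def iou(pred, target):
    """Jaccard index |A ∩ B| / |A ∪ B| for two boolean masks."""
    inter = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    return inter / union if union > 0 else 1.0      # assumed convention: both empty = perfect

def dice(pred, target):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two boolean masks."""
    inter = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    return 2.0 * inter / total if total > 0 else 1.0

def pixel_accuracy(pred_labels, true_labels):
    """Fraction of pixels whose predicted class matches the ground truth."""
    return float((pred_labels == true_labels).mean())

def mean_iou(pred_labels, true_labels, num_classes):
    """Average IoU over classes present in the prediction or the ground truth."""
    scores = []
    for c in range(num_classes):
        p, t = pred_labels == c, true_labels == c
        if p.any() or t.any():
            scores.append(iou(p, t))
    return float(np.mean(scores))

# A 10x10 image with a 2-pixel object; predicting "background everywhere"
truth = np.zeros((10, 10), dtype=int)
truth[0, :2] = 1
all_background = np.zeros_like(truth)
print(pixel_accuracy(all_background, truth), mean_iou(all_background, truth, 2))  # 0.98 0.49
```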

data splitting strategies (train/validation/test)

Just as with classification or detection tasks, it is vital to hold out a validation set and a final test set to mitigate overfitting. But an additional caution is that segmentation tasks are highly sensitive to distribution shifts. For instance, if you are building a medical segmentation model, ensuring that the images in the test set come from different patients, different scanners, or different clinical centers than those in the training set can help ensure that the resulting performance metrics are realistic. Cross-validation is also a popular approach in segmentation tasks, especially in fields with limited data availability (e.g., medical imaging).
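A patient-level split can be enforced by splitting over patient IDs rather than over individual images, so that no patient contributes slices to more than one subset. The sketch below assumes each sample record exposes its group key through a caller-supplied `group_of` function; the `(patient_id, slice_index)` tuples in the usage are hypothetical. The fractions apply to the number of groups, not images. scikit-learn's `GroupShuffleSplit` and `GroupKFold` implement the same idea if you prefer a library.

```python
import random
from collections import defaultdict

def split_by_group(samples, group_of, val_frac=0.15, test_frac=0.15, seed=0):
    """Split samples so that every group (e.g., a patient) lands in exactly one subset."""
    groups = sorted({group_of(s) for s in samples})
    rng = random.Random(seed)
    rng.shuffle(groups)
    n_test = max(1, round(test_frac * len(groups)))
    n_val = max(1, round(val_frac * len(groups)))
    test_groups = set(groups[:n_test])
    val_groups = set(groups[n_test:n_test + n_val])
    splits = defaultdict(list)
    for s in samples:
        g = group_of(s)
        key = "test" if g in test_groups else "val" if g in val_groups else "train"
        splits[key].append(s)
    return splits["train"], splits["val"], splits["test"]

# Hypothetical records: 20 patients with 5 slices each
records = [(f"patient_{p:02d}", s) for p in range(20) for s in range(5)]
train, val, test = split_by_group(records, group_of=lambda r: r[0])
```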

deep learning-based segmentation approaches

encoder-decoder style models (review of autoencoder principles)

A broad class of segmentation solutions use encoder-decoder structures. In essence, an encoder reduces the spatial resolution while (ideally) capturing meaningful abstract features, and the decoder uses these features to produce dense pixel-level predictions at high resolution.

Autoencoders in general attempt to compress an input to a latent representation (encoder) and then reconstruct that input from the latent vector (decoder). Segmentation networks follow the same compress-then-expand pattern, except that the target is not the raw input image but a segmentation mask: the network learns to map an image to its mask rather than back to itself.

basic cnn feature extraction recap

Although readers of this course have likely encountered standard CNN modules through object detection or classification, it is useful to keep in mind:

- Typical backbones: VGG, ResNet, DenseNet, MobileNet, etc. provide powerful feature extractors.

- Downsampling: Repeated convolution and pooling reduce the spatial size of feature maps.

- Decoding or upsampling: To get back to the original resolution, we need methods like bilinear upsampling, transpose convolution, or other variants (e.g., sub-pixel convolution).

In object detection, the final layers predict bounding box coordinates and classification scores. In segmentation, the final layers produce segmentation logits at each pixel location.
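Keeping track of feature-map sizes through downsampling and upsampling is a frequent source of shape mismatches. The helpers below implement the per-axis output-size formulas documented for PyTorch's `Conv2d` (which also apply to pooling) and `ConvTranspose2d`; the function names and the five-stage example are illustrative assumptions.

```python
def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output size along one axis for a convolution or pooling layer."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

def transposed_conv_out(size, kernel, stride=1, padding=0, output_padding=0, dilation=1):
    """Output size along one axis for a transposed convolution."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1

size = 512
for _ in range(5):        # five stride-2 downsampling stages (overall output stride 32)
    size = conv_out(size, kernel=2, stride=2)
bottleneck = size
for _ in range(5):        # five stride-2 transposed convolutions restore the input size
    size = transposed_conv_out(size, kernel=2, stride=2)
print(bottleneck, size)   # 16 512
```

A 3x3 convolution with padding 1 (or with dilation 2 and padding 2) leaves the size unchanged, which is why such layers can be stacked freely between resolution changes.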

handling overfitting, data augmentation, and regularization

Segmentation models, with their large parameter counts and pixel-level predictions, are prone to overfitting — particularly if the dataset is small. Key strategies include:

- Data augmentation: Random crops, flips, rotations, color jitter, perspective warping.

- Regularization: Techniques like dropout, weight decay, and batch normalization can help.

- Patch-based training: In some settings, especially with large medical images or satellite imagery, random patches can be extracted during training to reduce memory usage and increase sample diversity.
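One segmentation-specific detail: every geometric augmentation must be applied identically to the image and its mask, while photometric ones (such as color jitter) touch only the image. This NumPy sketch (the function name and the random horizontal flip plus random crop combination are assumptions) draws the random parameters once and applies them to both arrays; the crop doubles as patch extraction. If you rotate or rescale masks, use nearest-neighbour interpolation so that class IDs are never averaged into invalid values.

```python
import numpy as np

def random_flip_and_crop(image, mask, crop_size, rng):
    """Apply the same random horizontal flip and crop to an (H, W, C) image and its (H, W) mask."""
    if rng.random() < 0.5:                 # flip both arrays along the width axis
        image = image[:, ::-1]
        mask = mask[:, ::-1]
    h, w = mask.shape
    ch, cw = crop_size
    top = rng.integers(0, h - ch + 1)      # one random offset shared by image and mask
    left = rng.integers(0, w - cw + 1)
    image = image[top:top + ch, left:left + cw]
    mask = mask[top:top + ch, left:left + cw]
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)
```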

transfer learning for segmentation

Many practitioners fine-tune a segmentation model from a classification checkpoint (e.g., a pre-trained ResNet) or from a detection checkpoint (e.g., a model pre-trained on MS COCO). This approach often yields faster convergence and higher accuracy, reflecting the fact that early convolutional layers learn generic features such as edges, corners, or textures that are beneficial across tasks.

segmentation architectures

u-net

Originally designed for biomedical image segmentation, U-Net remains a highly popular architecture for a variety of domains:

- Symmetric encoder-decoder: The left half of the U is the encoder, which typically uses standard convolutional blocks plus pooling to downsample. The right half is the decoder, which uses transpose convolutions or upsampling to restore spatial resolution.

- Skip connections: Each encoder block at a given resolution is connected to the corresponding decoder block at the same resolution. This helps the model recover spatial details that might otherwise be lost during downsampling.

U-Net has proven exceptionally successful in contexts where training data is limited, thanks to these skip connections and the ability to capture both local and global context. Its success has led to numerous variants: U-Net++ (Zhou and gang, 2018), Attention U-Net, V-Net (for volumetric data), etc.

Figure: Basic structure of a U-Net with skip connections from encoder to decoder stages

fpn (feature pyramid network)

Feature Pyramid Networks (Lin and gang, CVPR 2017) propose a method to fuse multi-scale features from different levels of a backbone CNN. FPN is not exclusively for segmentation; it is also used extensively in object detection (e.g., in Mask R-CNN). The central idea is that features from shallow layers retain high spatial resolution but carry less semantic information, while deeper layers capture more abstract, semantic information at lower resolution. By creating a top-down pathway with lateral connections, FPN fuses these multi-level feature maps into a pyramid whose levels are both semantically strong and high-resolution. For segmentation tasks, FPN can help localize small objects better while also capturing large objects and global context.
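The top-down pathway can be sketched with plain arrays. In this simplified NumPy version, a lateral 1x1 convolution is written as a per-pixel matrix multiply over channels, the coarser map is upsampled by nearest-neighbour repetition, and the two are added. The random weights, the three-level pyramid, and the function names are illustrative assumptions; the 3x3 smoothing convolutions that FPN applies after each merge are omitted.

```python
import numpy as np

def upsample_nearest_2x(x):
    """(C, H, W) -> (C, 2H, 2W) by repeating each pixel."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def lateral_1x1(x, weight):
    """1x1 convolution as a per-pixel linear map: (C_in, H, W) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", weight, x)

def fpn_top_down(features, lateral_weights):
    """features are ordered fine -> coarse, each level half the resolution of the previous.
    Returns merged maps in the same order, all with the same channel count."""
    laterals = [lateral_1x1(f, w) for f, w in zip(features, lateral_weights)]
    merged = [laterals[-1]]                                # start from the coarsest level
    for lat in reversed(laterals[:-1]):
        merged.append(lat + upsample_nearest_2x(merged[-1]))
    return merged[::-1]

rng = np.random.default_rng(0)
feats = [rng.normal(size=(64, 32, 32)), rng.normal(size=(128, 16, 16)), rng.normal(size=(256, 8, 8))]
weights = [rng.normal(size=(16, c)) for c in (64, 128, 256)]
pyramid = fpn_top_down(feats, weights)
```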

deeplabv3

DeepLab is a family of architectures (DeepLabv1, v2, v3, and v3+) that introduced and refined the use of atrous (dilated) convolutions to capture multi-scale context without losing resolution through pooling. The Atrous Spatial Pyramid Pooling (ASPP) module, introduced in DeepLabv2 and refined in DeepLabv3, applies several atrous convolutions with different dilation rates in parallel to the same feature map and fuses their outputs (DeepLabv3 concatenates the branches together with an image-level pooling branch), which helps handle objects of varying sizes. Dilation enlarges the receptive field of a convolution without adding any parameters, since the kernel keeps the same number of weights. DeepLab models have long been among the strongest performers on semantic segmentation benchmarks such as PASCAL VOC and Cityscapes.

Figure: Atrous convolutions with different rates (dilations) aggregated together to form a spatial pyramid

In practice, these atrous convolutions can be arranged in parallel or in cascade, with the objective of allowing the network to see context from both near and far pixels. This multi-scale awareness is critical for images containing objects of significantly varying sizes — like a tiny person in the distance or a giant building occupying most of the image.
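A 1D version shows what dilation does: the kernel keeps k weights but samples every d-th input, so one layer spans d * (k - 1) + 1 positions, and stacking stride-1 layers adds d * (k - 1) per layer. In DeepLabv3, for example, the parallel ASPP branches use rates 6, 12, and 18 at output stride 16, so a single 3x3 branch with rate 6 already spans 13 feature-map pixels per axis. The function names below are illustrative, and the code uses cross-correlation, as deep learning frameworks do.

```python
import numpy as np

def dilated_conv1d(signal, kernel, dilation=1):
    """'Valid' 1D atrous convolution (cross-correlation, as in deep learning frameworks)."""
    k = len(kernel)
    span = dilation * (k - 1) + 1               # receptive field of this single layer
    out = np.empty(len(signal) - span + 1)
    for i in range(len(out)):
        taps = signal[i:i + span:dilation]      # k inputs spaced `dilation` apart
        out[i] = np.dot(taps, kernel)
    return out

def stacked_receptive_field(kernel_size, dilations):
    """Receptive field of stride-1 atrous layers applied in cascade."""
    rf = 1
    for d in dilations:
        rf += d * (kernel_size - 1)
    return rf
```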

other noteworthy architectures (e.g., mask r-cnn, pspnet)

- Mask R-CNN: This is an extension of Faster R-CNN for instance segmentation. Alongside the existing branches for classification and bounding box regression, it adds a small fully convolutional head that predicts a binary mask for each detected region from RoIAlign-pooled features. This approach naturally integrates detection and segmentation and has become a standard for instance-level tasks.

- PSPNet (Pyramid Scene Parsing Network): This network includes a pyramid pooling module that average-pools the feature map over grids of several sizes to gather context from different subregions, enabling global context modeling. The pooled features are upsampled and concatenated with the original feature map to yield strong semantic segmentation outputs.
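PSPNet's pyramid pooling module uses bins of 1x1, 2x2, 3x3, and 6x6. The NumPy sketch below reproduces the pool, upsample, and concatenate structure under simplifying assumptions: the per-branch 1x1 convolutions that reduce channels are omitted, upsampling is nearest-neighbour instead of bilinear, and H and W must be divisible by every bin size.

```python
import numpy as np

def adaptive_avg_pool(x, bins):
    """Average-pool a (C, H, W) map onto a bins x bins grid (H and W divisible by bins)."""
    c, h, w = x.shape
    return x.reshape(c, bins, h // bins, bins, w // bins).mean(axis=(2, 4))

def pyramid_pooling(x, bin_sizes=(1, 2, 3, 6)):
    """Concatenate x with upsampled pooled context at several grid sizes."""
    h, w = x.shape[1:]
    branches = [x]
    for b in bin_sizes:
        pooled = adaptive_avg_pool(x, b)
        up = pooled.repeat(h // b, axis=1).repeat(w // b, axis=2)   # back to (H, W)
        branches.append(up)
    return np.concatenate(branches, axis=0)     # (C * (1 + len(bin_sizes)), H, W)
```

The 1x1 branch is simply the global average of each channel broadcast over the map, which is where the "global context" comes from.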

transformer-based segmentation models

Recently, Vision Transformers (ViT) have gained popularity for classification tasks. Researchers have adapted them for segmentation in multiple ways:

- SETR: The authors replaced the CNN backbone with a pure transformer for semantic segmentation. A convolution-free encoder is followed by a decoder that aggregates tokens back into a dense mask.

- Segmenter (Strudel and gang, ICCV 2021): Another approach harnessing transformers' self-attention for capturing long-range dependencies, potentially leading to better boundary delineation and more globally consistent segmentation.

Although these transformer-based models are still evolving and can be quite large, they are seen as a new frontier for potentially improved segmentation accuracy and a unified approach across different vision tasks.

implementation and practical considerations

data preprocessing with opencv

Preprocessing for segmentation typically includes:

- Resizing images to a consistent shape (if large variability in input dimensions exists).

- Normalization of pixel intensities or color channels. For instance, subtracting the mean and dividing by the standard deviation, or rescaling pixel values to [0,1].

- Color space transformations (e.g., converting from BGR to RGB) as required by certain frameworks or networks.

Using OpenCV, a typical preprocessing snippet in Python might look like this:

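```python
import cv2
import numpy as np

# ImageNet channel statistics, commonly used with ImageNet-pretrained backbones
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def preprocess(image, new_size=(512, 512)):
    """Turn an OpenCV BGR uint8 image (H, W, 3) into a normalized float32 array (3, H', W')."""
    # Resize; OpenCV expects the target size as (width, height).
    # For label masks, resize with cv2.INTER_NEAREST instead so class IDs are not blended.
    image_resized = cv2.resize(image, new_size, interpolation=cv2.INTER_LINEAR)

    # OpenCV loads images in BGR order; most pretrained models expect RGB
    image_rgb = cv2.cvtColor(image_resized, cv2.COLOR_BGR2RGB)

    # Scale to [0, 1], then normalize each channel by mean and std
    image_scaled = image_rgb.astype(np.float32) / 255.0
    image_normalized = (image_scaled - MEAN) / STD

    # Reorder dimensions from (H, W, C) to channel-first (C, H, W)
    return np.transpose(image_normalized, (2, 0, 1))
```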

frameworks and libraries (tensorflow, pytorch, keras)

Both TensorFlow (with Keras) and PyTorch are popular for building segmentation pipelines:

- PyTorch typically offers more "pythonic" dynamic graph building and a large ecosystem of open-source repositories (e.g., segmentation_models.pytorch).

- TensorFlow/Keras provides a higher-level API in many cases, with wide industrial adoption and integrated deployment solutions (TensorFlow Serving, TensorFlow Lite).

They both offer specialized layers for transpose convolution or specialized upsampling methods. High-level libraries (like MONAI for medical imaging or Albumentations for image augmentation) provide convenience functions for segmentation tasks.

training pipelines and hyperparameter tuning

Training a segmentation model typically follows the standard deep learning pipeline with specialized considerations:

- Batch size: Training in segmentation can be memory-intensive, because the input images or their feature maps can be large. Reducing batch size or cropping patches can help.

- Learning rate schedules: Common to use an initial learning rate with step decay or cosine annealing.

- Optimization: Standard optimizers like Adam or SGD with momentum are prevalent, but advanced optimizers (RAdam, LAMB) can also appear in large-scale training scenarios.

- Loss functions: Typically cross-entropy or variants (e.g., focal loss for class imbalance, Dice loss in medical imaging).

In practice, we might combine multiple losses — for instance, a weighted sum of cross-entropy and Dice loss — to better handle both large and small objects and mitigate class imbalance.
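The combined objective is straightforward to write down. This NumPy sketch computes only the forward value of a per-pixel cross-entropy plus a soft Dice loss (Dice computed on probabilities instead of hard masks so it stays differentiable); in PyTorch or TensorFlow the same expressions would be written with tensor ops and differentiated by autograd. The equal weights and the function names are assumptions, not recommended settings.

```python
import numpy as np

def softmax(logits):
    e = np.exp(logits - logits.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def cross_entropy(logits, target):
    """Mean per-pixel cross-entropy; logits (C, H, W), target (H, W) integer labels."""
    probs = softmax(logits)
    p_true = np.take_along_axis(probs, target[None], axis=0)[0]   # probability of the true class
    return float(-np.log(p_true + 1e-12).mean())

def soft_dice_loss(logits, target, eps=1e-6):
    """1 - mean soft Dice over classes."""
    num_classes = logits.shape[0]
    probs = softmax(logits)
    one_hot = np.eye(num_classes)[target].transpose(2, 0, 1)      # (C, H, W)
    inter = (probs * one_hot).sum(axis=(1, 2))
    denom = probs.sum(axis=(1, 2)) + one_hot.sum(axis=(1, 2))
    dice = (2.0 * inter + eps) / (denom + eps)
    return float(1.0 - dice.mean())

def combined_loss(logits, target, w_ce=1.0, w_dice=1.0):
    """Weighted sum of cross-entropy and soft Dice loss."""
    return w_ce * cross_entropy(logits, target) + w_dice * soft_dice_loss(logits, target)
```

Cross-entropy gives stable per-pixel gradients, while the Dice term is normalized per class, so a tiny structure contributes as much to it as a large one.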

managing computational resources and gpu usage

Segmentation tasks are computationally heavy, especially for high-resolution images. Approaches include:

- Mixed-precision training: Using half-precision floats (FP16) for speed and memory gains.

- Gradient accumulation: Accumulating gradients over several small micro-batches before each optimizer step, which effectively simulates a larger batch size on limited GPU memory.

- Distributed training: If multiple GPUs or multi-node clusters are available, frameworks such as PyTorch DistributedDataParallel or Horovod can parallelize training and handle large datasets more efficiently.

model deployment and optimization

Once a segmentation model is trained, real-world usage might require:

- Export to a lightweight format: ONNX or TensorFlow Lite for edge devices.

- Model quantization: 8-bit integer quantization can drastically reduce model size and improve inference speed (especially on specialized hardware) at a modest cost in accuracy.

- Pruning and channel reduction: Techniques that remove redundant parameters can allow real-time or near-real-time inference on embedded devices.

Such deployment optimizations are especially important in scenarios like robotics or mobile/AR applications, where latency and memory overhead must be carefully managed.
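To see where the size savings of 8-bit quantization come from, here is a minimal NumPy sketch of affine (asymmetric) per-tensor quantization: each float is mapped to an int8 through a scale and a zero point, and dequantization maps it back with an error of about half a quantization step. Real toolchains such as TensorFlow Lite or ONNX Runtime use calibrated and often per-channel schemes; the function names here are illustrative.

```python
import numpy as np

def quantize_int8(x):
    """Affine per-tensor quantization of a float array to int8."""
    x_min = float(min(x.min(), 0.0))          # the range must contain 0 so it is exactly representable
    x_max = float(max(x.max(), 0.0))
    scale = (x_max - x_min) / 255.0 if x_max > x_min else 1.0
    zero_point = int(round(-128 - x_min / scale))
    q = np.clip(np.round(x / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return (q.astype(np.float32) - zero_point) * scale

rng = np.random.default_rng(0)
weights = rng.normal(size=1000).astype(np.float32)
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
print(weights.nbytes, q.nbytes)   # 4000 1000
```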

advanced techniques and variations

attention mechanisms in segmentation

Attention modules can help a segmentation model focus on the most relevant parts of the feature maps:

- Spatial attention: Learns an attention map that highlights relevant spatial locations.

- Channel attention: Learns how to reweight feature channels based on their importance.

Examples include Squeeze-and-Excitation (Hu and gang) or more advanced self-attention blocks reminiscent of transformers, but integrated into CNN-based segmentation architectures (e.g., DANet).
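Channel attention in the Squeeze-and-Excitation style reduces to a few array operations: global average pooling squeezes each channel to one number, two small fully connected layers (ReLU, then sigmoid) turn those numbers into per-channel gates in (0, 1), and the gates rescale the feature map. In this NumPy sketch the weights are random placeholders, biases are omitted, and the reduction ratio r sets the hidden width C // r.

```python
import numpy as np

def squeeze_excitation(x, w1, w2):
    """SE-style channel attention on a (C, H, W) map.
    w1: (C // r, C) and w2: (C, C // r) are the two fully connected layers (no biases)."""
    squeezed = x.mean(axis=(1, 2))                 # squeeze: global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)        # excitation: FC + ReLU -> (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # FC + sigmoid -> per-channel weights
    return x * gates[:, None, None], gates         # rescale every channel by its gate
```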

semi-supervised and unsupervised segmentation approaches

A major bottleneck in segmentation is the cost of obtaining per-pixel annotations. Recent research is exploring:

- Semi-supervised segmentation: Combining a small fully labeled dataset with a larger unlabeled dataset, e.g., via consistency regularization.

- Weakly supervised segmentation: Using bounding boxes, image-level tags, or scribbles to guide segmentation without full masks.

- Unsupervised segmentation: Approaches like clustering in the feature space can segment images without labels, although the accuracy for complex tasks might be limited.

real-time segmentation methods for edge devices

Real-time segmentation has gained momentum thanks to applications in robotics, AR/VR, and mobile:

- Lightweight backbones: E.g., MobileNet, ShuffleNet, or EfficientNet-based encoders.

- Fast decoders: Minimizing skip connections, using simpler upsampling blocks.

- Knowledge distillation: Transferring knowledge from a large teacher model to a smaller student for fast inference.

Popular real-time networks include ENet, ESPNet, and Fast-SCNN.

domain adaptation for specialized tasks

When deploying segmentation solutions across different domains (e.g., from synthetic data to real-world data, or from one medical imaging modality to another), domain gaps arise due to different lighting conditions, noise characteristics, etc. Domain adaptation techniques aim to align feature distributions across source and target domains. Some approaches incorporate adversarial training to make the source and target distributions indistinguishable at some latent feature level.

multi-task learning and joint training

Sometimes, segmentation is only part of the puzzle. One might also need depth estimation, surface normals, or optical flow. Training multiple tasks simultaneously with shared encoders can lead to performance improvements for all tasks due to shared feature representations. This synergy is especially helpful when the tasks are complementary (e.g., depth estimation can help the model learn shape cues that improve segmentation).

real-world applications

medical imaging (organ/tumor segmentation)

In medical imaging, segmentation of anatomical structures (brain tumors, lung nodules, cardiac chambers) is a cornerstone for diagnosis, surgical planning, and treatment monitoring. For instance, a hospital might want an automated pipeline that takes MRI scans of the brain and precisely delineates tumor boundaries. Deep segmentation approaches like U-Net, V-Net (for 3D volumes), and specialized transformer-based medical segmentation models have been widely adopted. Researchers continue to address challenges such as scarce labeled data, class imbalance (tumors might occupy a tiny fraction of the volume), and domain shifts across different imaging devices.

autonomous vehicles (lane and pedestrian segmentation)

For self-driving cars, pixel-level understanding of the scene is critical. Autonomous vehicles rely on segmentation networks to identify drivable regions, lane markings, pedestrians, cyclists, traffic signs, and more. This helps the downstream planning modules determine safe navigation paths. Datasets like Cityscapes or Mapillary Vistas specifically focus on street-level imagery with finely annotated masks, which has helped push the field forward.

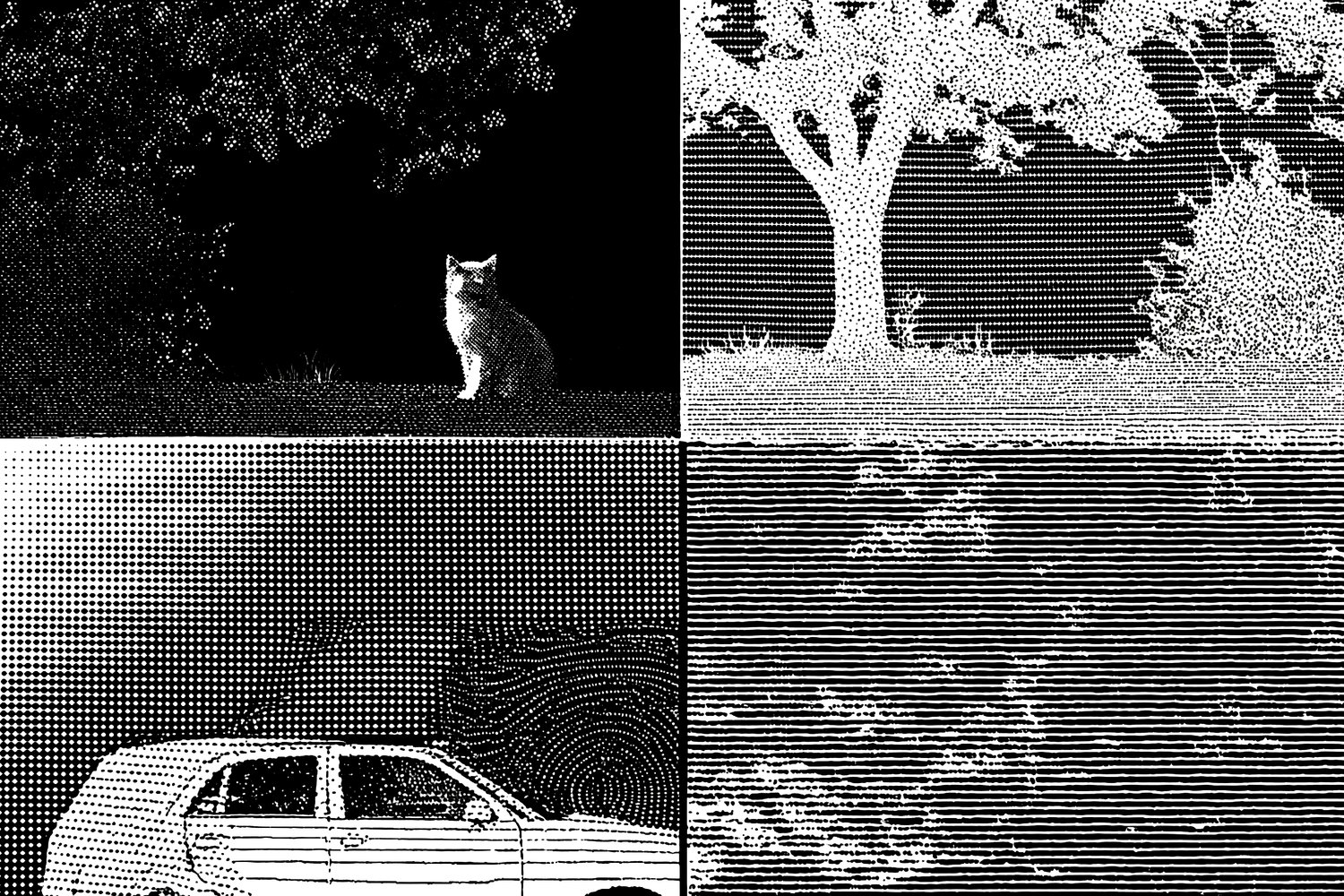

Figure: Segmentation for lanes, vehicles, pedestrians, and other scene components

satellite imagery and agriculture

Segmenting large-scale satellite or aerial images helps with:

- Land cover classification (e.g., farmland vs. forest vs. urban)

- Crop monitoring (e.g., analyzing vegetation health)

- Environmental conservation (e.g., tracking deforestation or water resources)

Challenges in satellite segmentation include extremely high-resolution imagery and the need to handle huge numbers of pixels. Methods that leverage multi-spectral data and domain adaptation for different sensors (e.g., visible vs. infrared) are commonly used.

interactive tools (photo editors, ar applications)

Many photo editing programs let users quickly select the foreground object or remove backgrounds. Behind the scenes, sophisticated segmentation algorithms are often at play. In augmented reality (AR), real-time segmentation can overlay or place virtual objects behind or in front of scene elements with correct occlusion relationships.

industrial inspection and robotics

Robot arms on manufacturing floors rely on segmentation to locate and manipulate parts accurately. In industrial inspection, segmentation might help localize defects on surfaces or detect anomalies in product assemblies. Given the potential variety of objects and the need for robust performance, fine-tuned segmentation models with domain knowledge are often deployed in such scenarios.

challenges and future directions

class imbalance and small object segmentation

Real-world data often has skewed distributions (e.g., large background areas, small foreground objects). To address this, researchers use specialized losses like focal loss, or data-level methods like oversampling minority classes. Online Hard Example Mining (OHEM) can also help by computing the loss only on the hardest pixels (those with the highest loss) in each batch. Small object segmentation remains challenging, since such objects can easily vanish from the feature maps of CNNs that downsample the image too aggressively.
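Both ideas operate on the per-pixel loss. The focal loss of Lin and gang (ICCV 2017) multiplies cross-entropy by (1 - p_t)^gamma, where p_t is the predicted probability of the true class, so confidently correct pixels contribute little; OHEM instead averages the loss over only the hardest fraction of pixels. This NumPy sketch omits the optional class-balancing factor alpha of the focal loss, and the `keep_frac` parameter and function names are assumptions.

```python
import numpy as np

def per_pixel_ce(probs, target):
    """Per-pixel cross-entropy from class probabilities (C, H, W) and labels (H, W)."""
    p_true = np.take_along_axis(probs, target[None], axis=0)[0]
    return -np.log(np.clip(p_true, 1e-12, 1.0)), p_true

def focal_loss(probs, target, gamma=2.0):
    """Mean of -(1 - p_t)^gamma * log(p_t); gamma = 0 recovers plain cross-entropy."""
    ce, p_true = per_pixel_ce(probs, target)
    return float(((1.0 - p_true) ** gamma * ce).mean())

def ohem_loss(probs, target, keep_frac=0.25):
    """Average cross-entropy over only the hardest keep_frac of pixels."""
    ce, _ = per_pixel_ce(probs, target)
    hardest_first = np.sort(ce.ravel())[::-1]
    k = max(1, int(keep_frac * hardest_first.size))
    return float(hardest_first[:k].mean())
```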

ethical considerations (data privacy, biased datasets)

Large segmentation datasets frequently include sensitive imagery, such as medical scans. Preserving patient privacy and complying with HIPAA or GDPR can become major concerns, requiring careful anonymization. Biased datasets can propagate or amplify unfair outcomes in real-world applications, such as mis-segmenting objects for certain demographics or incorrectly segmenting certain geographies in satellite data. Addressing these biases is a crucial step toward equitable AI systems.

large-scale datasets and computational constraints

With the rise of city-scale or planet-scale analysis (e.g., satellite imagery with billions of pixels), computational constraints become significant. Training a single epoch on such data might take days or weeks with naive approaches. Therefore, distributed training strategies and advanced data loading pipelines become essential, as does research into more efficient segmentation architectures.

emerging research areas (transformers for segmentation, 3d segmentation)

Transformers are increasingly used to capture global relationships between pixels. SegFormer, for instance, applies a transformer encoder directly to semantic segmentation. Mask2Former and other DETR (DEtection TRansformer)-style architectures use query-based decoders that handle semantic, instance, and panoptic segmentation within a single framework, blurring the line between detection and segmentation. Meanwhile, 3D or volumetric segmentation is gaining ground in medical imaging (e.g., MRI or CT scans), as is 3D point cloud segmentation for self-driving cars and robotics. These tasks demand specialized architectures that incorporate 3D convolutions or point-based operators.
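To illustrate what "global" means here, the sketch below splits an image into patches and applies one head of scaled dot-product self-attention. Every patch token is mixed with every other token in a single layer, unlike a convolution's local window. This is a toy under stated assumptions: random projection matrices stand in for learned weights, and there are no positional encodings, multiple heads, or MLP blocks. It is not the design of Mask2Former, SegFormer, or any other specific model.

```python
import numpy as np

def patchify(image, patch=4):
    """Split an (H, W, C) image into non-overlapping patches, flattened to (N, patch*patch*C)."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by the patch size"
    x = image.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (patch_rows, patch_cols, patch, patch, C)
    return x.reshape(-1, patch * patch * c)

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention: every token attends to every token."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])         # (N, N) pairwise patch affinities
    scores -= scores.max(axis=-1, keepdims=True)    # stabilize the softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row is a distribution over all patches
    return weights @ v, weights

rng = np.random.default_rng(0)
image = rng.random((16, 16, 3))
tokens = patchify(image, patch=4)                    # 16 patch tokens of dimension 48
# Random projections stand in for learned weights (assumption for illustration)
wq, wk, wv = (rng.normal(scale=0.1, size=(tokens.shape[1], 32)) for _ in range(3))
out, attn = self_attention(tokens, wq, wk, wv)
```

The (N, N) attention matrix is what makes the receptive field global. It is also why the cost grows quadratically with the number of patches, which efficient segmentation transformers try to reduce.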

model interpretability and explainability

In high-stakes domains (e.g., healthcare), explaining why a segmentation model decided that certain pixels are part of a tumor boundary is vital. Techniques like Grad-CAM or integrated gradients for segmentation can help visualize which regions of the input contributed most to the network's prediction, thereby supporting transparency and trust in the model's outputs.
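Integrated gradients attributes a prediction to input pixels. Each attribution is $(x_i - x'_i)$ times the average gradient along a straight path from a baseline $x'$ to the input $x$. A real model would supply gradients through autograd. In this minimal sketch, a hypothetical logistic "pixel score" with an analytic gradient stands in, so the code runs with NumPy alone. The all-black baseline and the number of integration steps are assumptions. The final check uses the completeness property: attributions should sum to $F(x) - F(x')$.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def toy_pixel_score(x, w, b):
    """Hypothetical stand-in for a segmentation net: probability that one target pixel is foreground."""
    return sigmoid(np.sum(w * x) + b)

def toy_pixel_grad(x, w, b):
    """Analytic gradient of toy_pixel_score with respect to the input patch x."""
    s = toy_pixel_score(x, w, b)
    return s * (1.0 - s) * w

def integrated_gradients(x, baseline, grad_fn, steps=64):
    """Midpoint Riemann approximation of IG along the straight path from baseline to x."""
    alphas = (np.arange(steps) + 0.5) / steps
    total = np.zeros_like(x, dtype=float)
    for a in alphas:
        total += grad_fn(baseline + a * (x - baseline))
    return (x - baseline) * total / steps

rng = np.random.default_rng(0)
x = rng.random((8, 8))            # input patch around the pixel of interest
baseline = np.zeros_like(x)       # all-black reference image
w = rng.normal(scale=0.2, size=(8, 8))
b = -0.5
attributions = integrated_gradients(x, baseline, lambda z: toy_pixel_grad(z, w, b))
# Completeness: attributions should sum to F(x) - F(baseline), up to discretization error
gap = attributions.sum() - (toy_pixel_score(x, w, b) - toy_pixel_score(baseline, w, b))
```

For a real segmentation network, the scalar being explained is often a class score summed over the pixels of interest, such as a predicted tumor region. The `attributions` map can then be displayed as a heatmap over the input.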

extra chapter: classical graph-based methods revisited

Although modern deep learning approaches dominate in accuracy and flexibility, classical graph-based segmentation and normalized cuts methods remain influential. They demonstrate fundamental principles of grouping pixels according to similarity and partitioning images into coherent regions.

graph-based segmentation

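We represent each pixel as a node in a graph. An edge connects nodes if they are neighbors in the image grid (often including diagonal adjacency). The edge weight captures the dissimilarity (or conversely, similarity) of those pixels, e.g., their difference in color intensity. A widely cited method introduced by Felzenszwalb and Huttenlocher (2004) merges regions by processing edges in order of increasing weight (a Kruskal-style, MST-based approach). Let $w(v_i, v_j)$ be the difference measure for the edge $(v_i, v_j)$. The internal difference of a region $C$ is the largest edge weight in its minimum spanning tree:

$$\mathrm{Int}(C) = \max_{e \in \mathrm{MST}(C, E)} w(e)$$

The difference between two adjacent regions $C_1$ and $C_2$ is the smallest weight among the edges connecting them:

$$\mathrm{Dif}(C_1, C_2) = \min_{v_i \in C_1,\ v_j \in C_2,\ (v_i, v_j) \in E} w(v_i, v_j)$$

The algorithm merges two regions if $\mathrm{Dif}(C_1, C_2) \le \mathrm{MInt}(C_1, C_2)$, where

$$\mathrm{MInt}(C_1, C_2) = \min\big(\mathrm{Int}(C_1) + \tau(C_1),\ \mathrm{Int}(C_2) + \tau(C_2)\big)$$

A common heuristic sets $\tau(C) = k / |C|$ for a constant $k$. As a result, small regions need stronger evidence of a boundary to stay separate, and a larger $k$ favors larger regions. Applying this test to each edge in sorted order yields the final segmentation, whose granularity is controlled by $k$. Although overshadowed by deep learning solutions for complex tasks, this method is computationally efficient ($O(m \log m)$ for $m$ edges, dominated by the sort). It can also provide good initial over-segmentations (e.g., superpixels) for subsequent neural network processing.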
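Below is a minimal sketch of this merge procedure. It assumes a 2D grayscale image, 4-connected neighbors for brevity, absolute intensity differences as edge weights, and the common choice $\tau(C) = k/|C|$. It omits the Gaussian pre-smoothing and small-component cleanup found in practical implementations such as scikit-image's `skimage.segmentation.felzenszwalb`. The class and function names are hypothetical.

```python
import numpy as np

class DisjointSet:
    """Union-find tracking each component's size and internal difference Int(C)."""
    def __init__(self, n):
        self.parent = list(range(n))
        self.size = [1] * n
        self.internal = [0.0] * n  # Int(C): largest MST edge weight inside C (0 for a single pixel)

    def find(self, x):
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b, weight):
        """Merge root b into root a via an edge of the given weight."""
        if self.size[a] < self.size[b]:
            a, b = b, a
        self.parent[b] = a
        self.size[a] += self.size[b]
        # The joining edge becomes part of the merged component's MST
        self.internal[a] = max(self.internal[a], self.internal[b], weight)

def felzenszwalb_segment(image, k=1.0):
    """Graph-based segmentation of a 2D grayscale image; returns an (H, W) label map."""
    h, w = image.shape
    edges = []
    for r in range(h):
        for c in range(w):
            i, val = r * w + c, float(image[r, c])
            if c + 1 < w:  # right neighbor
                edges.append((abs(val - float(image[r, c + 1])), i, i + 1))
            if r + 1 < h:  # bottom neighbor
                edges.append((abs(val - float(image[r + 1, c])), i, i + w))
    edges.sort(key=lambda e: e[0])  # Kruskal order: increasing dissimilarity

    ds = DisjointSet(h * w)
    for weight, u, v in edges:
        a, b = ds.find(u), ds.find(v)
        if a == b:
            continue
        # Merge if this edge is no heavier than MInt(C1, C2) = min(Int(C1) + k/|C1|, Int(C2) + k/|C2|)
        m_int = min(ds.internal[a] + k / ds.size[a], ds.internal[b] + k / ds.size[b])
        if weight <= m_int:
            ds.union(a, b, weight)

    roots = np.array([ds.find(i) for i in range(h * w)])
    _, labels = np.unique(roots, return_inverse=True)  # relabel to 0..n_segments-1
    return labels.reshape(h, w)

# Toy image: two flat regions separated by a sharp vertical edge
img = np.zeros((4, 6))
img[:, 3:] = 10.0
labels = felzenszwalb_segment(img, k=1.0)
```

With `k=1.0`, the toy image splits into its two flat halves. Raising `k` to 1000 makes $k/|C|$ large enough to absorb the intensity jump, and everything merges into one region. This shows how $k$ trades off region size.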

normalized cuts

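Normalized cuts focus on partitioning a graph into strongly connected subgraphs; here the edge weight $w(u, v)$ measures similarity. The cut between subgraphs $A$ and $B$ is the total weight of the edges removed:

$$\mathrm{cut}(A, B) = \sum_{u \in A,\ v \in B} w(u, v)$$

Minimizing the plain cut tends to favor slicing off small, isolated groups of nodes, so we prefer to minimize the normalized cut:

$$\mathrm{Ncut}(A, B) = \frac{\mathrm{cut}(A, B)}{\mathrm{assoc}(A, V)} + \frac{\mathrm{cut}(A, B)}{\mathrm{assoc}(B, V)}$$

Here $\mathrm{assoc}(A, V) = \sum_{u \in A,\ t \in V} w(u, t)$ is the sum of connections between all nodes in $A$ and all nodes in the graph $V$, and likewise for $B$. Minimizing this objective exactly is NP-hard. Relaxing it with spectral graph theory yields a generalized eigenvalue problem whose solution approximates the optimal partition, but this remains computationally expensive when the graph is large. Multi-level or hierarchical approaches that coarsen the graph can reduce computational demands, leading to approximate solutions. While deep learning is more commonly used in production for semantic or instance segmentation tasks, classical normalized cuts remain an important building block in the theoretical foundations of image partitioning.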
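The sketch below follows the spectral approach of Shi and Malik's normalized cuts formulation. It solves the relaxed problem $(D - W)y = \lambda D y$, where $D$ is the diagonal degree matrix, and takes the eigenvector with the second-smallest eigenvalue. It then tries each value of that vector as a splitting point and keeps the split with the lowest Ncut. It assumes a small, dense, connected affinity matrix `W`. Here, Gaussian similarities between a few 1D values stand in for pixel features. The function names are hypothetical.

```python
import numpy as np

def ncut_value(W, in_a):
    """Ncut(A, B) = cut(A,B)/assoc(A,V) + cut(A,B)/assoc(B,V); in_a is a boolean mask for A."""
    in_b = ~in_a
    cut = W[np.ix_(in_a, in_b)].sum()
    return cut / W[in_a].sum() + cut / W[in_b].sum()

def spectral_ncut_bipartition(W):
    """Approximate two-way normalized cut via the relaxed problem (D - W) y = lambda D y."""
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(d)
    # Equivalent symmetric problem: D^{-1/2} (D - W) D^{-1/2} z = lambda z, with y = D^{-1/2} z
    laplacian_sym = d_inv_sqrt[:, None] * (np.diag(d) - W) * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(laplacian_sym)  # eigenvalues in ascending order
    y = d_inv_sqrt * eigvecs[:, 1]              # second-smallest generalized eigenvector
    # Discretize: try each value of y as a splitting point and keep the lowest Ncut
    best_mask, best_score = None, np.inf
    for t in np.unique(y)[:-1]:                 # skip the max so both sides are non-empty
        mask = y > t
        score = ncut_value(W, mask)
        if score < best_score:
            best_mask, best_score = mask, score
    return best_mask, best_score

x = np.array([0.0, 0.1, 0.2, 5.0, 5.1, 5.2])      # two well-separated 1D "pixel" clusters
W = np.exp(-(x[:, None] - x[None, :]) ** 2 / 2.0)  # Gaussian similarity, sigma = 1
np.fill_diagonal(W, 0.0)
mask, score = spectral_ncut_bipartition(W)
```

A dense `eigh` call costs $O(n^3)$ in the number of nodes. With one node per pixel, this direct approach quickly becomes impractical for real images, which is where sparse affinities or the multi-level coarsening mentioned above come in.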

final remarks

Image object segmentation has come a long way from threshold-based heuristics and region-growing algorithms to modern end-to-end trainable architectures powered by convolutional layers, attention mechanisms, and even transformers. The journey has been enabled by big data, advanced GPUs, and the synergy of academic and industrial research. Today, segmentation is ubiquitous in fields as diverse as medical imaging, autonomous driving, satellite remote sensing, robotics, and interactive image editing. And with ongoing research in semi-supervised approaches, 3D segmentation, real-time methods for edge devices, and more, the field continues to expand.

The shift toward vision transformers, multi-task learning, and self-/unsupervised methods signals that segmentation's future will involve increasingly flexible and data-efficient solutions. Additionally, domain adaptation, privacy-preserving methods, and fairness considerations will shape how segmentation models are developed and deployed responsibly. For practitioners and researchers alike, staying abreast of these next-generation ideas while also mastering classical concepts remains key to pushing the boundaries of automated image understanding.

Overall, the pixel-level accuracy of segmentation stands as a true litmus test for how thoroughly a model "understands" an image. Whether it is to delineate a malignant region in a CT scan or to localize every curb, lane marking, and pedestrian in a bustling city scene, segmentation methods have proven to be some of the most impactful, nuanced, and rapidly evolving areas in modern computer vision. Through a firm grasp of the core principles, architectures, and future directions outlined here, data scientists and machine learning engineers can effectively tackle challenging segmentation problems and continue advancing the frontier of visual perception.

References cited (selection):

- Long et al., "Fully Convolutional Networks for Semantic Segmentation", CVPR 2015

- Ronneberger et al., "U-Net: Convolutional Networks for Biomedical Image Segmentation", MICCAI 2015

- Lin et al., "Feature Pyramid Networks for Object Detection", CVPR 2017

- Chen et al., "DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs", TPAMI 2018

- Strudel et al., "Segmenter: Transformer for Semantic Segmentation", ICCV 2021

You can find many more specific references for each sub-topic in specialized literature. Explore them if you want a deeper dive into the theoretical underpinnings or to learn from the open-source code repositories that have shaped state-of-the-art segmentation research.