
This post is a part of the Probabilistic models & Bayesian methods educational series from my free course. Please keep in mind that the correct sequence of posts is outlined on the course page, while it can be arbitrary in Research.

I'm also happy to announce that I've started working on standalone paid courses, so you could support my work and get cheap educational material. These courses will be of completely different quality, with more theoretical depth and niche focus, and will feature challenging projects, quizzes, exercises, video lectures and supplementary stuff. Stay tuned!

Gaussian mixture models (GMMs) are a core tool in modern statistics and machine learning for representing complex, multimodal data distributions using a superposition of multiple Gaussian (normal) density components. In essence, a GMM posits that each data point is generated from one of several latent Gaussian distributions, each having its own mean and covariance parameters, and the probability of sampling from any particular component is given by a set of mixture weights that sum to one.

The concept of mixture modeling is not limited to Gaussians, but Gaussian mixtures have proven especially popular due to their mathematical tractability, interpretability, and strong ties to the central limit theorem. Mixtures of Gaussians also arise naturally in many real-world data scenarios where multiple underlying (approximately normal) processes combine to produce observed samples.

Although a single Gaussian distribution may fail to capture complicated shapes in data (for instance, if the distribution is clearly multimodal or strongly skewed), a mixture of Gaussians can approximate a large variety of densities. This adaptability and flexibility has led to widespread use of GMMs in clustering, density estimation, anomaly detection, computer vision, speech processing, and numerous other fields. The capacity to model multiple, potentially overlapping subpopulations within a dataset gives GMMs a strong advantage over simpler parametric approaches.

Early references to mixture models in general, and mixture-of-Gaussian approaches specifically, date back decades, but the concept soared in popularity in the statistical community in large part due to two major factors:

- The expectation–maximization (EM) algorithm became recognized as a standard procedure for maximum likelihood parameter estimation in latent-variable models. While EM algorithms have been discovered many times in specialized settings, their general exposition in the classic paper by Dempster, Laird, and Rubin (1977) brought them into the mainstream. GMMs are perhaps the most famous example of an EM application, as the unknown membership of each data point to a particular mixture component can be treated as the latent variable.

- Widespread computational resources became available, which significantly lowered the barrier to fitting computationally non-trivial models, including iterative algorithms like EM. With high-powered servers and frameworks, even large datasets can be processed efficiently, making GMM training feasible in many commercial and research settings.

1.1 The basics of mixture models

A mixture model is a probabilistic framework in which the data distribution is expressed as a finite or infinite weighted sum of component distributions:

$$
p(\mathbf{x}) = \sum_{k=1}^{K} \pi_k \, p_k(\mathbf{x} \mid \theta_k)
$$

where:

- $\pi_k$ are the mixture weights or mixing coefficients, subject to constraints: $\pi_k \ge 0$ for all $k$, and $\sum_{k=1}^{K} \pi_k = 1$.

- $p_k(\mathbf{x} \mid \theta_k)$ represents the $k$-th component distribution (for instance, a Gaussian with parameters $\theta_k = (\boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)$).

Hence, to generate a data point $\mathbf{x}$, one would first choose a mixture component (indexed by $k$) according to probabilities $\pi_k$, then draw $\mathbf{x}$ from that component's distribution. This latent process is typically unknown to us, so we do not observe which component each point came from. The Gaussian mixture model is a special case in which each component is a Gaussian distribution with its own mean and covariance matrix.
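The two-stage generative process described above can be sketched in a few lines of NumPy. This is only an illustration: the function name `sample_mixture` and the example weights, means, and covariances are made up for the demo.

```python
import numpy as np

def sample_mixture(weights, means, covs, n_samples, seed=0):
    """Draw points by first picking a component, then sampling from it."""
    rng = np.random.default_rng(seed)
    weights = np.asarray(weights, dtype=float)
    means = np.asarray(means, dtype=float)
    # Step 1: latent component index for every point, drawn with probabilities pi_k
    z = rng.choice(len(weights), size=n_samples, p=weights)
    # Step 2: draw each point from the Gaussian of its chosen component
    X = np.empty((n_samples, means.shape[1]))
    for k in range(len(weights)):
        idx = np.flatnonzero(z == k)
        if len(idx) == 0:
            continue  # no point picked this component
        X[idx] = rng.multivariate_normal(means[k], covs[k], size=len(idx))
    return X, z

# Hypothetical two-component mixture in 2D
weights = [0.3, 0.7]
means = [[0.0, 0.0], [5.0, 5.0]]
covs = [np.eye(2), 0.5 * np.eye(2)]
X, z = sample_mixture(weights, means, covs, n_samples=1000)
print(X.shape, np.bincount(z) / len(z))
```

In a real dataset only `X` is observed; the indices `z` are exactly the latent variables that EM has to reason about.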

1.2 Why use Gaussian mixture models?

Gaussian mixtures serve as a sort of universal approximator for continuous densities, especially if one allows a large number of mixture components. Even with a moderate number of components, GMMs can represent complicated shapes that would be difficult to capture with a single parametric family (like a single Gaussian).

Common applications of GMMs in machine learning include:

- Clustering: GMM-based clustering can be seen as a soft or probabilistic alternative to $k$-means, where each point is assigned membership probabilities for each cluster instead of a single discrete label. This allows for more nuanced cluster boundaries and can accommodate overlapping subpopulations.

- Anomaly or outlier detection: Fitting a mixture to normal data and then looking for points that have low density under the fitted mixture (that is, a low likelihood under every component) is a practical approach in anomaly detection tasks. Note that posterior membership probabilities alone cannot be used for this, since they always sum to one for each point.

- Data generation and density estimation: GMMs can produce synthetic samples that mirror the distribution of real data (helpful in simulation, data augmentation, and other generative tasks). They are also used as the density model in some EM-based approaches for incomplete data.

- Model-based clustering in high dimensions: When the covariance matrices of the mixture components are appropriately constrained (e.g., diagonal or spherical), GMMs may remain tractable in moderate or even high-dimensional problems.
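As a rough sketch of the anomaly detection use case, one option is to score points by their log-density under a fitted mixture and flag the lowest-scoring ones. The example below uses scikit-learn's `GaussianMixture.score_samples`; the synthetic data and the 1% threshold are arbitrary choices made for the illustration.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Synthetic "normal" data from two well-separated blobs (made up for the demo)
rng = np.random.default_rng(0)
X_train = np.vstack([
    rng.normal(0.0, 1.0, size=(300, 2)),
    rng.normal(6.0, 1.0, size=(300, 2)),
])

gmm = GaussianMixture(n_components=2, random_state=0).fit(X_train)

# score_samples returns the log-density of each point under the fitted mixture
threshold = np.percentile(gmm.score_samples(X_train), 1)  # lowest 1% of training scores

X_new = np.array([[0.2, -0.1], [6.1, 5.8], [3.0, 20.0]])
is_anomaly = gmm.score_samples(X_new) < threshold
print(is_anomaly)
```

The threshold is a tuning knob: a lower percentile flags fewer points, a higher one flags more.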

1.3 A brief history of Gaussian distributions and mixture modeling

Gauss introduced the normal distribution in the context of astronomical observations. As the normal distribution took on a central role in statistics, it naturally began to appear in mixture contexts as well. Mixture modeling can be traced to Karl Pearson (1894), who attempted to fit a mixture of two normal distributions to biological data (the ratio of forehead width to body length in a population of crabs, to be exact). Pearson's approach can be considered one of the earliest forms of mixture modeling.

The broader acceptance of mixture modeling came with the formal introduction and analysis of the EM algorithm. Arthur Dempster, Nan Laird, and Donald Rubin gave EM its general formulation in 1977 and demonstrated how to use it for maximum likelihood estimation in incomplete-data problems, including finite mixture models as a showcase. Since then, GMMs have become a textbook example for illustrating the EM procedure.

2. Mathematical foundations

To fully grasp GMMs and how to estimate their parameters, it's useful to recall fundamental ideas from probability and statistics. We'll highlight those relevant to mixture modeling in general, and to Gaussian mixture models in particular.

2.1 Refresher of related statistics concepts

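- Probability density function (PDF): A continuous random variable $\mathbf{x}$ has a PDF $p(\mathbf{x})$ if, for any region $A$,

  $$
  P(\mathbf{x} \in A) = \int_{A} p(\mathbf{x}) \, d\mathbf{x}.
  $$

  In the mixture model context, $p(\mathbf{x})$ is decomposed into a weighted sum of component PDFs.

- Multivariate Gaussian distribution: A random variable $\mathbf{x} \in \mathbb{R}^d$ is normally distributed with mean vector $\boldsymbol{\mu}$ and covariance matrix $\boldsymbol{\Sigma}$ if

  $$
  \mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}, \boldsymbol{\Sigma}) = \frac{1}{(2\pi)^{d/2} \, |\boldsymbol{\Sigma}|^{1/2}} \exp\!\left( -\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^{\top} \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \boldsymbol{\mu}) \right).
  $$

  Gaussian mixture models make use of multiple such Gaussian distributions, each with potentially distinct $\boldsymbol{\mu}_k$ and $\boldsymbol{\Sigma}_k$.

- Log-likelihood: Given i.i.d. data $\mathbf{X} = \{\mathbf{x}_1, \dots, \mathbf{x}_N\}$, the likelihood of parameters $\theta$ is

  $$
  L(\theta) = \prod_{i=1}^{N} p(\mathbf{x}_i \mid \theta),
  $$

  and the log-likelihood is $\ell(\theta) = \log L(\theta) = \sum_{i=1}^{N} \log p(\mathbf{x}_i \mid \theta)$. The mixture model log-likelihood typically involves $\log \sum_{k} \pi_k \, p_k(\mathbf{x}_i \mid \theta_k)$.

- Expectation of a log-likelihood: In latent variable models, we often look at the expectation of the complete-data log-likelihood, computed under some distribution over the latent variables (in the GMM setting, the latent variable is the index of the component from which each point was drawn).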
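To make the multivariate Gaussian density concrete, here is a minimal sketch that evaluates its logarithm with a Cholesky factorization instead of an explicit inverse and determinant. The helper name `gaussian_logpdf` is an illustrative choice, and the code assumes the covariance is positive-definite.

```python
import numpy as np

def gaussian_logpdf(X, mu, Sigma):
    """Log-density of N(mu, Sigma) at each row of X, via a Cholesky factor."""
    X = np.atleast_2d(X)
    d = X.shape[1]
    L = np.linalg.cholesky(Sigma)                # Sigma = L @ L.T; raises if not positive-definite
    y = np.linalg.solve(L, (X - mu).T)           # solves L y = (x - mu) for every row
    maha = np.sum(y ** 2, axis=0)                # squared Mahalanobis distances
    log_det = 2.0 * np.sum(np.log(np.diag(L)))   # log |Sigma|
    return -0.5 * (d * np.log(2.0 * np.pi) + log_det + maha)

# Standard normal in 2D evaluated at the origin: log-density is -log(2*pi)
print(gaussian_logpdf(np.zeros(2), np.zeros(2), np.eye(2)))
```

Working with log-densities rather than densities avoids underflow when a point lies far from the mean.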

2.2 Components, means, and covariances in GMM

In a GMM, each component is specified by:

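- A mean vector $\boldsymbol{\mu}_k \in \mathbb{R}^d$.

- A covariance matrix $\boldsymbol{\Sigma}_k \in \mathbb{R}^{d \times d}$, which must be symmetric positive semi-definite (and typically assumed positive-definite in practice).

- A mixture weight $\pi_k \ge 0$ such that $\sum_{k=1}^{K} \pi_k = 1$.

Thus, the GMM can be written as:

$$
p(\mathbf{x}) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)
$$

This is the basis for modeling data with multiple subpopulations. As $K \to \infty$, a mixture of Gaussians can approximate a very large family of densities (though in practice we choose $K$ by model selection criteria or domain knowledge).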

2.2.1 Mixture weights and log-likelihood

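Given a dataset $\mathbf{X} = \{\mathbf{x}_i\}_{i=1}^{N}$, the log-likelihood of a GMM with parameters $\theta = \{\pi_k, \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k\}_{k=1}^{K}$ is:

$$
\ell(\theta) = \sum_{i=1}^{N} \log \left( \sum_{k=1}^{K} \pi_k \, \mathcal{N}(\mathbf{x}_i \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \right)
$$

Maximizing this log-likelihood w.r.t. $\theta$ is not straightforward because of the logarithm of a sum. The standard approach is to use the EM algorithm, which provides an efficient iterative solution.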

2.2.2 Convergence criteria in mixture models

When one speaks of "convergence criteria," it can refer to:

- Convergence of the EM algorithm to a local maximum of the log-likelihood (or more generally to a stationary point).

- Stopping criteria used in practice, such as the relative change in log-likelihood between iterations, or a maximum number of iterations, or small changes in parameters, or the difference in posterior membership probabilities across iterations.

In many mixture modeling contexts, we typically define a tolerance $\epsilon$ such that we stop the iterations once

$$
\left| \ell(\theta^{(t+1)}) - \ell(\theta^{(t)}) \right| < \epsilon
$$

or a similar condition based on parameter changes or posterior membership changes.
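A stopping rule of this kind is a one-liner in practice. The sketch below supports both an absolute and a relative change in log-likelihood; the function name `has_converged` and the default tolerance are illustrative assumptions, not a standard API.

```python
def has_converged(ll_new, ll_old, tol=1e-4, relative=False):
    """Return True once the log-likelihood change drops below tol."""
    if ll_old is None:           # first iteration: nothing to compare against yet
        return False
    change = abs(ll_new - ll_old)
    if relative:                 # scale-free variant: change relative to |ll_old|
        change /= max(abs(ll_old), 1e-12)
    return change < tol

print(has_converged(-99.0, -100.0))                      # large jump, keep iterating
print(has_converged(-1000.05, -1000.0, relative=True))   # tiny relative change
```

The relative variant is often easier to tune because the log-likelihood scales with the number of data points.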

3. Parameter estimation using the EM algorithm

The expectation–maximization (EM) algorithm is the canonical method for finding maximum likelihood or maximum a posteriori (MAP) estimates in latent variable models such as GMMs. EM iterates two main steps until convergence: an E-step (where we compute or update the distribution over latent variables) and an M-step (where we maximize with respect to parameters given the updated latent distribution).

3.1 Overview of the EM algorithm, relation to k-means

At a high level:

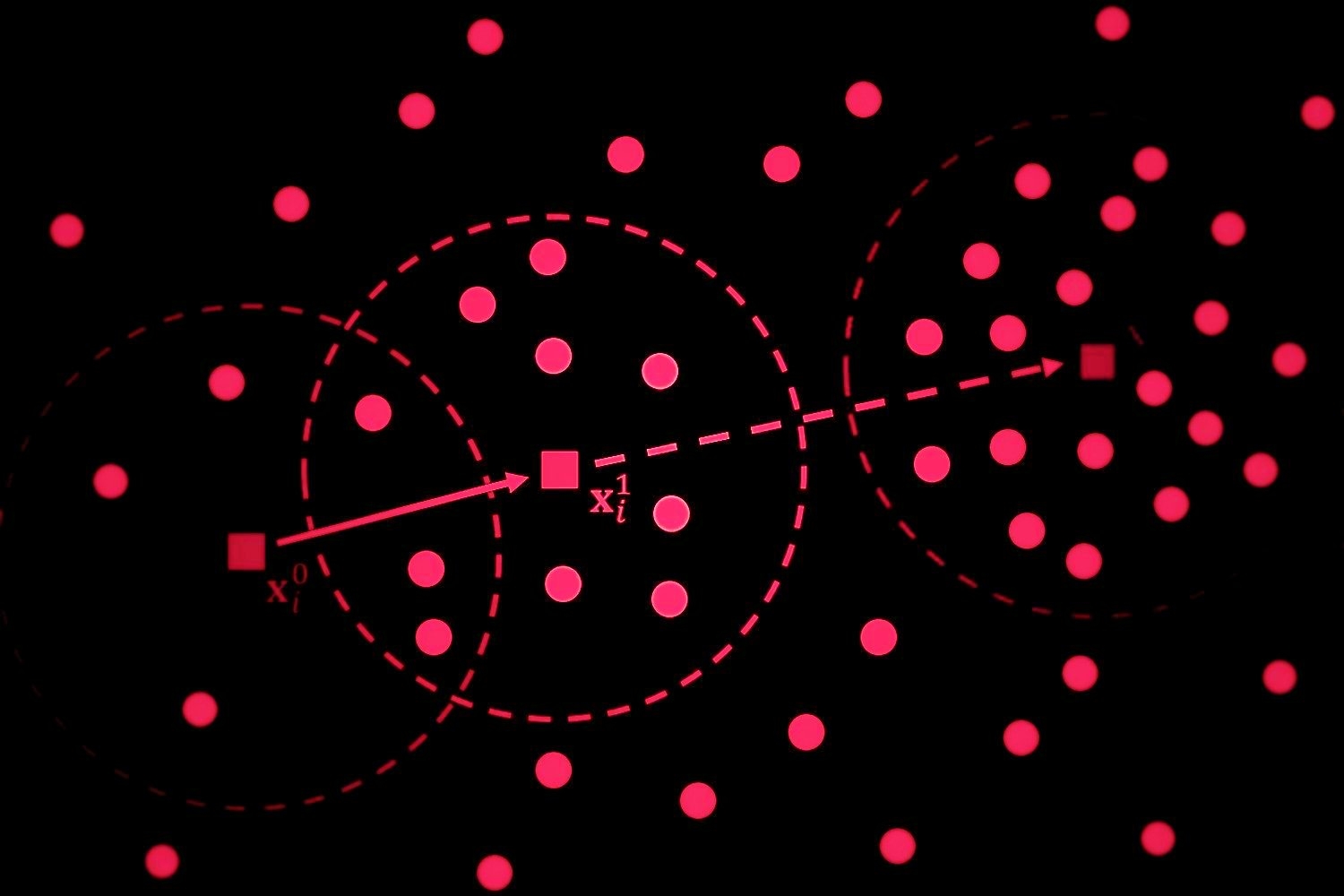

- E-step: Estimate the probability that each data point $\mathbf{x}_i$ belongs to each component $k$, given the current parameters. In GMM terms, this is the posterior probability of the latent variable $z_i$. Concretely:

  $$
  \gamma_{ik} = p(z_i = k \mid \mathbf{x}_i, \theta) = \frac{\pi_k \, \mathcal{N}(\mathbf{x}_i \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)}{\sum_{j=1}^{K} \pi_j \, \mathcal{N}(\mathbf{x}_i \mid \boldsymbol{\mu}_j, \boldsymbol{\Sigma}_j)}
  $$

- M-step: Given those posterior probabilities (or membership responsibilities), update the parameters to maximize the expected complete-data log-likelihood. This yields updated mixing weights $\pi_k$, means $\boldsymbol{\mu}_k$, and covariances $\boldsymbol{\Sigma}_k$.

This procedure is reminiscent of $k$-means clustering in the sense that $k$-means has two analogous steps: (1) assign each point to its nearest centroid; (2) recompute centroids as the mean of assigned points. However, in $k$-means each point has a hard assignment to a cluster, while in GMM each point has a soft assignment (the probabilities). This tends to make GMM more flexible, though the computations are more expensive.

3.2 The expectation step

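In more detail, let $\theta^{(t)}$ denote the current parameter estimates at iteration $t$. The E-step sets:

$$
\gamma_{ik}^{(t)} = \frac{\pi_k^{(t)} \, \mathcal{N}(\mathbf{x}_i \mid \boldsymbol{\mu}_k^{(t)}, \boldsymbol{\Sigma}_k^{(t)})}{\sum_{j=1}^{K} \pi_j^{(t)} \, \mathcal{N}(\mathbf{x}_i \mid \boldsymbol{\mu}_j^{(t)}, \boldsymbol{\Sigma}_j^{(t)})}
$$

These posterior membership probabilities are sometimes called responsibilities, because they quantify the "responsibility" that each component $k$ takes for generating the data point $\mathbf{x}_i$.

Conceptually:

- If $\mathbf{x}_i$ is closer (in Mahalanobis distance) to $\boldsymbol{\mu}_k$ and if $\boldsymbol{\Sigma}_k$ is not too large, then $\gamma_{ik}$ will be relatively large.

- If $\mathbf{x}_i$ is far from that component's center, or if the mixture weight $\pi_k$ is small, $\gamma_{ik}$ becomes smaller.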
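The E-step can be sketched as a vectorized NumPy function. The names `log_gaussian` and `e_step` are illustrative, and the computation is done in the log domain (subtracting the row-wise maximum before exponentiating) so that points far from every component do not underflow to zero.

```python
import numpy as np

def log_gaussian(X, mu, Sigma):
    """Log-density of N(mu, Sigma) at each row of X."""
    d = X.shape[1]
    diff = X - mu
    maha = np.sum(diff @ np.linalg.inv(Sigma) * diff, axis=1)  # squared Mahalanobis distances
    _, log_det = np.linalg.slogdet(Sigma)
    return -0.5 * (d * np.log(2 * np.pi) + log_det + maha)

def e_step(X, weights, means, covs):
    """Responsibilities gamma[i, k] = p(z_i = k | x_i) under the current parameters."""
    K = len(weights)
    log_w = np.column_stack([
        np.log(weights[k]) + log_gaussian(X, means[k], covs[k]) for k in range(K)
    ])
    log_w -= log_w.max(axis=1, keepdims=True)   # shift each row for numerical stability
    gamma = np.exp(log_w)
    gamma /= gamma.sum(axis=1, keepdims=True)   # each row now sums to one
    return gamma

# Toy setup: two unit-covariance components, one point halfway between them
X = np.array([[0.0, 0.0], [4.0, 4.0], [2.0, 2.0]])
weights = np.array([0.5, 0.5])
means = np.array([[0.0, 0.0], [4.0, 4.0]])
covs = np.array([np.eye(2), np.eye(2)])
gamma = e_step(X, weights, means, covs)
print(gamma.round(3))
```

The point halfway between the two means is shared equally, while the points sitting on a mean are claimed almost entirely by that component.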

3.3 The maximization step

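Next, the M-step uses these responsibilities to re-estimate the parameters. Consider the "expected complete-data log-likelihood" (sometimes called the Q-function in EM discussions). The solution for the new parameters is found by setting partial derivatives to zero (with a Lagrange multiplier enforcing $\sum_k \pi_k = 1$). Writing $N_k = \sum_{i=1}^{N} \gamma_{ik}$ for the effective number of points assigned to component $k$, this yields:

- Mixing coefficients:

  $$
  \pi_k^{\text{new}} = \frac{N_k}{N}
  $$

  This is effectively the fraction of data points for which component $k$ is responsible.

- Means:

  $$
  \boldsymbol{\mu}_k^{\text{new}} = \frac{1}{N_k} \sum_{i=1}^{N} \gamma_{ik} \, \mathbf{x}_i
  $$

  This is the weighted average of the data points, with weights $\gamma_{ik}$.

- Covariances:

  $$
  \boldsymbol{\Sigma}_k^{\text{new}} = \frac{1}{N_k} \sum_{i=1}^{N} \gamma_{ik} \, (\mathbf{x}_i - \boldsymbol{\mu}_k^{\text{new}}) (\mathbf{x}_i - \boldsymbol{\mu}_k^{\text{new}})^{\top}
  $$

  This is the weighted sample covariance of the data assigned to component $k$.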
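The three M-step updates translate almost directly into NumPy. The sketch below assumes a responsibility matrix `gamma` of shape (N, K) is already available; the function name `m_step` and the toy data are illustrative.

```python
import numpy as np

def m_step(X, gamma):
    """Closed-form updates of weights, means, and covariances from responsibilities."""
    N, d = X.shape
    K = gamma.shape[1]
    Nk = gamma.sum(axis=0)                    # effective number of points per component
    weights = Nk / N
    means = (gamma.T @ X) / Nk[:, None]       # responsibility-weighted averages
    covs = np.empty((K, d, d))
    for k in range(K):
        diff = X - means[k]
        covs[k] = (gamma[:, k, None] * diff).T @ diff / Nk[k]   # weighted sample covariance
    return weights, means, covs

# With hard 0/1 responsibilities the updates reduce to per-cluster sample statistics
X = np.array([[0.0, 0.0], [2.0, 0.0], [10.0, 10.0], [10.0, 12.0]])
gamma = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
weights, means, covs = m_step(X, gamma)
print(weights, means, covs, sep="\n")
```

Note that the covariance here is the maximum likelihood (divide by $N_k$) estimate, not the unbiased one, and it is singular for this tiny toy dataset; that is exactly the situation the regularization tricks in the numerical stability discussion address.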

3.4 Convergence and stopping conditions

Each EM iteration is guaranteed not to decrease the observed-data log-likelihood, but the algorithm can get stuck in a local maximum or a saddle point. In practice, we repeat E- and M-steps until:

- The improvement in log-likelihood is below some threshold, or

- A maximum number of iterations is reached, or

- The parameter estimates are no longer changing significantly.

Because the log-likelihood for mixture models can have many local maxima, running EM multiple times from different randomized initial parameters is common. Then one chooses the best solution (highest log-likelihood) among the restarts, or uses a suitable model-selection approach.

3.5 Numerical stability considerations

When implementing GMMs and EM in numerical code, one must beware of potential instabilities:

- Log-sum-exp computations: The expression $\log \sum_{k} \exp(a_k)$ can lead to overflow or underflow for large or small values of $a_k$. A stable approach is to use the "log-sum-exp trick," which involves factoring out the maximum exponent inside the sum.

- Covariance singularities: It's possible for the EM algorithm to produce a near-singular covariance matrix if a component collapses onto a single data point. Various regularization strategies exist (e.g., adding a small diagonal term $\epsilon \mathbf{I}$ to each $\boldsymbol{\Sigma}_k$).

- Empty or tiny clusters: If $\pi_k$ becomes extremely small, floating-point precision may cause that component's parameters to degrade. Some frameworks remove or merge such tiny clusters into others.
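The first two safeguards are short enough to sketch directly. The helper names `logsumexp` and `regularize_cov`, and the value of `eps`, are choices made for this illustration (SciPy ships its own `scipy.special.logsumexp`, which would normally be preferred).

```python
import numpy as np

def logsumexp(a, axis=-1):
    """log(sum(exp(a))) computed by factoring out the largest exponent."""
    a_max = np.max(a, axis=axis, keepdims=True)
    out = a_max + np.log(np.sum(np.exp(a - a_max), axis=axis, keepdims=True))
    return np.squeeze(out, axis=axis)

def regularize_cov(Sigma, eps=1e-6):
    """Add eps * I so a collapsing component keeps an invertible covariance."""
    return Sigma + eps * np.eye(Sigma.shape[0])

# Rows hold log(pi_k) + log N(x_i | mu_k, Sigma_k) for two components
log_terms = np.array([[-1000.0, -1001.0], [-2.0, -3.0]])
with np.errstate(divide="ignore"):
    naive = np.log(np.sum(np.exp(log_terms), axis=1))  # first row underflows to log(0)
stable = logsumexp(log_terms, axis=1)
print(naive, stable)

singular = np.zeros((2, 2))  # covariance of a component sitting on a single point
print(np.linalg.det(regularize_cov(singular)))
```

The naive computation returns negative infinity for the first row, while the stable version recovers a finite value close to -1000.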

4. Model selection and evaluation

Determining an appropriate number of mixture components and assessing how well a fitted GMM describes the data can be just as important as the parameter estimation itself.

4.1 Choosing the number of components

A fundamental question in mixture modeling is how many components to use. Approaches include:

- Domain insight: In some applications, prior knowledge might indicate how many subpopulations are expected.

- Heuristics: One might train GMMs for a range of $K$ values and visually inspect solutions or measure cluster compactness, out-of-sample likelihood, etc.

- Automated model selection criteria: The Akaike information criterion (AIC) or Bayesian information criterion (BIC) are widely used to balance model fit with complexity.

4.2 AIC, BIC, and other criteria

- AIC is given by

  $$
  \mathrm{AIC} = 2m - 2\,\ell(\hat{\theta})
  $$

  where $m$ is the number of free parameters in the model, and $\hat{\theta}$ is the parameter estimate that maximizes the likelihood.

- BIC is given by

  $$
  \mathrm{BIC} = m \ln N - 2\,\ell(\hat{\theta})
  $$

  with $N$ the number of data points.

For both criteria, lower values are better. BIC charges $\ln N$ per parameter instead of 2, so for $N \ge 8$ it penalizes complex models (large $m$) more harshly than AIC and tends to favor simpler models. In practice, BIC is popular for selecting $K$ for GMMs because it often yields good real-world performance and has a direct connection to a Bayesian viewpoint (approximation to the marginal likelihood).
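As a small worked example, the sketch below counts the free parameters of a GMM and evaluates both criteria. The function names are illustrative, and the log-likelihood values are invented purely to show a case where the two criteria disagree.

```python
import numpy as np

def gmm_n_params(K, d, covariance_type="full"):
    """Free parameters: (K - 1) weights + K*d means + K covariance blocks."""
    per_cov = {"full": d * (d + 1) // 2, "diag": d, "spherical": 1}[covariance_type]
    return (K - 1) + K * d + K * per_cov

def aic(log_lik, m):
    return 2 * m - 2 * log_lik

def bic(log_lik, m, N):
    return m * np.log(N) - 2 * log_lik

# Hypothetical maximized log-likelihoods for K = 2 and K = 3 on N = 500 points in 2D
N, d = 500, 2
hypothetical_log_lik = {2: -1800.0, 3: -1792.0}
for K, ll in hypothetical_log_lik.items():
    m = gmm_n_params(K, d)
    print(K, m, aic(ll, m), round(bic(ll, m, N), 2))
```

With these made-up numbers AIC slightly prefers three components while BIC prefers two, which illustrates BIC's stronger complexity penalty.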

4.3 Cross-validation for mixture models

Another approach for model selection is cross-validation (CV). The idea is to:

- Partition the data into training and validation sets.

- Fit a GMM with a certain $K$ on the training set.

- Evaluate the log-likelihood of the hold-out set under the fitted model.

- Repeat for multiple folds and multiple choices of $K$.

- Choose the $K$ that yields the best average validation log-likelihood (or some similar metric).

Although more computationally expensive than single-shot metrics like AIC/BIC, cross-validation is often more robust in evaluating predictive performance.
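The cross-validation recipe above might look as follows with scikit-learn. This is a sketch under simple assumptions: the helper `cv_log_likelihood`, the synthetic two-blob data, and the candidate range of $K$ are all choices made for the demo.

```python
import numpy as np
from sklearn.mixture import GaussianMixture
from sklearn.model_selection import KFold

def cv_log_likelihood(X, K, n_splits=5, seed=0):
    """Average held-out log-likelihood per sample for a GMM with K components."""
    scores = []
    folds = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    for train_idx, val_idx in folds.split(X):
        gmm = GaussianMixture(n_components=K, random_state=seed).fit(X[train_idx])
        scores.append(gmm.score(X[val_idx]))   # mean log-likelihood of the held-out fold
    return float(np.mean(scores))

# Synthetic data with two well-separated blobs
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, size=(150, 2)), rng.normal(6.0, 1.0, size=(150, 2))])

cv_scores = {K: cv_log_likelihood(X, K) for K in range(1, 5)}
best_K = max(cv_scores, key=cv_scores.get)
print(cv_scores, best_K)
```

Differences between neighboring values of $K$ can be small, so it is worth looking at the whole curve of scores rather than only the arg-max.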

4.4 Initialization strategies and random restarts

GMM parameter estimation with EM is sensitive to initialization. Common strategies:

- $k$-means-based initialization: Start by running $k$-means clustering to get cluster assignments, then set $\boldsymbol{\mu}_k$ to the cluster centroids, $\boldsymbol{\Sigma}_k$ to the within-cluster covariance, and $\pi_k$ to cluster sizes / $N$.

- Random initialization: Randomly choose $\boldsymbol{\mu}_k$ from the data, maybe add random noise.

- Hierarchical or distance-based methods: If the dimension is manageable, we can do hierarchical clustering first, then pick centers to initialize the GMM.

Because of local maxima, it's common to run EM multiple times with different initializations, picking the solution with the best final log-likelihood or best BIC.
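The $k$-means-based strategy boils down to converting hard cluster labels into initial parameters. The sketch below assumes the labels are already available (for instance from a $k$-means run) and that every cluster is non-empty; the function name `init_from_labels` and the small `reg` term are illustrative.

```python
import numpy as np

def init_from_labels(X, labels, K, reg=1e-6):
    """Turn hard cluster labels (e.g. from k-means) into initial GMM parameters."""
    N, d = X.shape
    weights = np.empty(K)
    means = np.empty((K, d))
    covs = np.empty((K, d, d))
    for k in range(K):
        Xk = X[labels == k]                     # points assigned to cluster k (assumed non-empty)
        weights[k] = len(Xk) / N                # cluster size / N
        means[k] = Xk.mean(axis=0)              # cluster centroid
        diff = Xk - means[k]
        covs[k] = diff.T @ diff / len(Xk) + reg * np.eye(d)   # within-cluster covariance
    return weights, means, covs

# Hand-made labels standing in for the output of a k-means run
X = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 10.0], [12.0, 10.0], [11.0, 13.0]])
labels = np.array([0, 0, 1, 1, 1])
weights, means, covs = init_from_labels(X, labels, K=2)
print(weights, means, sep="\n")
```

These values would then be passed to EM as the starting point; repeating the procedure with different label sets gives the random restarts mentioned above.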

4.5 Avoiding overfitting and underfitting

- Overfitting can happen when $K$ is too large. The model can place components on small sets of points or even single points, artificially boosting the likelihood but not generalizing well.

- Underfitting is the opposite scenario: too few components hamper the model's representational power, leading to systematically poor fits.

- Regularization: You can constrain or regularize the covariance matrices, for instance, requiring them to be diagonal, or adding a penalty term. This mitigates overfitting in high-dimensional spaces.

5. Implementation

To illustrate a straightforward Python-based implementation, we can either rely on libraries (NumPy for data manipulation, scikit-learn for the GMM itself) or craft a minimal EM from scratch. Below is a very simplified version of EM for a GMM, written in plain Python with NumPy (the attribute names follow the scikit-learn style). Note that this is not production-level code. In real usage, it's better to rely on well-tested implementations such as GaussianMixture in scikit-learn.

<Code text={`
import numpy as np

def gaussian_pdf(x, mu, Sigma):
    """Density of N(mu, Sigma) at a single point x."""
    d = len(x)
    det_Sigma = np.linalg.det(Sigma)
    inv_Sigma = np.linalg.inv(Sigma)
    norm_const = 1.0 / np.sqrt((2 * np.pi) ** d * det_Sigma)
    diff = x - mu
    return norm_const * np.exp(-0.5 * diff.T @ inv_Sigma @ diff)

class GMM_EM:
    def __init__(self, n_components, max_iter=100, tol=1e-4, reg_covar=1e-6):
        self.n_components = n_components
        self.max_iter = max_iter
        self.tol = tol
        self.reg_covar = reg_covar  # small diagonal term that keeps covariances invertible

    def _weighted_pdfs(self, X):
        # Entry (i, k) holds weights_[k] * N(X[i] | means_[k], covs_[k])
        n = X.shape[0]
        out = np.zeros((n, self.n_components))
        for i in range(n):
            for k in range(self.n_components):
                out[i, k] = self.weights_[k] * gaussian_pdf(X[i], self.means_[k], self.covs_[k])
        return out

    def fit(self, X):
        n, d = X.shape
        # 1) Initialize mixture weights, means, and covariances
        # Simple random init for demonstration (local generator, global seed untouched)
        rng = np.random.default_rng(42)
        shuffle_idx = rng.permutation(n)
        self.means_ = X[shuffle_idx[:self.n_components]]  # pick random points as means
        self.weights_ = np.ones(self.n_components) / self.n_components
        self.covs_ = np.array([np.cov(X, rowvar=False) for _ in range(self.n_components)])
        log_likelihood_old = None
        for iteration in range(self.max_iter):
            # E-step: compute responsibilities, normalizing each row to sum to one
            resp = self._weighted_pdfs(X)
            resp /= np.sum(resp, axis=1, keepdims=True)
            # M-step: update weights, means, and covariances
            Nk = np.sum(resp, axis=0)  # sum responsibilities per component
            self.weights_ = Nk / n
            self.means_ = (resp.T @ X) / Nk[:, None]
            self.covs_ = np.zeros((self.n_components, d, d))
            for k in range(self.n_components):
                diff = X - self.means_[k]
                self.covs_[k] = (resp[:, k, None] * diff).T @ diff / Nk[k]
                self.covs_[k] += self.reg_covar * np.eye(d)
            # Check convergence via the log-likelihood under the updated parameters
            mixture_density = np.sum(self._weighted_pdfs(X), axis=1)
            log_likelihood = np.sum(np.log(mixture_density + 1e-15))  # small constant avoids log(0)
            if log_likelihood_old is not None:
                if abs(log_likelihood - log_likelihood_old) < self.tol:
                    break
            log_likelihood_old = log_likelihood
        return self

    def predict_proba(self, X):
        resp = self._weighted_pdfs(X)
        resp /= np.sum(resp, axis=1, keepdims=True)
        return resp

    def predict(self, X):
        resp = self.predict_proba(X)
        return np.argmax(resp, axis=1)
`}/>

Example usage

To use the above class:

<Code text={`
import numpy as np

# Generate synthetic data from a mixture of 2 Gaussians
np.random.seed(0)
n = 300
mean1, mean2 = np.array([0, 0]), np.array([5, 5])
cov1 = np.eye(2)
cov2 = np.eye(2)
X1 = np.random.multivariate_normal(mean1, cov1, n // 2)
X2 = np.random.multivariate_normal(mean2, cov2, n // 2)
X = np.vstack([X1, X2])

# Fit GMM
model = GMM_EM(n_components=2, max_iter=100)
model.fit(X)

# Inspect fitted parameters
print("Fitted mixture weights:", model.weights_)
print("Fitted means:", model.means_)
print("Fitted covariances:", model.covs_)

# Predict membership
labels = model.predict(X)
print("Predicted cluster labels (first 10):", labels[:10])
`}/>

In practice, scikit-learn's GaussianMixture is more robust and efficient, and includes advanced initialization, multiple covariance parameterization options, and built-in methods for BIC and AIC.

6. Advanced topics

While the "vanilla" GMM is widely used, many advanced variants and related ideas have been explored in recent research. We touch on some particularly interesting directions here.

6.1 Variational inference for GMMs

Variational inference (VI) is an alternative to EM for approximate posterior inference in Bayesian models. In a standard GMM context, EM yields the maximum likelihood (or MAP, if priors are introduced) parameters. If we want full posterior distributions over means, covariances, and mixing weights, VI methods can be employed to approximate the intractable posterior with a factorized distribution $q(\mathbf{Z}, \theta) = q(\mathbf{Z}) \, q(\theta)$. Instead of the standard E and M steps, we optimize a variational lower bound. The result is often called a Variational Bayesian Gaussian mixture.

Key benefits of VI-based GMMs:

- We get a distribution over parameters, not just point estimates, which can capture parameter uncertainty.

- Automatic regularization from Bayesian priors can mitigate overfitting.

Although the details are more complex than classical EM, implementations can be found in popular frameworks, as VI has gained traction for large-scale Bayesian problems.

6.2 Bayesian Gaussian mixture models

A Bayesian Gaussian mixture typically places prior distributions on the mixture weights (e.g., a Dirichlet prior) and on each component's parameters $(\boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)$. One might use a Normal–Inverse-Wishart prior or, in the univariate case, a Normal–Inverse-Gamma prior, for example. The resulting posterior distribution is not available in closed form, so Markov Chain Monte Carlo (MCMC) methods or variational approximations are used to sample from it or approximate it.

- Conjugate priors: If the prior is conjugate to the likelihood, some steps in MCMC or VI are analytically simpler.

- Practical usage: Bayesian GMM can automatically handle the complexity vs. fit trade-off by letting the posterior concentrate more mass on fewer components if the data do not support more. This can help with "automatic" model selection.

6.3 Infinite mixture models and Dirichlet processes

A "finite" GMM must specify a fixed $K$. But one can define an infinite mixture model using a Dirichlet process Gaussian mixture (DP-GMM). Here, the DP is a prior on the mixing measures, allowing for a random number of components. In practice, algorithms like Gibbs sampling or variational inference for DP-GMMs can yield an effectively inferred number of active components, providing a solution to the "How many clusters?" question in an elegant Bayesian framework.

Dirichlet process mixture models have become a major field of research (e.g., Neal, 2000, and subsequent papers in top-tier conferences). They're quite popular in nonparametric Bayesian approaches to clustering.
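For a quick experiment, scikit-learn's `BayesianGaussianMixture` offers a variational, truncated approximation to a Dirichlet process mixture. The sketch below is only an illustration: the synthetic data, the truncation level of 8, the concentration value, and the 0.02 cutoff for calling a component "active" are all arbitrary choices.

```python
import numpy as np
from sklearn.mixture import BayesianGaussianMixture

# Synthetic data with two well-separated blobs
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-4.0, 1.0, size=(200, 2)), rng.normal(4.0, 1.0, size=(200, 2))])

bgmm = BayesianGaussianMixture(
    n_components=8,                                      # truncation level, an upper bound on K
    weight_concentration_prior_type="dirichlet_process",
    weight_concentration_prior=0.01,                     # small value favors few active components
    max_iter=500,
    random_state=0,
).fit(X)

# Components with non-negligible weight are the ones the data actually support
active = int(np.sum(bgmm.weights_ > 0.02))
print(np.round(bgmm.weights_, 3), active)
```

Instead of fixing $K$ in advance, one sets a generous upper bound and lets the prior push the weights of unneeded components toward zero.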

6.4 Mixture models with non-Gaussian components

Though Gaussian mixtures are the most widely studied, the mixture modeling technique applies to any family of distributions:

- Mixture of Bernoulli distributions for binary data.

- Mixture of Poisson distributions for count data.

- Mixture of factor analyzers (each component is a factor analyzer).

- Mixture of exponentials, gamma distributions, or even mixture of t-distributions for heavy-tailed data.

In each case, the model is typically estimated by an EM-like procedure (or some other method if the log-likelihood is suitable). The mathematics is analogous, though the formula for each component's PDF changes.

6.5 Mixture of Bernoulli distributions

A "mixture of Bernoulli distributions" is relevant in binary data scenarios. Each dimension $j$ is a Bernoulli random variable with parameter $\mu_{kj}$ in component $k$. The mixture log-likelihood sums up $\log \sum_{k} \pi_k \prod_{j} \mu_{kj}^{x_{ij}} (1 - \mu_{kj})^{1 - x_{ij}}$ over the data points $i$. This approach is used in applications such as modeling presence/absence features or bag-of-words with binary indicators.
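The Bernoulli mixture log-likelihood can be evaluated in a few lines. In this sketch `X` is a binary matrix of shape (N, D), `mu` holds the per-component Bernoulli parameters with shape (K, D), and the function name and toy numbers are illustrative.

```python
import numpy as np

def bernoulli_mixture_loglik(X, weights, mu, eps=1e-12):
    """Sum over rows of log sum_k pi_k prod_j mu_kj^x_ij (1 - mu_kj)^(1 - x_ij)."""
    mu = np.clip(mu, eps, 1 - eps)                           # keep the logs finite
    log_p = X @ np.log(mu).T + (1 - X) @ np.log(1 - mu).T    # (N, K) per-component log-probabilities
    log_joint = np.log(weights) + log_p
    m = log_joint.max(axis=1, keepdims=True)                 # log-sum-exp over components
    return float(np.sum(m[:, 0] + np.log(np.exp(log_joint - m).sum(axis=1))))

# Two binary features, two components
X = np.array([[1, 0], [0, 1]])
weights = np.array([0.5, 0.5])
mu = np.array([[0.9, 0.1], [0.2, 0.8]])
print(bernoulli_mixture_loglik(X, weights, mu))
```

For the first row the mixture probability is 0.5 * 0.81 + 0.5 * 0.04 = 0.425, and for the second it is 0.5 * 0.01 + 0.5 * 0.64 = 0.325, so the result equals log(0.425) + log(0.325).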

6.6 EM for Bayesian linear regression

Interestingly, the EM algorithm has broad usage beyond mixture modeling. For instance, it can be applied to certain formulations of Bayesian linear regression where some parameters are integrated out or considered latent, and it has also been used to solve multiple linear regression problems (Kwon et al., 2020). In the standard GMM context, however, the usage of EM is more direct: the mixture memberships are the latent variables.

6.7 Handling missing data and outliers

- Missing data: If some entries of $\mathbf{x}_i$ are missing, EM can be adapted to marginalize them out. This is consistent with the original vision of EM: handle incomplete data by treating missing values as latent variables. The E-step then uses the conditional distribution of missing values given the observed part to fill in or weight the likelihood.

- Outliers: GMM performance can degrade if outliers significantly shift the means and inflate the covariances. Various robust alternatives exist:

  - Heavy-tailed mixtures (e.g., a mixture of Student-t distributions).

  - Regularization or constraints on covariance estimates.

  - Bayesian approaches that place robust priors on parameters, or outlier detection prior to GMM fitting.

[Figure: Illustration of Gaussian mixture components. A hypothetical 2D dataset fitted by a GMM with three elliptical Gaussian components. Each ellipse is a contour of one component's covariance.]

The next sections go deeper into the theory behind EM, additional computational strategies (like partial E and M steps), and more specialized references.

7. Further theoretical perspectives (extended discussion)

7.1 The complete-data likelihood and the Q-function

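The central quantity behind the EM algorithm is the complete-data likelihood, which treats the cluster memberships as observed. If we define indicator variables

$$
z_{ik} = \begin{cases} 1 & \text{if } \mathbf{x}_i \text{ was generated by component } k, \\ 0 & \text{otherwise,} \end{cases}
$$

the complete-data log-likelihood for GMM is:

$$
\ell_c(\theta) = \sum_{i=1}^{N} \sum_{k=1}^{K} z_{ik} \left[ \log \pi_k + \log \mathcal{N}(\mathbf{x}_i \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \right]
$$

The Q-function is the expectation of this complete-data log-likelihood w.r.t. the conditional distribution of $\mathbf{Z}$ given the current parameter estimates $\theta^{(t)}$:

$$
Q(\theta, \theta^{(t)}) = \mathbb{E}_{\mathbf{Z} \mid \mathbf{X}, \theta^{(t)}} \left[ \ell_c(\theta) \right] = \sum_{i=1}^{N} \sum_{k=1}^{K} \gamma_{ik}^{(t)} \left[ \log \pi_k + \log \mathcal{N}(\mathbf{x}_i \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \right]
$$

Maximizing $Q(\theta, \theta^{(t)})$ in the M-step leads precisely to the formulas described earlier.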
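As a short worked example, here is how the mixing-weight update follows from the Q-function. Writing $\gamma_{ik}^{(t)}$ for the responsibilities computed in the E-step and keeping only the terms that depend on the weights $\pi_k$, we add a Lagrange multiplier $\lambda$ for the constraint that the weights sum to one:

```latex
\begin{aligned}
\mathcal{L}(\boldsymbol{\pi}, \lambda)
  &= \sum_{i=1}^{N} \sum_{k=1}^{K} \gamma_{ik}^{(t)} \log \pi_k
     + \lambda \Big( 1 - \sum_{k=1}^{K} \pi_k \Big), \\
\frac{\partial \mathcal{L}}{\partial \pi_k}
  &= \frac{1}{\pi_k} \sum_{i=1}^{N} \gamma_{ik}^{(t)} - \lambda = 0
  \;\;\Rightarrow\;\; \pi_k = \frac{N_k}{\lambda},
  \qquad N_k = \sum_{i=1}^{N} \gamma_{ik}^{(t)}, \\
\sum_{k=1}^{K} \pi_k = 1
  &\;\;\Rightarrow\;\; \lambda = \sum_{k=1}^{K} N_k = N
  \;\;\Rightarrow\;\; \pi_k^{\text{new}} = \frac{N_k}{N}.
\end{aligned}
```

The last step uses the fact that the responsibilities of each data point sum to one over the components, so summing $N_k$ over $k$ gives $N$. The mean and covariance updates follow the same pattern, without the need for a constraint.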

7.2 Monotonicity and convergence proofs

It can be shown that each EM iteration will not decrease the observed-data log-likelihood:

$$
\ell(\theta^{(t+1)}) \ge \ell(\theta^{(t)})
$$

C. F. Jeff Wu's 1983 proof established the convergence properties outside of the exponential family setting as well. However, the iteration can converge to local maxima or saddle points. Deterministic annealing or random restarts are common strategies to mitigate this issue.

7.3 Coordinate ascent viewpoint

EM can be interpreted as a two-block coordinate ascent on a certain objective function (the evidence lower bound, or ELBO), and it is also a special case of the more general MM framework (minorize–maximize, in the maximization setting). This viewpoint clarifies how partial or incremental updates can still improve or maintain a lower bound on the log-likelihood.
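The coordinate ascent view rests on a standard decomposition of the log-likelihood, sketched here for an arbitrary distribution $q(\mathbf{Z})$ over the latent assignments (the symbol $\mathcal{F}$ for the lower bound is a notational choice made for this note):

```latex
\begin{aligned}
\log p(\mathbf{X} \mid \theta)
  &= \underbrace{\sum_{\mathbf{Z}} q(\mathbf{Z})
       \log \frac{p(\mathbf{X}, \mathbf{Z} \mid \theta)}{q(\mathbf{Z})}}_{\text{ELBO } \mathcal{F}(q, \theta)}
   + \underbrace{\mathrm{KL}\big( q(\mathbf{Z}) \,\|\, p(\mathbf{Z} \mid \mathbf{X}, \theta) \big)}_{\ge 0}, \\
\text{E-step:} \quad q^{(t)}
  &= \arg\max_{q} \mathcal{F}(q, \theta^{(t)}) = p(\mathbf{Z} \mid \mathbf{X}, \theta^{(t)}), \\
\text{M-step:} \quad \theta^{(t+1)}
  &= \arg\max_{\theta} \mathcal{F}(q^{(t)}, \theta).
\end{aligned}
```

The E-step makes the bound tight by driving the KL term to zero, and the M-step then raises the bound, so the log-likelihood cannot decrease. Any update that merely increases $\mathcal{F}$ in one of the two blocks, rather than fully maximizing it, still preserves this guarantee.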

8. Broader research context and advanced references

- Robust mixtures: Various works (e.g., "Robust Clustering Methods" in JMLR, 2018) adapt GMMs to be robust to outliers by employing heavier-tailed distributions or trimming strategies.

- High-dimensional data: Methods that impose structure on $\boldsymbol{\Sigma}_k$ (like factor analysis, diagonal covariance, or shared covariance) help GMMs scale to high-dimensional data. In this domain, see "Gaussian parsimonious clustering models" by Celeux and Govaert, Pattern Recognition (1995).

- Online/Incremental EM: For streaming data, one can use incremental or online versions of the EM algorithm that update the parameters in small batches or single points at a time. This is especially relevant for big data.

- Parallelization: To handle large datasets, parallel and distributed variants of EM for GMM have been proposed (for instance, "Accelerating Expectation–Maximization with Frequent Updates" in Cluster 2012).

- Statistical theory: The asymptotic properties of EM estimates in mixture models, such as consistency and rate of convergence, can be subtle. Researchers have studied the identifiability conditions under which mixture parameters can be uniquely identified.

9. Practical tips and pitfalls

- Cluster degeneracy: If you see that one or more covariance matrices are collapsing (becoming singular) during training, you might fix it by:

  - Setting a lower bound on the determinant, effectively $\det(\boldsymbol{\Sigma}_k) \ge \epsilon$ for some small $\epsilon > 0$.

  - Re-initializing that component's parameters if it degenerates too severely.

- Interpretability: GMM is sometimes used for clustering, but the mixture components do not necessarily correspond to "well-separated" clusters. Overlaps can be large. Evaluate membership probabilities carefully.

- Scaling and standardization: In many real datasets, different dimensions of $\mathbf{x}$ vary at different scales. Standardizing or normalizing the data is often recommended before GMM fitting, to avoid numerical or interpretational confusion in the covariance estimates.

- Dimensionality: With dimension $d$, each covariance matrix has $d(d+1)/2$ parameters, which can become large for bigger $d$. You might reduce dimensionality with PCA or adopt diagonal/spherical covariances.

- Hyperparameter tuning: Model selection via BIC or cross-validation is generally recommended. If your final solution ends up with many very small mixture weights, try a smaller $K$.

10. Additional code example: scikit-learn usage

Here is a short snippet using scikit-learn's GaussianMixture class for demonstration:

<Code text={`
import numpy as np
from sklearn.mixture import GaussianMixture
import matplotlib.pyplot as plt

# Synthetic data
np.random.seed(123)
n_samples = 500
mean_a = [2, 2]
cov_a = [[1, 0.2], [0.2, 1]]
data_a = np.random.multivariate_normal(mean_a, cov_a, n_samples // 2)
mean_b = [-2, -3]
cov_b = [[1, -0.1], [-0.1, 1]]
data_b = np.random.multivariate_normal(mean_b, cov_b, n_samples // 2)
X = np.vstack((data_a, data_b))

# Fit GMM
gmm = GaussianMixture(n_components=2, covariance_type='full', random_state=42)
gmm.fit(X)
print("Means:")
print(gmm.means_)
print("Covariances:")
print(gmm.covariances_)
print("Weights:", gmm.weights_)

# Predict cluster membership
labels = gmm.predict(X)

# Plot
plt.figure(figsize=(6, 6))
plt.scatter(X[:, 0], X[:, 1], c=labels, cmap='viridis', s=30)
plt.title("GMM Clustering with scikit-learn")
plt.show()
`}/>

This snippet trains a GMM with two components and then plots the data points with color-coded cluster assignments (based on the maximum posterior responsibility). Notice how easy it is to retrieve the model's means, covariances, and weights.

11. Final remarks

Gaussian mixture models, while conceptually straightforward, continue to be a mainstay in unsupervised learning and distribution modeling tasks. They are a natural extension of the single Gaussian approach and can handle multi-modal, overlapping distributions elegantly. Despite potential challenges such as local maxima, cluster degeneracy, or dimensional explosion, GMMs remain favored due to their flexibility, interpretability, and a long history of theoretical grounding.

With the advent of advanced Bayesian and nonparametric approaches (like Dirichlet process mixtures) and faster optimization methods (like stochastic or parallel EM), GMMs are more capable than ever of scaling to large datasets. On the experimental side, scikit-learn's GaussianMixture or similarly robust libraries in languages like R (mclust) or Julia (GaussianMixtures.jl) can be used for quick and reliable modeling.

I encourage the reader to experiment with real data, tune the number of components, and get a feel for how GMMs partition data in a soft, probabilistic manner. The synergy of GMMs with other areas — like hidden Markov models for time series, mixture of experts for advanced regression tasks, or as building blocks in generative adversarial networks — further highlights their central role in modern data science.

Below are extended references and short notes on specialized topics, providing a launching point for further study.

12. Extra references and advanced reading

- Dempster, A. P., Laird, N. M., & Rubin, D. B. (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39(1), 1–38.

- McLachlan, G., & Peel, D. (2000). Finite Mixture Models. Wiley. A comprehensive treatment, including theoretical and practical aspects.

- Bishop, C. M. (2006). Pattern Recognition and Machine Learning. Springer. Chapter on mixture models, EM, and related ideas.

- Neal, R. M. (2000). Markov chain sampling methods for Dirichlet process mixture models. Journal of Computational and Graphical Statistics, 9(2), 249–265. Sampling algorithms for infinite mixture models.

- Fraley, C., & Raftery, A. E. (2002). Model-based clustering, discriminant analysis, and density estimation. Journal of the American Statistical Association, 97(458), 611–631.

- Stephens, M. (2000). Dealing with label switching in mixture models. Journal of the Royal Statistical Society: Series B (Statistical Methodology). On dealing with the label ambiguity in mixture components.

I hope this detailed exploration of Gaussian mixture models will help you see why they remain a fundamental building block of advanced machine learning and data science pipelines, especially in unsupervised or semi-supervised contexts. Whether you use them for clustering, density estimation, or as submodules in more elaborate pipelines, GMMs will likely continue to be relevant for years to come.