🎓 44/2

This post is a part of the Working with data educational series from my free course. Please keep in mind that the correct sequence of posts is outlined on the course page, while it can be arbitrary in Research.

I'm also happy to announce that I've started working on standalone paid courses, so you could support my work and get cheap educational material. These courses will be of completely different quality, with more theoretical depth and niche focus, and will feature challenging projects, quizzes, exercises, video lectures and supplementary stuff. Stay tuned!

Hey look buddy, I'm an engineer. That means I solve problems, not problems like "What is beauty?"... (c)

Data engineering serves as the backbone for modern data-intensive applications, ensuring that raw data is effectively captured, stored, transformed, and made accessible to downstream systems such as analytics platforms, machine learning pipelines, and business intelligence applications. Although it often operates "behind the scenes", data engineering provides the essential infrastructure that powers many of the advanced machine learning solutions we see in production today.

Data engineering has grown in prominence due to the explosive increase in the volume, velocity, and variety of data generated across industries. From retail to healthcare, from social networks to IoT devices, the explosion of data sources calls for sophisticated mechanisms to handle and process information efficiently. Data engineers build these mechanisms — commonly referred to as "pipelines" — that enable reliable data ingestion, transformation, cleansing, and storage at scale. These pipelines and their surrounding ecosystem form the "data supply chain" that data scientists, analysts, and machine learning practitioners rely on.

The role of data engineering in machine learning and data science

Data engineering bridges the gap between the raw data that originates from numerous sources and the curated, high-quality data that data scientists and analysts need in order to glean insights or build predictive models. Without well-designed data pipelines and storage solutions, data-driven workflows risk being bogged down by poor data quality, inconsistent data schemas, incomplete records, or insufficient compute resources for large-scale processing.

Data scientists typically focus on model development, feature engineering, algorithmic exploration, and analytics; data engineers focus on the design, construction, and maintenance of systems that ensure those specialists have consistent, accurate, and timely data to work with. As the lines between roles can blur in agile or smaller teams, many professionals have come to appreciate at least a working knowledge of data engineering fundamentals, making them more effective at collaborating across data-related functions.

Key responsibilities and skill sets of a data engineer

Broadly speaking, data engineers are responsible for:

- Data acquisition and ingestion: Designing and implementing mechanisms to pull data from various sources (e.g., relational databases, logs, APIs, web scraping, sensor networks) into a centralized system or pipeline entry point.

- Data transformation: Using a variety of tools (e.g., SQL, Spark, Python scripts) to clean and reshape the data, manage schemas, and merge or aggregate data as required for downstream tasks.

- Data storage: Choosing and implementing appropriate storage solutions, such as data warehouses, data lakes, or specialized NoSQL databases, ensuring they are reliable and performant.

- Infrastructure and tooling: Working with distributed systems, containerization, virtualization, and cluster management solutions to maintain a robust data platform that scales.

- Orchestration: Coordinating complex workflows, scheduling jobs, and setting up automated data pipelines with tools like Apache Airflow, Luigi, or other orchestrators, ensuring timely data availability and reliability.

- Security and governance: Implementing authentication, authorization, encryption, data cataloging, compliance checks, and relevant data governance policies (e.g., GDPR).

- Monitoring and logging: Setting up logs, alerts, and dashboards to keep track of pipeline performance, detect anomalies, and ensure SLAs are met.

Data engineers combine proficiency in programming (often Python, Java, or Scala) with database knowledge (SQL, NoSQL), distributed processing frameworks (Hadoop, Spark), containerization (Docker, Kubernetes), workflow orchestration (Airflow), and cloud platforms (AWS, GCP, Azure). They also need a thorough understanding of networking, file formats (Parquet, Avro, JSON), and system design principles to build solutions that can handle modern data workloads.

Overview of essential data engineering concepts

Before diving deeper into core aspects such as OLTP, OLAP, data warehouses (DWH), and the design of data pipelines, it is useful to have a mental map of the field:

- Relational vs. non-relational data stores: Traditional SQL-based systems remain ubiquitous, but specialized NoSQL stores (e.g., document, key-value, wide-column, graph) are key to handling unstructured or semi-structured data.

- Batch vs. streaming: In some scenarios, companies can process data in micro-batches or large daily loads, while others need near-real-time pipelines for immediate insights or trigger-based events.

- Data lakes vs. data warehouses: A data lake can store data in raw or minimal-processed form, whereas a data warehouse typically holds data in a structured format optimized for analytics. Hybrid or "lakehouse" architectures combine properties of both.

- Automation and orchestration: Essential for production-grade data engineering, enabling highly automated, continuously running data pipelines with minimal human intervention.

In the following chapters, I will cover these areas in detail, touching on best practices, advanced frameworks, and emerging trends in data engineering as well as referencing leading-edge research when relevant.

2. Understanding OLTP, OLAP and DWH

One of the foundational areas in data engineering involves understanding how different types of systems are designed for different data workloads. This typically falls into two broad categories: OLTP (Online Transaction Processing) and OLAP (Online Analytical Processing). Data warehouses (DWH) often form the backbone of OLAP workloads. Let's unpack these concepts.

OLTP: Online transaction processing

OLTP systems are built primarily to handle large numbers of short, simple, and frequent transactions. Typical operations include inserting, updating, or deleting small amounts of data. Common examples include e-commerce transaction databases, inventory management systems, and banking transaction systems. The main design considerations for OLTP systems are:

- High concurrency: Supporting hundreds or thousands of concurrent users or processes transacting data simultaneously.

- Fast reads and writes: Latencies are typically measured in milliseconds or microseconds.

- Normalized schema design: OLTP databases are often normalized (in the sense of database normalization forms) to avoid redundant data, reduce update anomalies, and maintain data integrity.

A classic example is a relational database using a design modeled around entity-relationship diagrams (ERD). For instance, a typical e-commerce system might have tables like customers, orders, and order_items. The emphasis is on efficient transactions and ensuring data integrity via ACID (Atomicity, Consistency, Isolation, Durability) properties.

OLAP: Online analytical processing

OLAP systems focus on analytical queries often involving large amounts of historical data. These queries might be aggregations, roll-ups, or computations across billions of rows to extract insights or generate business intelligence dashboards. The main design considerations for OLAP systems are:

- Complex queries: Possibly scanning large swaths of data to produce insights, e.g., monthly sales aggregates, multi-dimensional analysis, or advanced statistical computations.

- High read throughput: Prioritizing query performance for aggregated data retrieval.

- Denormalized schema: OLAP systems often employ star or snowflake schemas to reduce the complexity of joins and speed up queries.

The technology choices here include specialized data warehouse platforms like Snowflake, Amazon Redshift, Google BigQuery, or on-premise solutions like PostgreSQL-based data warehouses or columnar stores (e.g., Apache Parquet, Apache ORC).

Data warehouse (DWH)

A data warehouse is a central repository that consolidates data from various sources — typically OLTP systems and other data streams — in a format optimized for analytical queries. It typically follows these principles:

- Subject-oriented: Data is organized by subjects or business domains (e.g., sales, finance, marketing).

- Integrated: Data from heterogeneous sources is cleaned, transformed, and integrated into a consistent schema.

- Time-variant: Data within a warehouse is almost always associated with time stamps and can hold historical snapshots, enabling trend analysis.

- Non-volatile: Once loaded into the warehouse, data is not typically changed. Instead, historical data is retained, appended, or archived to maintain audit trails.

Data warehouses might be built on top of relational technology but use specialized columnar storage and indexing, or they can be entirely cloud-based services. They are the heart of many organizations' business intelligence (BI) solutions, fueling dashboards, reports, and advanced analytics.

Research highlight: In the late 1990s and early 2000s, prominent database researchers such as Michael Stonebraker (Stonebraker and gang, VLDB Journal) introduced specialized column-store concepts that led to a new era of data warehousing solutions. Column-oriented databases significantly improved query performance for analytical workloads where entire columns must be scanned.

Understanding the differences between OLTP, OLAP, and how data warehouses structure data is crucial. As a data engineer, you need to design appropriate solutions for data ingestion from OLTP sources, transform them suitably, and load them into OLAP-optimized warehouses.

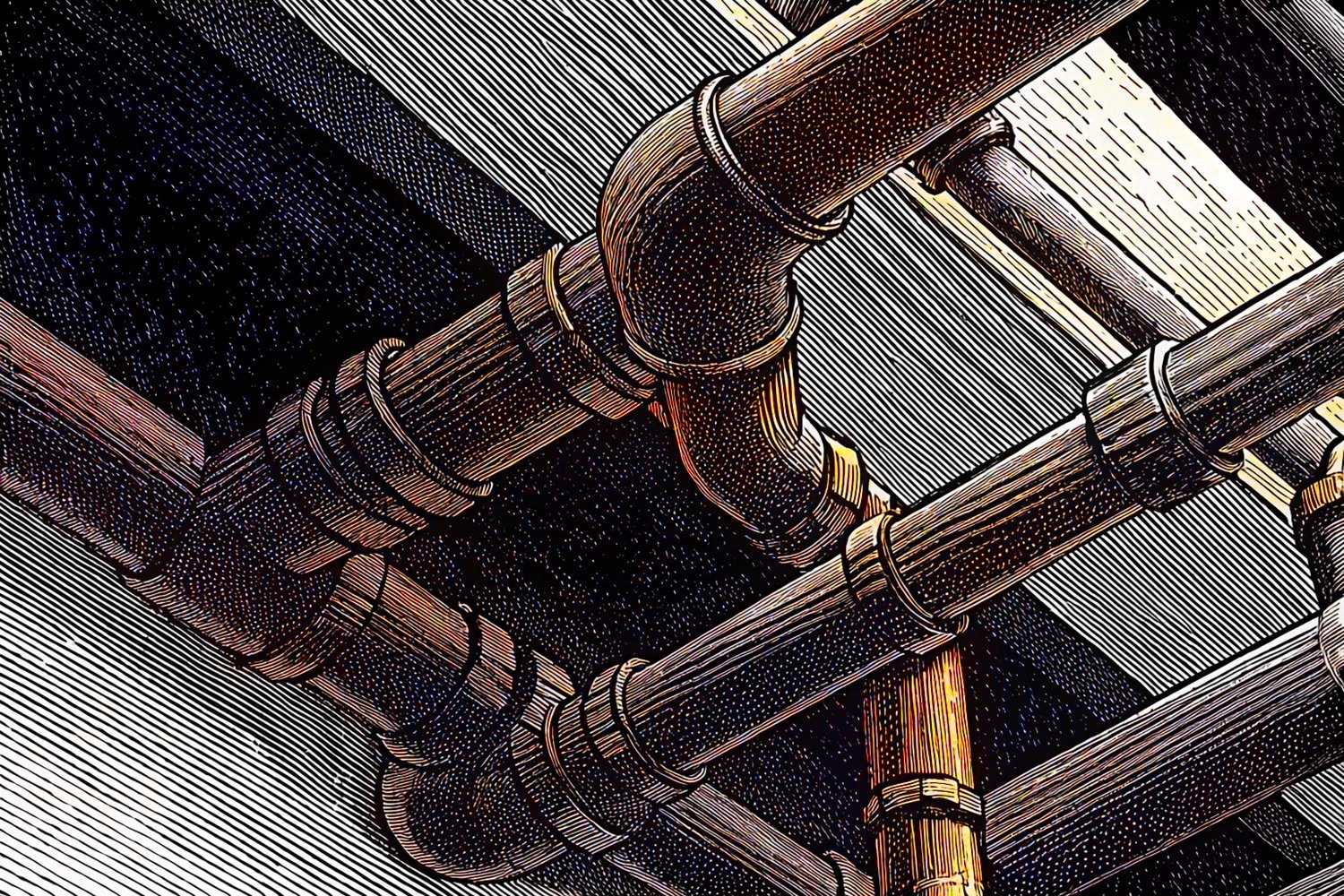

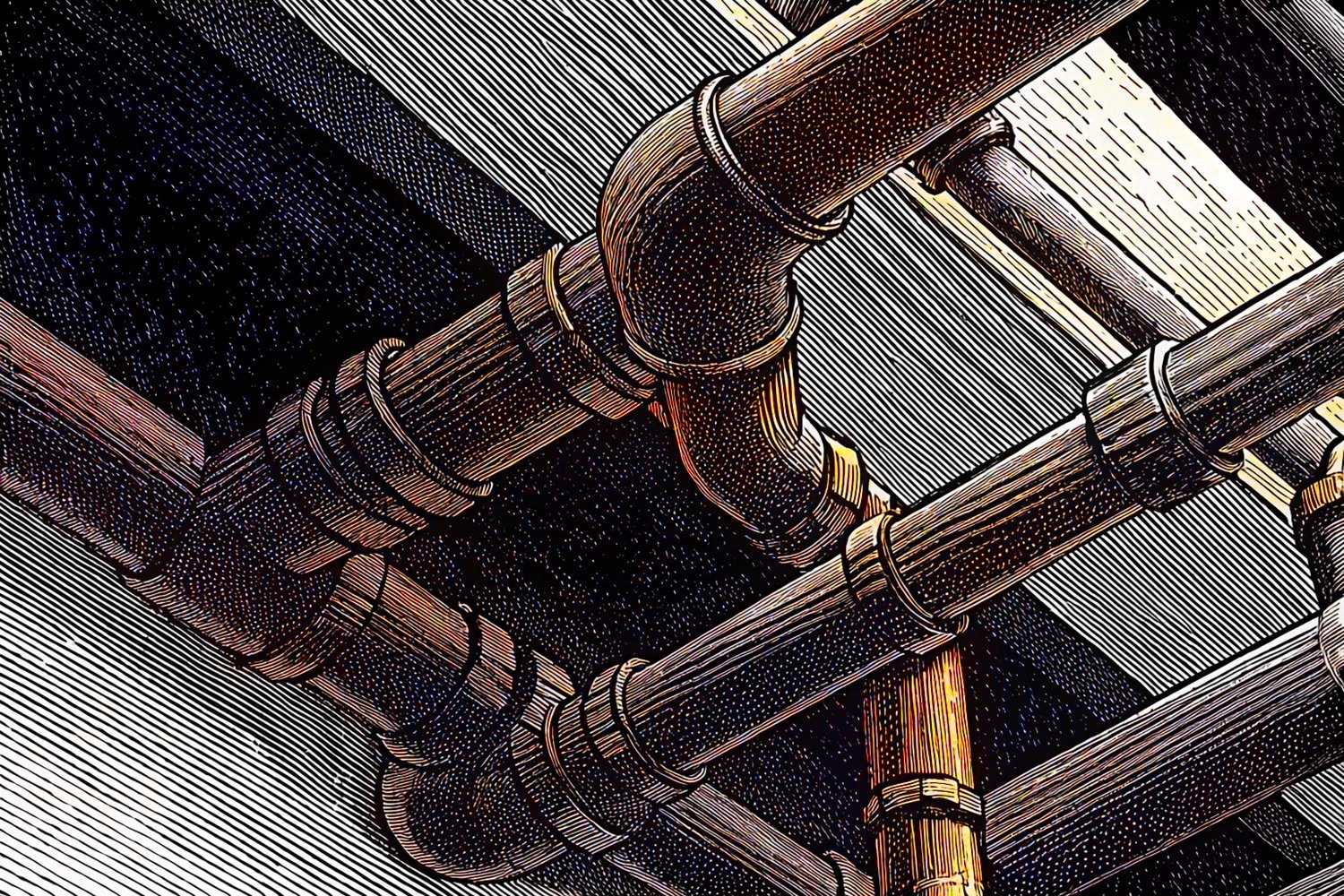

3. Data pipelines

A data pipeline is a series of steps in which raw or semi-structured data is ingested from source systems, processed or transformed in one or more stages, and then delivered to a target system (e.g., a data lake, data warehouse, or data mart). Data pipelines can range from relatively simple scripts to highly complex enterprise workflows orchestrating dozens (or even hundreds) of interdependent tasks.

Definition and importance of data pipelines

Data pipelines are the lifeblood of data-centric organizations; they automate the flow of information so that insights can be delivered reliably and in a timely manner. By adopting a pipeline-oriented mindset, teams can focus on building modular data transformations that can be tested, versioned, and reused. This fosters better collaboration and reduces operational overhead, since changes in one part of the pipeline can be managed and propagated systematically without breaking the rest of the system.

Common challenges that data pipelines address include:

- Scalability: Handling increases in data volume or velocity without major re-engineering.

- Maintainability: Making it simpler to debug failures, update transformations, and add new data sources.

- Data quality: Ensuring data is cleaned, validated, and curated before it reaches downstream applications.

- Reliability: Reducing downtime and ensuring data is delivered on time.

Components of a typical data pipeline

- Data ingestion: The entry point where data is collected from various sources (databases, logs, APIs, streaming systems).

- Transformation/processing: A series of transformations, such as parsing, cleaning, joining, aggregating, feature engineering (for ML), or enrichment with external data. This could be done using frameworks like Apache Spark, Beam, Flink, or even lightweight Python scripts.

- Storage: Data is written to a target system, which could be a data lake, data warehouse, or specialized analytical store.

- Orchestration: Pipelines are often scheduled, monitored, retried upon failure, and have dependencies managed by an orchestrator like Apache Airflow, Luigi, or a cloud-based service like AWS Step Functions or GCP Cloud Composer.

Types of data pipelines (batch, real-time, hybrid)

- Batch pipelines: Aggregate and process data in chunks at scheduled intervals (e.g., daily or hourly). Tools like Apache Hadoop or Spark are commonly used.

- Real-time (streaming) pipelines: Process data on the fly, delivering near-instant updates to downstream systems. Apache Kafka and Spark Streaming are typical technologies in this domain.

- Hybrid (lambda or kappa): Combine batch and streaming for a best-of-both-worlds approach. The lambda architecture uses a batch layer for accuracy and a streaming layer for low latency, whereas the kappa architecture streamlines the pipeline to a single streaming framework for both real-time and reprocessing tasks.

Best practices for designing scalable data pipelines

- Modular design: Break transformations into discrete tasks that can be developed, tested, and replaced without affecting unrelated pipeline components.

- Idempotence: Ensure tasks can be safely retried without causing duplicates or inconsistent states.

- Monitoring and alerting: Use logs, metrics, dashboards, and alerting systems to detect anomalies or failures promptly.

- Data partitioning: Partition data by time or other relevant dimensions to speed queries and reduce concurrency conflicts.

- Schema versioning: Manage schema evolution explicitly. Tools like Schema Registry (in the Kafka ecosystem) help ensure producers and consumers remain compatible over time.

- Security and compliance: Consider data privacy, encryption, PII removal or masking, and compliance requirements (e.g., GDPR, HIPAA).

In the next chapter, I will dive into the processes known as ETL (extract, transform, load) and ELT (extract, load, transform), which form the backbone of many data pipelines.

4. ETL

ETL (Extract, Transform, Load) is one of the most essential concepts in data engineering, describing the typical workflow of ingesting data from source systems, transforming it to meet the requirements of the target system, and loading it into that system. Despite being an older term, it's still highly relevant. Alongside ETL, newer paradigms such as ELT (extract, load, transform) have gained traction, especially with the rise of cloud data warehouses.

What is ETL and why it matters

The primary goal of ETL is to enable robust and reliable data movement from multiple sources into a unified data store (e.g., a data warehouse). By applying transformations during the pipeline, data engineers ensure that analytics or machine learning models can be run on well-structured, clean, and curated data. Key reasons why ETL matters:

- Data quality: By cleaning data during the transformation step, organizations can be more confident in the correctness of the data that arrives in the warehouse.

- Performance optimization: Data can be aggregated, indexed, or partitioned before loading, making queries more efficient for analytical workloads.

- Single source of truth: ETL fosters the creation of a centralized repository (e.g., a data warehouse or data mart) that unifies data across different systems with consistent transformations applied.

ETL vs. ELT

In ELT workflows, the load step occurs before the transform step. This difference is crucial in modern cloud data warehouse environments, where it can be more efficient to quickly load data in its raw form into a powerful data warehouse or data lake, then transform it using SQL or other compute engines within that environment. The key reasons some teams prefer ELT over ETL:

- Scalability: Cloud-based data warehouses (like Snowflake, BigQuery, Redshift) can scale horizontally and handle large transformations internally.

- Flexibility: You can choose to transform only the data you need, or re-transform the data without reloading from the source.

- Lower friction: Data scientists and analysts can directly manipulate raw data using SQL or other query languages within the warehouse.

Both ETL and ELT approaches can coexist in a data engineering ecosystem; the choice often depends on requirements around data latency, tool preference, cost, and architectural constraints.

Common ETL tools and technologies

A variety of tools exist to implement ETL workflows. Some popular ones include:

- Apache NiFi: A dataflow tool that provides a visual interface for building data pipelines and transformations.

- AWS Glue: A serverless ETL service offered by Amazon Web Services with crawlers, job scheduling, and integration with other AWS services.

- Informatica PowerCenter: A commercial enterprise-grade data integration platform.

- Talend: An open-source enterprise integration platform featuring drag-and-drop job design.

- Pentaho Data Integration (Kettle): An open-source suite offering ETL, data modeling, and analytics tools.

- dbt (data build tool): Not strictly ETL in the traditional sense, but widely used for transformations in modern ELT workflows within cloud data warehouses.

Building the best ETL processes

Crafting robust ETL processes involves meticulous design and best practices, including:

- Metadata management: Keep track of data lineage, schema versions, and transformations applied, ensuring visibility and traceability.

- Error handling and retries: ETL processes can fail for various reasons (source downtime, schema changes). Plan for graceful retries, partial reprocessing, and rollback mechanisms.

- Test-driven development (TDD): Write automated tests that verify transformation correctness and data integrity.

- Performance tuning: Use distributed processing frameworks (e.g., Spark) or push-down optimization (e.g., using the database's internal SQL engine) to handle transformations on big data efficiently.

- Documentation: Publish concise yet thorough pipeline documentation for maintainability.

Below is a short Python-based snippet (using a pseudo-library) to illustrate an ETL approach for reading data from a CSV file, performing transformations, and loading it into a database table:

<Code text={`

import csv

import psycopg2

from datetime import datetime

def extract_data(csv_file_path):

with open(csv_file_path, mode='r', encoding='utf-8') as f:

reader = csv.DictReader(f)

records = list(reader)

return records

def transform_record(record):

# Simple transform: parse date and convert to consistent format

record['date_field'] = datetime.strptime(record['date_field'], '%m/%d/%Y').strftime('%Y-%m-%d')

# Additional transforms like field name normalization or type casting

return record

def load_data(records, connection_params):

conn = psycopg2.connect(**connection_params)

with conn, conn.cursor() as cur:

for rec in records:

cur.execute("""

INSERT INTO target_table (col1, col2, date_field)

VALUES (%s, %s, %s)

""", (rec['col1'], rec['col2'], rec['date_field']))

conn.close()

def run_etl(csv_file_path, connection_params):

data = extract_data(csv_file_path)

transformed = [transform_record(r) for r in data]

load_data(transformed, connection_params)

print("ETL complete.")

`}/>In a real-world setting, the transformation logic might be more complex, possibly requiring distributed processing. But this snippet captures the general structure of an ETL script.

5. Apache Airflow

Apache Airflow is one of the most popular open-source tools for orchestrating workflows in data engineering. Originally developed by Airbnb, it provides a platform to define data pipelines as DAGs (Directed Acyclic Graphs) and schedule them. Airflow has become a de facto standard for orchestration due to its flexibility, extensible plugin system, and broad ecosystem of operators.

Building and orchestrating workflows with DAGs

In Airflow, a DAG is a conceptual representation of the tasks (nodes) and their dependencies (edges). Each task could be an ETL job, a Spark job, a Python function, a Bash script, or any other form of data-processing step. You can define dependencies explicitly, which helps ensure tasks run in the correct sequence.

Common design principles for Airflow DAGs include:

- Idempotent tasks: Make your tasks safe to rerun without duplicating data or causing system inconsistencies.

- Task atomicity: Keep tasks as small as possible, focusing on a single well-defined function.

- Version control: Airflow pipelines are Python files, so they can be tracked in Git or other version control systems to manage changes and code reviews.

A minimal example DAG could look like:

<Code text={`

from airflow import DAG

from airflow.operators.bash_operator import BashOperator

from datetime import datetime, timedelta

default_args = {

'owner': 'data_engineer',

'depends_on_past': False,

'start_date': datetime(2025, 3, 1),

'retries': 1,

'retry_delay': timedelta(minutes=5),

}

with DAG('sample_dag', default_args=default_args, schedule_interval='@daily') as dag:

task_extract = BashOperator(

task_id='extract_data',

bash_command='python /path/to/extract_script.py'

)

task_transform = BashOperator(

task_id='transform_data',

bash_command='python /path/to/transform_script.py'

)

task_load = BashOperator(

task_id='load_data',

bash_command='python /path/to/load_script.py'

)

task_extract >> task_transform >> task_load

`}/>Each operator references a specific action (in this example, a simple Bash command that runs a Python script). Airflow's UI provides a clear visual representation of this DAG, enabling you to monitor task statuses, logs, and retries.

Monitoring and troubleshooting Airflow pipelines

Airflow provides a web interface with built-in monitoring features:

- Graph view: See the structure of your DAG visually.

- Tree view: Review runs of your DAG over time.

- Task logs: Check the output or error messages from each task's execution.

- Retries: Configure automatic retries. If a task fails, Airflow can re-run it after a specified delay.

Common Airflow issues include dependencies not being met (due to upstream failures), environment mismatches, or concurrency limitations. Configuring concurrency parameters and pool sizes for tasks is a best practice when scaling out.

6. Workflow orchestration and automation

Workflow orchestration deals with coordinating the execution of multiple tasks or data flows in an organized, scheduled, and fault-tolerant manner. By orchestrating tasks, data engineers can ensure that complex pipelines run consistently and that any issues are caught promptly and handled gracefully.

Scheduling, dependency management and alerting

Core features of workflow orchestrators such as Airflow, Luigi, and Kubernetes-native tools (e.g., Argo) include:

- Scheduling: Triggering jobs at specified times (cron-like schedules) or event-based triggers (e.g., new file arrivals).

- Dependency management: Defining the order in which tasks should run. If a dependent task fails, the orchestrator usually prevents downstream tasks from running.

- Alerting: Sending notifications or automated triggers (e.g., Slack messages, emails, PagerDuty alerts) when tasks fail or exceed timeouts.

Robust orchestration systems allow for complex dependencies, parallel execution, branching logic, and event-driven triggers. They often integrate with container platforms, enabling tasks to run in isolated, reproducible environments.

Automation strategies for reliability and scalability

While orchestration is about managing tasks, automation is about removing manual interventions. Some proven automation strategies in data engineering include:

- Automated testing: Test transformations using both unit and integration tests. This ensures each pipeline step is valid before it becomes part of a production workflow.

- Configuration management: Use environment variables or configuration files to handle credentials, database connections, or environment-specific paths. Tools like HashiCorp Vault or AWS Secrets Manager ensure sensitive information is not hard-coded.

- CI/CD for pipelines: Adopting a DevOps mindset, data pipelines can have automated build and deployment processes, allowing changes to flow from development to production after passing standardized checks.

Workflow orchestration tools help data engineers seamlessly integrate these automation principles, eventually forming robust, self-maintaining systems with minimal downtime.

7. Distributed data processing frameworks

With the rise of big data, a single machine or server often cannot handle the volume and velocity of data on its own. Distributed data processing frameworks such as those in the Hadoop ecosystem revolutionized the field by enabling large-scale processing across clusters of commodity hardware. While Hadoop MapReduce was an early game-changer, it has largely been supplanted by more sophisticated frameworks like Spark and Flink.

The Hadoop ecosystem

Initially introduced by Doug Cutting and Mike Cafarella (inspired by Google's MapReduce paper), Apache Hadoop combined a distributed file system (HDFS) with a programming model (MapReduce). Although MapReduce was revolutionary, its batch-oriented and disk-based approach can be relatively slow for certain use cases. Nevertheless, components of the Hadoop ecosystem remain highly relevant:

- HDFS: A distributed file system designed to store large data sets reliably across clusters.

- YARN: A resource-management layer that schedules tasks across the cluster.

- Hive: A SQL-like interface for Hadoop, enabling data analysts to query large datasets.

- Pig: A scripting platform for data processing tasks.

- HBase: A NoSQL database built on top of HDFS for real-time read/write access to large tables.

Apache Spark

Apache Spark brought in-memory processing to mainstream big data, enabling orders-of-magnitude faster computations for many workloads than traditional MapReduce. Spark's unified engine covers batch, streaming, machine learning, and graph analytics. Its key components include:

- Spark SQL: Query data using an SQL-like interface.

- Spark Streaming: Process real-time data in micro-batches (extensions like Structured Streaming further refine this).

- MLlib: A scalable machine learning library for Spark, covering classification, regression, clustering, etc.

- GraphX: A graph processing library built on Spark's RDD model.

Spark integrates with various cluster managers (YARN, Mesos, Kubernetes) and can read from a wide variety of data sources (HDFS, S3, local files, JDBC). It's often used for large-scale ETL pipelines, machine learning workflows, and real-time analytics.

Apache Flink

Apache Flink is another state-of-the-art, distributed processing framework focused on stream processing with low latency and exactly-once semantics. It also supports batch processing, but its real-time streaming capability and event-driven approach make it increasingly popular for applications requiring immediate data analysis and complex event processing.

Choosing the right framework

The choice between Spark, Flink, or older Hadoop-based solutions often depends on use cases and organizational constraints:

- Spark: Great for unified batch and streaming use cases, large ML pipelines, or iterative workloads.

- Flink: Excellent for real-time, low-latency streaming, and stateful event processing.

- Hadoop MapReduce: Rarely used for new projects, but many legacy pipelines still rely on it.

- Cloud services: AWS EMR, GCP DataProc, Azure HDInsight, and Databricks simplify cluster management and often provide additional optimizations and integrations.

Modern data engineers should be comfortable with at least one distributed framework, typically Spark or Flink, due to the widespread adoption and industry demand.

8. Streaming technologies

When data must be ingested and processed with minimal latency (e.g., for real-time analytics, immediate fraud detection, or user personalization systems), streaming technologies become paramount. Real-time pipelines bring unique challenges around state management, fault tolerance, scalability, and consistent data flow.

Apache Kafka

Originally developed by LinkedIn, Apache Kafka has become the industry standard for streaming data ingestion, distribution, and storage. Kafka can handle millions of messages per second with horizontal scalability and high fault tolerance, and it is often used to decouple data producers (e.g., application logs, IoT devices) and consumers (e.g., Spark Streaming jobs, microservices).

Key Kafka concepts:

- Topics: Named channels where messages are published.

- Partitions: Each topic is split into multiple partitions, enabling concurrency and scalability.

- Producers: Send messages to Kafka topics.

- Consumers: Subscribe to topics and read messages in order.

- Brokers: Kafka servers that store messages in a fault-tolerant manner.

Streaming frameworks

To process data as it arrives, streaming frameworks such as Spark Streaming (or Structured Streaming), Apache Flink, and Apache Beam are commonly used. These frameworks integrate seamlessly with Kafka or other message queues (e.g., RabbitMQ). They allow developers to define transformations on unbounded data streams, including windowing operations (grouping data within time intervals) and aggregations.

Use cases for streaming

- Real-time analytics: Dashboards that display near-instant metrics on user behavior, system usage, or sales data.

- Event-driven microservices: Using Kafka or other brokers as the backbone for microservices that react to specific events (e.g., new user sign-up triggers a welcome email).

- Fraud detection: Quickly identifying suspicious transactions by analyzing real-time streams of financial data.

- Monitoring and anomaly detection: Spot anomalies in logs or sensor readings as soon as they occur.

By combining streaming technologies like Kafka with distributed stream processors like Flink or Spark, data engineers can build pipelines that not only ingest data in real time but also transform and deliver it to other systems (e.g., a data lake or data warehouse) with minimal delay.

9. Containerization and cluster management

Previously in the course, Docker and containerization fundamentals were introduced. Here, let's expand into how data engineering pipelines benefit from Kubernetes and other cluster management solutions, which help orchestrate containers at scale, ensuring that data processing tasks are robust and scalable.

Kubernetes fundamentals for data engineers

Kubernetes is a container orchestration platform originally developed by Google. Its building blocks include:

- Pods: The smallest deployable units in Kubernetes, typically containing one or more tightly coupled containers.

- Services: A stable endpoint and load-balancing mechanism for a set of Pods.

- Deployments: Specifications describing how Pods should be replicated and updated over time.

- StatefulSets: Similar to deployments but designed for stateful applications requiring stable network IDs and persistent storage.

In data engineering, containerization and Kubernetes can be used for:

- Running distributed frameworks (e.g., Spark on Kubernetes, Flink on Kubernetes).

- Orchestrating microservices that handle ingestion or transformation tasks.

- Isolating environment dependencies to avoid version conflicts between pipeline components.

Other cluster management tools

While Kubernetes is the dominant player, there are other solutions:

- Mesos: An older platform used in some organizations, also capable of running Spark or other big data tools.

- Nomad: HashiCorp's container orchestrator, which is sometimes preferred for simplicity.

Best practices for container orchestration

- Resource allocation: Properly configure CPU, memory, and ephemeral storage for each container, ensuring stable performance without resource contention.

- Autoscaling: Use Horizontal Pod Autoscalers or custom controllers to scale up or down based on CPU usage, memory usage, or custom metrics like queue length.

- Logging and monitoring: Integrate with systems like Prometheus and Grafana (for metrics) and EFK stack (Elasticsearch, Fluentd, Kibana) or Loki for logs.

- Security: Apply pod security policies, network policies, and secrets management to safeguard credentials, certificates, or tokens used by data pipelines.

By leveraging Kubernetes (or a similar solution), data engineers can create flexible, scalable environments that handle large volumes of data processing jobs in a containerized, modular way.

10. Data integration and transformation platforms

Beyond traditional ETL tools, many modern data integration and transformation platforms have emerged to simplify the complexity of building and maintaining robust pipelines. These platforms often abstract away some of the lower-level tasks, providing a UI or DSL (domain-specific language) for composing pipelines.

Managed cloud services

Cloud providers offer fully managed solutions for data integration:

- AWS Glue: A serverless ETL service that automatically crawls data sources, infers schemas, and provides integrated transformations via Spark.

- Azure Data Factory: A data integration service for creating ETL and ELT pipelines in the Azure environment.

- Google Cloud Dataflow: Uses the Apache Beam model for both batch and streaming jobs, integrated seamlessly with GCP services such as BigQuery and Pub/Sub.

Low-code / No-code platforms

Some platforms (e.g., Matillion, Alooma, Fivetran) provide GUIs and drag-and-drop interfaces, enabling teams to build pipelines without writing extensive code. This can be especially helpful for smaller organizations or teams that need to stand up data integrations quickly.

dbt (data build tool)

Although dbt is often considered part of the analytics engineering stack, it has gained prominence for doing transformations inside the data warehouse. dbt's approach is reminiscent of software engineering best practices (e.g., version control, testing, modular design), bridging the gap between data engineering and data analytics.

By harnessing these platforms, data engineers can speed up pipeline development, reduce the burden of infrastructure management, and focus on adding business value rather than wrestling with low-level technical details.

11. Designing data architecture for analytics

Data architecture describes how data is acquired, stored, processed, managed, and delivered across an organization. Good data architecture is crucial for efficient analytics, clear governance, and cost effectiveness. Data engineers play a vital role in designing these architectures.

Data lake vs. data warehouse

Many organizations wrestle with the choice (or combination) of data lakes and data warehouses. A data lake is a storage repository that holds raw data in its native format, usually in a flat, scalable storage system (e.g., Amazon S3, HDFS). The main advantages of data lakes include:

- Flexibility: Store data of all structures (structured, semi-structured, unstructured).

- Low cost: Typically cheaper than a data warehouse.

- Schema-on-read: Data can be interpreted at query time, allowing multiple data consumers with different needs to parse data differently.

A data warehouse, on the other hand, is a structured, integrated repository typically built on a columnar engine designed for fast SQL-based queries. By applying transformations upfront (ETL) or inside the warehouse (ELT), organizations ensure data conforms to a consistent schema. The trade-offs revolve around upfront data modeling (warehouse) vs. flexible storage (lake).

Lakehouse patterns combine both approaches, offering the structured querying performance of data warehouses on top of a data lake's raw storage paradigm. Open formats like Apache Parquet and new table formats like Delta Lake (Databricks) or Apache Iceberg allow ACID transactions and time travel on top of data lake storage, bridging the traditional gap between data lakes and warehouses.

Real-time vs. batch processing approaches

Real-time processing addresses low-latency requirements (e.g., real-time dashboards, user-facing analytics, or triggers). Batch processing is often used for large-scale transformations or machine learning training that can happen daily or hourly. A robust architecture might combine both:

- Lambda architecture: Splits data into a batch layer (for full historical data) and a speed layer (for real-time updates). A serving layer combines results for end-users.

- Kappa architecture: Simplifies processing by treating both historical and real-time data in the same streaming pipeline, reprocessing old data if needed.

Hybrid architectures and lambda/kappa patterns

Lambda is conceptually more complex because data is processed twice (batch and stream), but it can provide the best of both worlds. Kappa reduces complexity by only having one processing layer, but full reprocessing might be more challenging. The choice depends on the organization's needs for completeness vs. simplicity, the size of historical data, and tolerance for rewriting large data sets.

Balancing performance, scalability and cost

Designing an analytics data architecture requires trade-offs:

- Performance: Achieving low query latency, especially for interactive dashboards.

- Scalability: Handling spikes in data volume or concurrency.

- Cost: Cloud-based solutions typically charge for both storage and compute; on-premises solutions have hardware and operational expenses.

Modern approaches often rely on serverless or autoscaling compute resources, ephemeral clusters, and pay-as-you-go models to contain costs while meeting performance demands.

12. Advanced topics and innovations

Data engineering is a constantly evolving field, with new patterns, frameworks, and best practices emerging rapidly. Some advanced or emerging topics include:

- DataOps: An extension of DevOps concepts applied to data pipelines, focusing on continuous integration, continuous delivery, and automated testing of data transformations.

- ML-driven orchestration: Leveraging machine learning to optimize job scheduling, resource allocation, or anomaly detection in pipeline performance.

- Data Observability: Tools (e.g., Monte Carlo, Databand) that monitor data quality, metadata, and lineage in real-time, spotting anomalies or breakages before they impact downstream analytics.

- Lakehouse platforms: Tools like Databricks, Apache Hudi, Apache Iceberg, and Delta Lake all aim to unify the data lake and data warehouse paradigms, adding ACID transactions, schema evolution, and performance optimizations.

- Metadata and cataloging: Building robust data catalogs using solutions like Apache Atlas, Alation, or DataHub helps large enterprises keep track of data lineage, ownership, usage, and compliance statuses.

- Data virtualization: Instead of physically moving data from multiple sources, virtualization platforms allow you to query them collectively via a single abstraction layer, reducing overhead.

Research highlight: There is a growing body of research and conference papers (e.g., at VLDB, SIGMOD) focusing on incremental and streaming data systems, hybrid transactional/analytical processing (HTAP), and cloud-native warehouse optimization. As data continues to grow in volume and complexity, the future of data engineering hinges on efficient distributed algorithms, hardware acceleration (e.g., GPUs for database queries), and more intelligent automation throughout the pipeline.

13. Code snippets for each chapter

Below, I provide a collection of concise code snippets and short examples relevant to many of the chapters above, showcasing typical or illustrative tasks. Use them as a starting point for deeper practice, or adapt them to your production environment.

13.1. OLTP vs. OLAP: Table creation in PostgreSQL

<Code text={`

-- OLTP table (normalized design for high transactional throughput)

CREATE TABLE orders (

order_id SERIAL PRIMARY KEY,

customer_id INT NOT NULL,

order_date TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,

total_amount NUMERIC(10,2) NOT NULL

);

-- OLAP table (denormalized star schema, storing aggregated data for analytics)

CREATE TABLE fact_sales (

sale_id BIGSERIAL PRIMARY KEY,

date_key INT,

product_key INT,

store_key INT,

sale_amount NUMERIC(10,2),

sale_quantity INT

);

-- Example dimension table

CREATE TABLE dim_date (

date_key INT PRIMARY KEY,

date_value DATE,

day_of_week VARCHAR(10),

month_of_year INT,

quarter_of_year INT,

year INT

);

`}/>13.2. Simple batch data pipeline (using Python + Spark)

<Code text={`

from pyspark.sql import SparkSession

spark = SparkSession.builder \

.appName("SimpleBatchPipeline") \

.getOrCreate()

# 1. Ingest data

input_df = spark.read.option("header", True).csv("/path/to/input_data.csv")

# 2. Transform data

transformed_df = input_df \

.filter("value IS NOT NULL") \

.withColumnRenamed("old_column_name", "new_column_name")

# 3. Load data

transformed_df.write.mode("overwrite").parquet("/path/to/output_parquet")

spark.stop()

`}/>13.3. ETL: Simple incremental load

<Code text={`

import psycopg2

import json

def incremental_etl(source_data, last_run_time, connection_params):

# Filter new data based on timestamp

new_records = [r for r in source_data if r['timestamp'] > last_run_time]

conn = psycopg2.connect(**connection_params)

with conn, conn.cursor() as cur:

for record in new_records:

cur.execute("""

INSERT INTO target_table (data_col, created_at)

VALUES (%s, %s)

""", (json.dumps(record['data']), record['timestamp']))

conn.close()

`}/>13.4. Airflow DAG snippet

<Code text={`

from airflow import DAG

from airflow.operators.python_operator import PythonOperator

from datetime import datetime

def etl_function(**kwargs):

# Implement your ETL logic here

pass

default_args = {

'owner': 'data_engineer',

'start_date': datetime(2025, 3, 1),

}

with DAG('etl_dag', default_args=default_args, schedule_interval='@daily') as dag:

etl_task = PythonOperator(

task_id='run_etl',

python_callable=etl_function,

)

`}/>13.5. Workflow orchestration: Simple CLI-based job scheduling (cron job)

<Code text={`

# In a Linux crontab, schedule the job to run daily at 2 AM

0 2 * * * /usr/bin/python /path/to/etl_script.py

`}/>13.6. Using Spark on Kubernetes

<Code text={`

# Example spark-submit command for K8s cluster

spark-submit \

--master k8s://https://kubernetes.default.svc \

--deploy-mode cluster \

--name spark-k8s-example \

--conf spark.executor.instances=3 \

--conf spark.kubernetes.container.image=myrepo/spark-image:latest \

local:///opt/spark/examples/src/main/python/wordcount.py s3a://my-bucket/input.txt

`}/>13.7. Kafka streaming with Python (producer and consumer)

<Code text={`

from kafka import KafkaProducer, KafkaConsumer

producer = KafkaProducer(bootstrap_servers='localhost:9092')

producer.send('my_topic', b'This is a message')

consumer = KafkaConsumer('my_topic', bootstrap_servers='localhost:9092')

for msg in consumer:

print(f"Received: {msg.value}")

`}/>13.8. Data lake creation (AWS example)

<Code text={`

# Using AWS CLI to create an S3 bucket for a data lake

aws s3 mb s3://my-data-lake-bucket

# Copy local data to S3

aws s3 cp local_data/ s3://my-data-lake-bucket/raw/ --recursive

`}/>13.9. dbt-based transformation snippet

<Code text={`

-- Example dbt model (transform_customers.sql)

WITH raw_customers AS (

SELECT

id,

UPPER(name) AS customer_name,

created_at,

updated_at

FROM {{ source('raw_schema', 'customers') }}

)

SELECT

id,

customer_name,

created_at,

updated_at

FROM raw_customers

WHERE created_at >= '2025-01-01';

`}/>13.10. Data Architecture Demo (Lambda architecture with pseudo-code)

<Code text={`

# Pseudo-code demonstrating a lambda architecture approach

# Batch layer: daily batch job

def batch_layer(input_data, historical_store):

# Combine new data with historical data

merged_data = historical_store + input_data

# Compute batch views (aggregations)

batch_view = compute_aggregations(merged_data)

return batch_view

# Speed layer: streaming job for real-time updates

def speed_layer(stream):

real_time_view = []

for record in stream:

# Update real-time aggregations

real_time_view = update_in_memory_aggregates(real_time_view, record)

return real_time_view

# Serving layer: merges batch_view and real_time_view

def serving_layer(batch_view, real_time_view):

# Combine data to provide final query results

final_result = merge_views(batch_view, real_time_view)

return final_result

`}/>These snippets illustrate how data engineering code can vary in style and complexity across different stages and technologies. In production, you'd employ robust error handling, logging, monitoring, containerization, and testing frameworks to ensure reliability and maintainability.

An image was requested, but the frog was found.

Alt: "High-level data pipeline architecture"

Caption: "An illustration depicting the flow of data through ingestion, transformation, storage, and consumption layers"

Error type: missing path

I have now presented a comprehensive tour of data engineering, from the fundamentals of OLTP/OLAP systems and data warehouse design, through the construction of data pipelines, ETL frameworks, workflow orchestration with Apache Airflow, distributed computing with Spark and Kafka, container orchestration with Kubernetes, modern integration platforms, and advanced architectural considerations like data lakehouses. The evolving landscape of data engineering continues to be shaped by new tools and paradigms. By mastering these pillars and staying informed about emerging trends, you can build scalable, efficient, and robust data systems that power innovative analytics and machine learning solutions.