
This post is part of the Fundamental NN architectures educational series from my free course. Please keep in mind that the correct sequence of posts is outlined on the course page, while the order in the Research section can be arbitrary.

I'm also happy to announce that I've started working on standalone paid courses, so you could support my work and get cheap educational material. These courses will be of completely different quality, with more theoretical depth and niche focus, and will feature challenging projects, quizzes, exercises, video lectures and supplementary stuff. Stay tuned!

Convolutional neural networks, often referred to as CNNs or ConvNets, have gained remarkable prominence in machine learning and deep learning in recent decades. At their core, CNNs are biologically inspired models: researchers were originally motivated by discoveries in neuroscience that certain cells in the visual cortex respond strongly to localized regions of a stimulus. Building on that idea, CNNs have demonstrated a strong ability to learn hierarchical representations from images, audio signals, and other high-dimensional data sources. In this chapter, I want to dive into the rationale behind CNNs, their historical background, and the reasons for their enduring popularity. I will highlight the road from early neural network attempts to the watershed moment when they took center stage in computer vision challenges.

The conceptual underpinnings of convolution-based processing in neural architectures can be traced back to the 1960s, when Hubel and Wiesel discovered the existence of simple and complex cells in the visual cortex of the cat's brain. These specialized cells responded selectively to edges or oriented lines in specific regions of the visual field. This finding laid the neurological foundation for the idea of localized receptive fields and hierarchical feature extraction.

Fast-forward to the 1980s, when researchers like Yann LeCun started applying backpropagation-based training to feedforward neural networks with convolutional layers for image classification tasks. One of the earliest successes was a convolutional network for handwritten zip code recognition (LeCun et al., 1989), which later evolved into the LeNet-5 architecture (LeCun et al., 1998), designed primarily for digit recognition (for example, on the MNIST dataset). LeNet-5 used convolutional layers interspersed with subsampling (pooling) layers and fully connected layers to classify handwritten digits with state-of-the-art accuracy for its time. Back then, limited computational resources and insufficient data restricted the use of CNNs for more demanding tasks.

Then, a key milestone occurred in 2012, when AlexNet (Krizhevsky, Sutskever, and Hinton, NeurIPS 2012) won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) with a top-5 error of about 15.3%, compared to about 26.2% for the runner-up. AlexNet demonstrated that, given a large dataset (ImageNet) and the computational power of GPUs, deep convolutional neural networks could clearly outperform traditional computer vision methods built on hand-crafted features. This triumph catalyzed a wave of research interest in CNNs, leading to subsequent improved architectures such as VGG, GoogLeNet, ResNet, DenseNet, and more. Each iteration introduced novel methods for deeper and more efficient network designs, fueling the sustained success of convolutional neural networks in numerous tasks.

Key advantages and reasons for popularity

CNNs have become nearly synonymous with image classification, object detection, and other vision tasks because they exploit the spatial structure and local correlations in data. Convolutional filters can detect local features such as edges, corners, or textures, which then combine hierarchically to detect more global and abstract concepts. Here are some highlights of why CNNs rose to prominence:

- Localized Receptive Fields: By only connecting each neuron to a local region of the input, convolutional filters reduce the number of parameters and exploit spatially local correlations—this is less prone to overfitting compared to a fully connected structure for high-dimensional data.

- Parameter Sharing: In convolutional layers, filters are applied across different spatial positions, which reuses the same set of weights. This drastically reduces the parameter count, making the model more data-efficient and allowing deeper architectures.

- Hierarchical Feature Extraction: Stacking multiple convolutional layers yields a hierarchy of progressively more abstract and semantic features. Early layers capture simple edges and shapes, while deeper layers can capture specific object parts or entire objects.

- Translation Equivariance: Convolution is often said to provide translation invariance, but the precise property is translation equivariance: shifting the input shifts the feature map by the same amount. A feature learned in one part of the image can therefore be recognized in another location thanks to the weight sharing mechanism.

- Hardware Optimization: Modern GPU architectures (and more recent specialized hardware like TPUs) are well-suited to performing large numbers of parallel operations on arrays (tensors). Convolutions map neatly onto GPU operations, giving CNNs a massive computational advantage.

- Transfer Learning: CNNs trained on large-scale data like ImageNet can be fine-tuned easily for new tasks with fewer data, thanks to feature generality in early to mid layers, improving performance on domain-specific tasks dramatically.

These advantages, combined with large labeled datasets, improved computing power, and algorithmic advances (like better weight initializations and normalization techniques), propelled CNNs to become the de facto approach for many computer vision tasks—and indeed a building block in many other areas, including speech recognition, text classification (via character embeddings), and beyond.
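To make the effect of local connectivity and parameter sharing concrete, here is a small back-of-the-envelope comparison. The image size, number of units, and filter count are illustrative assumptions of mine, not values tied to any particular architecture.

```python
# Compare parameter counts for a single layer applied to a 224x224 RGB image.
# All sizes below are illustrative assumptions.
height, width, channels = 224, 224, 3

# Fully connected: each of the 64 units is connected to every input value.
dense_units = 64
dense_params = height * width * channels * dense_units + dense_units  # weights + biases

# Convolutional: 64 filters of size 3x3 spanning all input channels.
# The same filter weights are reused at every spatial position.
num_filters, kernel = 64, 3
conv_params = kernel * kernel * channels * num_filters + num_filters  # weights + biases

print(f"dense layer parameters: {dense_params:,}")
print(f"conv layer parameters:  {conv_params:,}")
```

Under these assumptions the dense layer needs several million weights, while the convolutional layer needs fewer than two thousand, and its parameter count does not grow with the image resolution.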

2. Core concepts

In this section, I'll step through the fundamental ideas that make convolutional neural networks unique. The essence is the convolution operation, which can be understood as a small filter scanning across an input (such as an image) to detect features. Then we'll examine hyperparameters like stride and padding that significantly influence the network's behavior and dimension transformations. We'll cover pooling operations, activation functions, and also get into the mechanics of how backpropagation is applied to CNN layers. Altogether, these core concepts form the bedrock on which modern CNN architectures stand.

Convolution operation and feature maps

In standard fully connected neural networks, every neuron is connected to all elements of the previous layer. By contrast, in CNNs, the fundamental building block is the convolutional layer, where a set of learnable filters (or kernels) is convolved with the input. If we consider a single filter of size $k \times k$ applied to a 2D input (like a grayscale image of size $H \times W$), the convolution operation can be expressed as follows:

$$
S(i, j) = \sum_{m=0}^{k-1} \sum_{n=0}^{k-1} X(i + m,\, j + n)\, K(m, n)
$$

Where:

- $X$ is the input (an image or an activation map from a previous layer).

- $K$ is the filter or kernel of size $k \times k$.

- $(i, j)$ are spatial coordinates in the output feature map $S$.

- $(m, n)$ index the kernel's rows and columns.

This summation is done element-wise, and a bias term is often added at the end. Each filter in a convolutional layer typically slides (convolves) across the entire input volume, producing a 2D feature map capturing the presence of that filter's learned pattern at each location. If the input has multiple channels (for instance, an RGB image has three channels), the filter extends across all channels, and a similar summation is performed along that channel dimension. The output of applying multiple filters is stacked channel-wise, forming a 3D volume known as the output feature map.

Importantly, when the network is trained, these filters are updated via gradient descent (or related optimization algorithms), so they adapt to detect features that minimize the overall loss function. After convolution, a non-linear activation function is usually applied, and the result is fed forward to subsequent layers.
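The sliding-window computation described above can be sketched in a few lines of NumPy. This is a deliberately naive version (single channel, no padding, stride 1) meant only to show the mechanics; the function name `conv2d_single` and the toy image are my own choices. Like deep learning frameworks, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
import numpy as np

def conv2d_single(x, kernel, bias=0.0):
    """Slide one 2D kernel over a 2D input (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = x.shape[0] - kh + 1
    out_w = x.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the kernel with the current patch, then sum.
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * kernel) + bias
    return out

# Toy image: dark left half, bright right half (a vertical edge).
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# A Sobel-like kernel that responds to horizontal intensity changes.
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

feature_map = conv2d_single(image, sobel_x)
print(feature_map)  # non-zero only in the columns whose window straddles the edge
```

In a trained CNN the kernel values are not hand-picked like this; they are learned, but the forward computation is the same.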

Strides, padding, and other hyperparameters

In implementing a convolutional layer, several hyperparameters govern the behavior of the convolution operation:

- Kernel size ($k$): Defines the spatial size of the convolution filter. Common choices include 3×3, 5×5, or 7×7 for image tasks. Smaller filters typically capture fine-grained features and require fewer parameters. Larger filters capture more context but can be more expensive in terms of parameters and computation.

- Stride ($s$): Dictates how far the filter moves each time it is applied. A stride of 1 means the filter is applied at every adjacent position; a stride of 2 means the filter skips every other position, approximately halving each spatial dimension of the output. A larger stride leads to a smaller output size and can reduce computational cost but also might skip potentially important details.

- Padding ($p$): Often the input is padded with zeros (or reflections, in some cases) to preserve spatial dimensions during convolution. For a $k \times k$ filter with odd $k$ and a stride of 1, a padding of $p = (k - 1)/2$ makes the output dimension equal to the input dimension. Padding also lets edge pixels contribute to more output positions and prevents the output volume from shrinking as the network goes deeper.

- Dilation: Dilation (or atrous convolution) effectively spaces out the kernel elements to capture a larger receptive field without increasing the number of parameters. It is sometimes used in tasks requiring large receptive fields (e.g., semantic segmentation).

These hyperparameters have a direct impact on the shape of the output feature maps, the computational cost, and the network's capacity to capture details at different scales.
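The relationship between these hyperparameters and the output size along one spatial axis follows the standard formula $\lfloor (n + 2p - d(k - 1) - 1) / s \rfloor + 1$, where $n$ is the input size, $k$ the kernel size, $s$ the stride, $p$ the padding on each side, and $d$ the dilation. A minimal helper (the name `conv_output_size` is my own) makes it easy to check a design by hand:

```python
def conv_output_size(n, k, s=1, p=0, d=1):
    """Output length along one spatial axis of a convolution.

    n: input size, k: kernel size, s: stride,
    p: padding added on each side, d: dilation.
    """
    effective_k = d * (k - 1) + 1  # span covered by a dilated kernel
    return (n + 2 * p - effective_k) // s + 1

print(conv_output_size(28, 3))            # no padding: output shrinks by k - 1
print(conv_output_size(28, 3, p=1))       # padding (k - 1) / 2 keeps the size
print(conv_output_size(28, 3, s=2, p=1))  # stride 2 roughly halves the size
print(conv_output_size(28, 3, d=2))       # dilation 2 behaves like a 5x5 footprint
```

The same function works for pooling layers if you pass the pooling window as `k` and the pooling stride as `s`.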

Pooling mechanisms (max, average, global)

Pooling layers are typically interleaved between convolutional layers to reduce spatial dimensions, thereby summarizing regions of the feature maps. This not only helps to lower the computational burden and number of parameters but also provides some translational invariance by aggregating features within local neighborhoods.

- Max pooling: Takes the maximum value within each pooling region. For instance, a 2×2 max pooling operation with stride 2 returns the maximum value of every 2×2 block in the input.

- Average pooling: Computes the average value within each pooling region. Historically, average pooling was common in some early architectures (e.g., LeNet-5), but max pooling tends to perform better in many modern CNNs for tasks like image classification.

- Global pooling: A special case that aggregates over the entire spatial dimension, resulting in a single feature vector per channel. Global average pooling is sometimes used in place of fully connected layers at the end of a CNN (e.g., in certain architectures like Network in Network, or in modern classification networks). This drastically reduces parameter count and can help mitigate overfitting.

Pooling helps the network focus on the most salient features by discarding less relevant details. However, the choice between max and average pooling (and whether or not to even include pooling) depends on the specific application. Some advanced architectures rely on strided convolutions instead of pooling or adopt a combination of multiple approaches.
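The three pooling variants above can be illustrated on a tiny feature map. This is a plain NumPy sketch with a helper name (`pool2d`) and example values of my own; real frameworks implement the same idea in vectorized form.

```python
import numpy as np

def pool2d(x, size=2, stride=2, mode="max"):
    """Max or average pooling over a single 2D feature map."""
    if mode not in ("max", "avg"):
        raise ValueError("mode must be 'max' or 'avg'")
    reduce_fn = np.max if mode == "max" else np.mean
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            window = x[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = reduce_fn(window)
    return out

fmap = np.array([[1, 3, 2, 0],
                 [4, 2, 1, 1],
                 [0, 1, 5, 6],
                 [2, 2, 7, 8]], dtype=float)

max_pooled = pool2d(fmap, mode="max")  # strongest response in each 2x2 block
avg_pooled = pool2d(fmap, mode="avg")  # mean response in each 2x2 block
global_avg = fmap.mean()               # global average pooling: one value per channel

print(max_pooled)
print(avg_pooled)
print(global_avg)
```

Note how max pooling keeps only the strongest activation per block, while average pooling blends all four values.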

Activation functions in CNNs

After each convolution (and typically before pooling), a non-linear activation function is applied so that the network can learn complex, non-linear mappings. In the early days, $\tanh$ or sigmoid activations were used, but these have largely been superseded by the ReLU (Rectified Linear Unit) family:

- ReLU: $f(x) = \max(0, x)$, which sets negative values to zero. ReLU mitigates the vanishing gradient problem encountered with sigmoid or tanh in deeper networks, because its gradient is exactly 1 for positive inputs, which enables training of much deeper models.

- Leaky ReLU: $f(x) = \max(\alpha x, x)$ with a small slope $\alpha$ (e.g., 0.01) attempts to mitigate the so-called "dying ReLU" problem by allowing a small, non-zero gradient for negative values.

- ELU, SELU, GELU, etc.: Numerous variations have been proposed, each with theoretical or empirical advantages in certain contexts. SELU (Scaled Exponential Linear Unit) is designed for self-normalizing neural networks. GELU (Gaussian Error Linear Unit) is sometimes popular in transformers, although it can be used in CNNs as well.

The choice of activation can affect training stability, convergence speed, and final accuracy, but ReLU and its variants remain some of the most widely used in CNN applications.
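For reference, here is how a few members of the ReLU family behave on the same inputs. These are straightforward NumPy definitions; the default values for `alpha` are common choices that I am assuming, not values prescribed by the text.

```python
import numpy as np

def relu(x):
    """max(0, x): negative inputs are set to zero."""
    return np.maximum(0.0, x)

def leaky_relu(x, alpha=0.01):
    """Like ReLU, but negative inputs keep a small slope alpha."""
    return np.where(x > 0, x, alpha * x)

def elu(x, alpha=1.0):
    """Smooth exponential curve for negative inputs, identity for positive ones."""
    # np.minimum keeps exp() from overflowing on large positive inputs.
    return np.where(x > 0, x, alpha * (np.exp(np.minimum(x, 0.0)) - 1.0))

z = np.array([-2.0, -0.5, 0.0, 1.5])
print("relu      ", relu(z))
print("leaky_relu", leaky_relu(z))
print("elu       ", elu(z))
```

All three agree for positive inputs; they differ only in how much signal (and gradient) they let through for negative ones.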

How backpropagation and parameter updates work in CNNs

The backpropagation algorithm in CNNs is conceptually the same as in fully connected networks but with the additional complexity of weight sharing and local connectivity. During the forward pass:

- Each filter is convolved over the input.

- An activation function is applied to each element of the resulting feature maps.

- Downstream layers (pooling, additional convolutions, fully connected layers, etc.) transform this output.

- The final layer typically produces a prediction such as a probability distribution over classes.

During the backward pass:

- The gradient of the loss with respect to the output of each layer is computed (using chain rule).

- At the convolutional layer, these gradients are aggregated with respect to each filter and position where the filter was applied. Since the same filter is slid across the input (weight sharing), the gradient for the filter is accumulated over all spatial locations.

- The filters' weights and biases are updated accordingly, typically using optimizers like stochastic gradient descent (SGD), Adam, RMSProp, etc.

In code, frameworks like TensorFlow, PyTorch, and Keras handle this process automatically under the hood, so you only need to define the forward pass, the loss function, and the optimizer. However, understanding how backprop works is crucial for diagnosing problems like exploding or vanishing gradients, especially in deeper CNN architectures.
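To see what "accumulating the gradient over all spatial locations" means in practice, the sketch below computes the kernel gradient of a single-channel convolution by hand and checks one entry against a finite-difference estimate. The toy loss $L = \tfrac{1}{2}\sum S(i,j)^2$, the helper names, and the learning rate are assumptions I chose for illustration.

```python
import numpy as np

def conv2d_valid(x, k):
    """Single-channel convolution (no padding, stride 1)."""
    kh, kw = k.shape
    out = np.empty((x.shape[0] - kh + 1, x.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def kernel_gradient(x, grad_out, kernel_shape):
    """dL/dK: because the kernel is shared, every output position
    contributes (upstream gradient * input patch) to the same weights."""
    kh, kw = kernel_shape
    grad_k = np.zeros(kernel_shape)
    for i in range(grad_out.shape[0]):
        for j in range(grad_out.shape[1]):
            grad_k += grad_out[i, j] * x[i:i + kh, j:j + kw]
    return grad_k

def loss_fn(x, k):
    """Toy loss: half the sum of squared outputs, so dL/dS equals S."""
    return 0.5 * np.sum(conv2d_valid(x, k) ** 2)

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 6))
k = rng.normal(size=(3, 3))

analytic = kernel_gradient(x, conv2d_valid(x, k), k.shape)

# Finite-difference check on one kernel entry.
eps = 1e-6
k_plus, k_minus = k.copy(), k.copy()
k_plus[1, 2] += eps
k_minus[1, 2] -= eps
numeric = (loss_fn(x, k_plus) - loss_fn(x, k_minus)) / (2 * eps)
print(analytic[1, 2], numeric)

# One plain SGD step on the kernel (learning rate is an arbitrary choice).
learning_rate = 0.001
k_updated = k - learning_rate * analytic
```

Automatic differentiation in TensorFlow or PyTorch produces the same gradient; the point here is only to show where the summation over positions comes from.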

Other considerations

Beyond the above fundamentals, some additional topics often interwoven with the CNN architecture discussion include:

- Padding modes: Zero-padding is the most common, but reflection or replicate padding can be used to reduce boundary artifacts.

- Weight initialization: He initialization is typically recommended for ReLU-based networks, and Glorot (Xavier) initialization for networks with tanh, sigmoid, or linear activations. Both help control signal variance across layers.

- Regularization: CNNs have a large number of parameters; hence, techniques like weight decay (L2 regularization), dropout, or data augmentation are often employed to reduce overfitting.

- Normalization: Normalizing activations can speed up training and improve stability, which leads into our next chapter that covers batch normalization in detail.
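As a small illustration of the initialization schemes mentioned above, here is a NumPy sketch of He normal and Glorot uniform initialization for a convolutional kernel. The kernel shape layout `(height, width, in_channels, out_channels)` follows the Keras convention; the helper names and the example shape are my own.

```python
import numpy as np

def he_normal(shape, rng):
    """He initialization: zero-mean normal with std = sqrt(2 / fan_in)."""
    kh, kw, c_in, c_out = shape
    fan_in = kh * kw * c_in
    return rng.normal(0.0, np.sqrt(2.0 / fan_in), size=shape)

def glorot_uniform(shape, rng):
    """Glorot (Xavier) initialization: uniform in [-limit, limit],
    with limit = sqrt(6 / (fan_in + fan_out))."""
    kh, kw, c_in, c_out = shape
    fan_in = kh * kw * c_in
    fan_out = kh * kw * c_out
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=shape)

rng = np.random.default_rng(42)
kernel_shape = (3, 3, 64, 128)  # 3x3 kernel, 64 input channels, 128 filters
w_he = he_normal(kernel_shape, rng)
w_glorot = glorot_uniform(kernel_shape, rng)

print("He std:      ", w_he.std())
print("Glorot range:", w_glorot.min(), w_glorot.max())
```

Keras applies Glorot uniform to `Conv2D` and `Dense` kernels by default, so switching to He initialization for ReLU networks is an explicit choice.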

3. Building a CNN

Having established the core ideas, let's look at the main building blocks in detail. A typical CNN can be thought of as a combination of multiple stages of convolutional + (optionally) pooling + normalization layers, culminating in a few fully connected layers. We will also discuss batch normalization and dropout for better training stability and generalization. Finally, we'll walk through a simple example in TensorFlow/Keras that illustrates how to build a CNN step by step.

Convolutional layer in detail

A convolutional layer is characterized by the following parameters:

- Number of filters ($F$): The layer will learn $F$ distinct filters that each produce a distinct 2D feature map output. These feature maps are stacked depth-wise, forming the output volume with shape $H_{out} \times W_{out} \times F$.

- Filter size ($k \times k$): Determines the spatial region in which the layer will scan. As mentioned, 3×3 filters are extremely common in modern architectures.

- Stride ($s$), Padding ($p$), and other hyperparameters as discussed.

- Non-linear activation: Usually applied right after the convolution.

In some architectures, you might find grouped convolutions (splitting channels into groups processed separately) or depthwise separable convolutions (factorizing standard convolution into depthwise and pointwise convolutions). These advanced techniques reduce computational burden and can help the network learn more efficiently.

When designing a CNN from scratch, I usually begin with a small set of filters in early layers. Deeper into the network, the number of channels typically increases (e.g., doubling with each major block in some architectures). This pattern recognizes the fact that deeper layers can abstract more complex features but also need more representational capacity.
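To get a feel for how channel counts drive the cost of a layer, and why depthwise separable convolutions are attractive, the following sketch counts parameters for both variants. The function names and the 64-to-128-channel example are illustrative assumptions.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Standard convolution: each of c_out filters spans k x k x c_in weights."""
    return k * k * c_in * c_out + (c_out if bias else 0)

def depthwise_separable_params(k, c_in, c_out, bias=True):
    """Depthwise (one k x k filter per input channel) followed by
    pointwise (1 x 1 convolution that mixes channels)."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise

standard = conv_params(3, 64, 128)
separable = depthwise_separable_params(3, 64, 128)

print(f"standard 3x3 conv, 64 -> 128 channels:  {standard:,} parameters")
print(f"depthwise separable, 64 -> 128 channels: {separable:,} parameters")
```

For this configuration the separable version uses roughly an eighth of the parameters, which is the kind of saving that architectures such as MobileNet build on.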

Pooling layer in detail

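The pooling layer is relatively simple. For max pooling, for instance, consider a $k \times k$ region of the input whose top-left corner is at $(i \cdot s,\, j \cdot s)$:

$$
Y(i, j) = \max_{0 \le m,\, n < k} X(i \cdot s + m,\; j \cdot s + n)
$$

Where:

- $X$ is the input feature map.

- $s$ is the stride for pooling.

- $k$ is the pooling kernel size.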

The effect is a reduction in the spatial dimension. Pooling drastically lowers the number of activations that flow into subsequent layers, thereby improving computational efficiency and offering local translational invariance.

Fully connected layer

Typically, near the network's output, the spatial dimension becomes sufficiently small that the feature maps can be "flattened" into a single vector. That vector then feeds into one or more fully connected layers (also called dense layers in frameworks like Keras). For classification, the final layer might be a fully connected layer with $C$ outputs, where $C$ is the number of classes, followed by a softmax. In older CNN architectures (like AlexNet or VGG), these fully connected layers often contain the majority of network parameters. Modern architectures sometimes replace the last fully connected layer with global average pooling and a smaller classifier to reduce overfitting.

Batch normalization for CNN

Batch normalization (Ioffe and Szegedy, 2015) was a breakthrough technique that normalizes intermediate activations. It was proposed as a way to reduce "internal covariate shift" by normalizing with mini-batch statistics, thus stabilizing and speeding up training. Mathematically, for each activation channel $c$ in a layer, batch normalization computes:

$$
\hat{x}_c = \frac{x_c - \mu_c}{\sqrt{\sigma_c^2 + \epsilon}}, \qquad y_c = \gamma_c \hat{x}_c + \beta_c
$$

Where:

- $x_c$ is the pre-activation value of channel $c$.

- $\mu_c$ and $\sigma_c^2$ are the mean and variance of channel $c$ computed over the mini-batch (and, in convolutional layers, over all spatial positions).

- $\gamma_c$ and $\beta_c$ are learnable parameters that scale and shift the normalized value.

- $\epsilon$ is a small constant to avoid division by zero.

Batch normalization is typically inserted after the convolutional operation and before the non-linear activation (although some variants place it after activation). It provides a more stable gradient flow and often allows for higher learning rates, reducing the sensitivity to weight initialization.
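Here is a minimal NumPy sketch of the training-time forward pass of batch normalization for a convolutional feature map in `(batch, height, width, channels)` layout. It deliberately omits the running averages that real implementations keep for inference; the function name and the toy data are my own assumptions.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-time batch norm for x of shape (N, H, W, C).

    Statistics are computed per channel, over the batch and both
    spatial axes. Running averages for inference are not tracked here.
    """
    mu = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma * x_hat + beta  # learnable per-channel scale and shift

rng = np.random.default_rng(0)
# Toy activations with a large offset and spread: 8 images, 4x4 maps, 3 channels.
acts = rng.normal(loc=3.0, scale=5.0, size=(8, 4, 4, 3))
gamma = np.ones(3)   # scale initialised to 1
beta = np.zeros(3)   # shift initialised to 0

normed = batch_norm_train(acts, gamma, beta)
print("per-channel mean:", normed.mean(axis=(0, 1, 2)))
print("per-channel std: ", normed.std(axis=(0, 1, 2)))
```

With `gamma = 1` and `beta = 0` each channel comes out with approximately zero mean and unit variance; during training the network is free to learn other values.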

Dropout for regularization

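Dropout is another important strategy used in CNNs to address overfitting. The idea is to "drop" (i.e., zero out) a random subset of activations during training, preventing the network from relying too heavily on any particular set of co-adapted features. In fully connected layers, dropout is often placed right before or after the layer. Convolutional dropout can also be applied, though its usage patterns can differ. The standard dropout formula for an activation $a$ is:

$$
\tilde{a} = m \cdot a, \qquad m \sim \mathrm{Bernoulli}(1 - p)
$$

Where $p$ is the dropout rate. This means that, on average, a fraction $p$ of the neurons is set to zero. During inference, no units are dropped; instead, the activations are scaled by $1 - p$ so that their expected value matches what the next layer saw during training. Most frameworks, including Keras, implement the equivalent "inverted dropout", which scales the kept activations by $1 / (1 - p)$ during training and leaves inference untouched.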
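A short NumPy sketch of the "inverted" variant of dropout, the one that rescales during training so that nothing needs to change at inference time. The function signature is my own; it is not the Keras API.

```python
import numpy as np

def dropout(a, rate, rng, training=True):
    """Inverted dropout: zero out a fraction `rate` of activations during
    training and scale the survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return a  # inference: activations pass through unchanged
    keep_prob = 1.0 - rate
    mask = rng.random(a.shape) < keep_prob  # True where the unit is kept
    return a * mask / keep_prob

rng = np.random.default_rng(0)
activations = np.ones((1000, 100))

dropped = dropout(activations, rate=0.5, rng=rng)
print("fraction zeroed:", np.mean(dropped == 0.0))
print("mean activation:", dropped.mean())  # stays close to the original mean of 1

at_inference = dropout(activations, rate=0.5, rng=rng, training=False)
```

Because the survivors are scaled up, the expected activation is the same in training and inference, which is why the final predictions remain consistent.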

Step-by-step example with code snippets in TensorFlow/Keras

Let's walk through a straightforward example of constructing a CNN in TensorFlow/Keras for image classification (e.g., MNIST). This is a relatively simple dataset, but the mechanics can be extended to more complex tasks.

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

# 1. Load dataset
(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

# MNIST images are 28x28, grayscale. Reshape for a channel dimension.
x_train = x_train.reshape((-1, 28, 28, 1)).astype("float32") / 255.0
x_test = x_test.reshape((-1, 28, 28, 1)).astype("float32") / 255.0

# 2. Define a simple CNN model
model = keras.Sequential([
    layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu',
                  input_shape=(28, 28, 1)),
    layers.MaxPooling2D(pool_size=(2, 2)),
    layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu'),
    layers.MaxPooling2D(pool_size=(2, 2)),
    layers.Flatten(),
    layers.Dense(128, activation='relu'),
    layers.Dropout(0.5),
    layers.Dense(10, activation='softmax')
])

# 3. Compile the model
model.compile(
    optimizer='adam',
    loss='sparse_categorical_crossentropy',
    metrics=['accuracy']
)

# 4. Train the model
model.fit(x_train, y_train, batch_size=64, epochs=5, validation_split=0.1)

# 5. Evaluate on test set
test_loss, test_acc = model.evaluate(x_test, y_test)
print(f"Test Accuracy: {test_acc}")
```

Here's the step-by-step breakdown:

- Dataset: We load the MNIST dataset from Keras. Each image is 28×28 pixels, and there is a single channel (grayscale). We normalize pixel values to the range [0, 1] by dividing by 255.

- Model Architecture:

  - Conv2D(32 filters, 3×3, ReLU): The first convolutional layer takes the input (28×28×1) and outputs 32 feature maps. The kernel size is 3×3.

  - MaxPooling2D(2×2): Reduces each spatial dimension by a factor of 2.

  - Conv2D(64 filters, 3×3, ReLU): A second convolutional layer with more filters.

  - MaxPooling2D(2×2): Further dimensionality reduction.

  - Flatten: Flattens the 2D feature maps to a 1D vector.

  - Dense(128, ReLU): A fully connected layer of 128 hidden units.

  - Dropout(0.5): Randomly drops 50% of the units to mitigate overfitting.

  - Dense(10, softmax): Outputs probabilities for 10 classes.

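- Compilation: We use the Adam optimizer and sparse categorical cross-entropy, which is the standard cross-entropy loss for multi-class classification when the labels are integer class indices (as they are in MNIST). We also track accuracy as a metric.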

- Training: We train for 5 epochs with a batch size of 64 and a validation split of 10%.

- Evaluation: Finally, we evaluate on the held-out test set.
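It can be instructive to trace the tensor shapes and parameter counts of this model by hand before running it. The sketch below does that in plain Python, assuming the Keras defaults used in the example (no padding in `Conv2D`, pooling stride equal to the pool size); the helper `out_size` is my own.

```python
def out_size(n, k, s=1, p=0):
    """Output length along one spatial axis for a conv or pooling window."""
    return (n + 2 * p - k) // s + 1

h = w = 28
c = 1
summary = []  # (layer name, output shape, parameter count)

# Conv2D(32, 3x3), no padding
h, w = out_size(h, 3), out_size(w, 3)
summary.append(("conv1", (h, w, 32), 3 * 3 * c * 32 + 32))
c = 32

# MaxPooling2D(2x2), stride 2
h, w = out_size(h, 2, s=2), out_size(w, 2, s=2)
summary.append(("pool1", (h, w, c), 0))

# Conv2D(64, 3x3), no padding
h, w = out_size(h, 3), out_size(w, 3)
summary.append(("conv2", (h, w, 64), 3 * 3 * c * 64 + 64))
c = 64

# MaxPooling2D(2x2), stride 2
h, w = out_size(h, 2, s=2), out_size(w, 2, s=2)
summary.append(("pool2", (h, w, c), 0))

# Flatten, Dense(128), Dropout, Dense(10)
flat = h * w * c
summary.append(("flatten", (flat,), 0))
summary.append(("dense", (128,), flat * 128 + 128))
summary.append(("dropout", (128,), 0))
summary.append(("output", (10,), 128 * 10 + 10))

total_params = sum(params for _, _, params in summary)
for name, shape, params in summary:
    print(f"{name:8s} {str(shape):14s} {params:>8,}")
print(f"total parameters: {total_params:,}")
```

Most of the parameters sit in the first dense layer rather than in the convolutions. You can compare the result against `model.summary()`.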

Hyperparameter tuning

Designing CNN architectures involves many hyperparameters and design choices. Here are some you might experiment with:

- Number of filters per layer and how this number grows or remains constant across layers.

- Filter size: Trying different kernel sizes (e.g., 3×3 vs. 5×5).

- Pooling strategy: Using max pooling vs. average pooling vs. strided convolutions, plus the size and stride of pooling.

- Network depth: The number of convolutional + pooling layers. Deeper networks can learn more features but are more prone to overfitting or vanishing gradients if not properly regularized and normalized.

- Learning rate: Possibly scheduling or decaying the learning rate as training progresses, or using advanced optimizers such as Adam, SGD with momentum, RMSProp, etc.

- Batch size: Larger batch sizes may require careful tuning of the learning rate. Smaller batches can cause noisy gradients but can improve generalization in some scenarios.

- Regularization: Varying dropout rates, weight decay, or data augmentation strategies.

In practice, systematic hyperparameter optimization (grid search, random search, Bayesian optimization, or more sophisticated methods) can be employed to find the best combination for a given dataset and problem setup.
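As a rough illustration of random search, the sketch below samples configurations from a small search space and keeps the best one. The search space, the function names, and especially `toy_evaluate` are placeholders of mine: in a real experiment, the evaluation function would build and train a CNN with the given configuration and return its validation accuracy.

```python
import random

# Hypothetical search space; adjust to your own model and budget.
search_space = {
    "num_filters": [16, 32, 64],
    "kernel_size": [3, 5],
    "dropout_rate": [0.2, 0.3, 0.5],
    "batch_size": [32, 64, 128],
}

def sample_config(rng):
    """Draw one random configuration from the search space."""
    config = {name: rng.choice(values) for name, values in search_space.items()}
    # Learning rates are usually sampled on a log scale (here 1e-4 to 1e-2).
    config["learning_rate"] = 10 ** rng.uniform(-4, -2)
    return config

def random_search(evaluate, n_trials, seed=0):
    """Evaluate n_trials random configurations and return the best one."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = sample_config(rng)
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

def toy_evaluate(config):
    """Stand-in objective so the sketch runs on its own.
    Replace with: train a model, return validation accuracy."""
    return -abs(config["dropout_rate"] - 0.3) - abs(config["kernel_size"] - 3)

best_config, best_score = random_search(toy_evaluate, n_trials=20)
print(best_config, best_score)
```

Random search is often a reasonable first choice because it is trivial to parallelize and tends to cover important hyperparameters better than a grid of the same size.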

4. Transfer learning in CNNs

Transfer learning is a technique that leverages a model pre-trained on a large dataset (e.g., ImageNet) and adapts it to a different target task, typically with fewer data. This approach is extremely powerful in domains where gathering a sufficiently large labeled dataset from scratch is challenging or impossible. The intuition is that the early layers of a CNN learn general features (e.g., edges, corners, textures), which can be reused across various image-recognition tasks, while the later layers learn more domain-specific features.

The general steps are:

- Select a pre-trained model: Popular choices include VGG16, ResNet50, Inception, etc., all pre-trained on ImageNet.

- Freeze early layers: The first few layers are left unmodified (their weights are "frozen") to preserve their learned representations.

- Replace and fine-tune later layers: Remove the old classification layer(s) and add a new output layer (with the number of classes for the new task). Optionally, unfreeze some top layers if deeper fine-tuning is needed.

- Train on the new data: Since fewer parameters are learned from scratch, the risk of overfitting is reduced.

Here's a simplified code snippet demonstrating how you might fine-tune a pre-trained model from Keras:

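```python
from tensorflow import keras
from tensorflow.keras import layers

num_classes = 5  # placeholder: set to the number of classes in your task

base_model = keras.applications.VGG16(
    weights='imagenet',
    include_top=False,  # exclude the ImageNet classifier
    input_shape=(224, 224, 3)
)

# Freeze base_model so its pre-trained weights are not updated
for layer in base_model.layers:
    layer.trainable = False

# Add new trainable layers on top
x = layers.Flatten()(base_model.output)
x = layers.Dense(256, activation='relu')(x)
x = layers.Dropout(0.5)(x)
outputs = layers.Dense(num_classes, activation='softmax')(x)

model = keras.Model(inputs=base_model.input, outputs=outputs)

# Compile and train
# 'categorical_crossentropy' expects one-hot labels of shape (N, num_classes)
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

# new_data and new_labels are placeholders for your own dataset:
# images of shape (N, 224, 224, 3), preprocessed with
# keras.applications.vgg16.preprocess_input, and matching one-hot labels
model.fit(new_data, new_labels, epochs=10, batch_size=32)
```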

This simple strategy allows one to benefit from powerful feature representations learned on large-scale data. It's particularly common in practice for tasks in medical imaging, fine-grained object recognition, and more, where data is either rare or expensive to label.

5. Motivation for more advanced CNN architectures (transition to "CNN architecture, part 2")

To wrap up this introduction to CNN architectures, it's worth mentioning that the straightforward feedforward pattern we've discussed—convolution, pooling, normalization, dropout, and fully connected layers—serves as the foundation of more advanced architectures. Over time, researchers have sought ways to make CNNs deeper, more parameter-efficient, and better regularized. Innovations like residual connections (ResNet), inception modules, dense connections (DenseNet), and various attention mechanisms have significantly boosted performance and training stability in tasks ranging from simple classification to object detection, instance segmentation, and beyond.

In "CNN architecture, pt. 2", I'll dive into these more complex patterns. We'll explore how skip connections mitigate vanishing gradients in deep networks, how inception modules help the network look at multiple filter sizes in parallel, how depthwise separable convolutions can drastically reduce computational overhead, and other state-of-the-art ideas that push the boundaries of CNN performance.

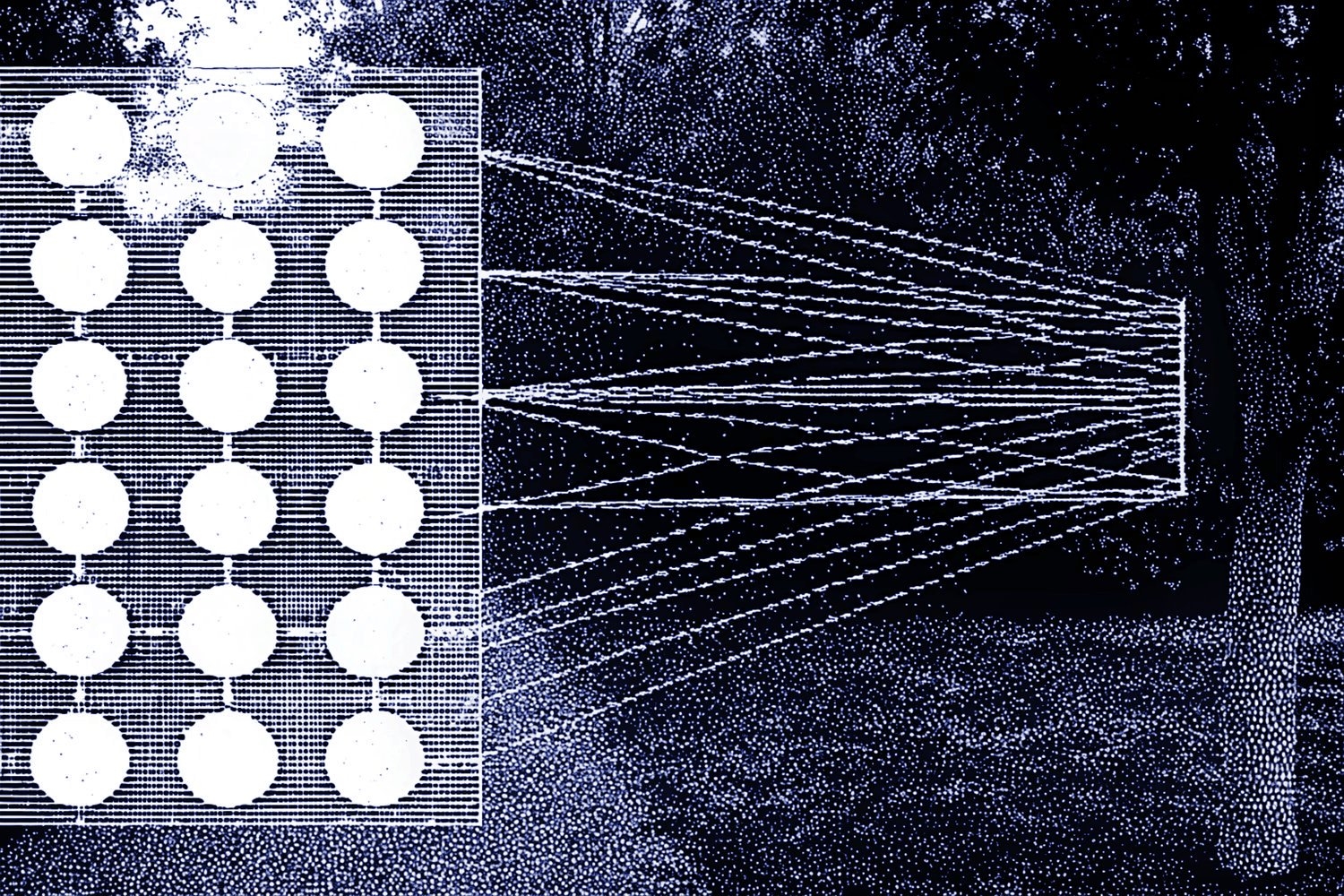

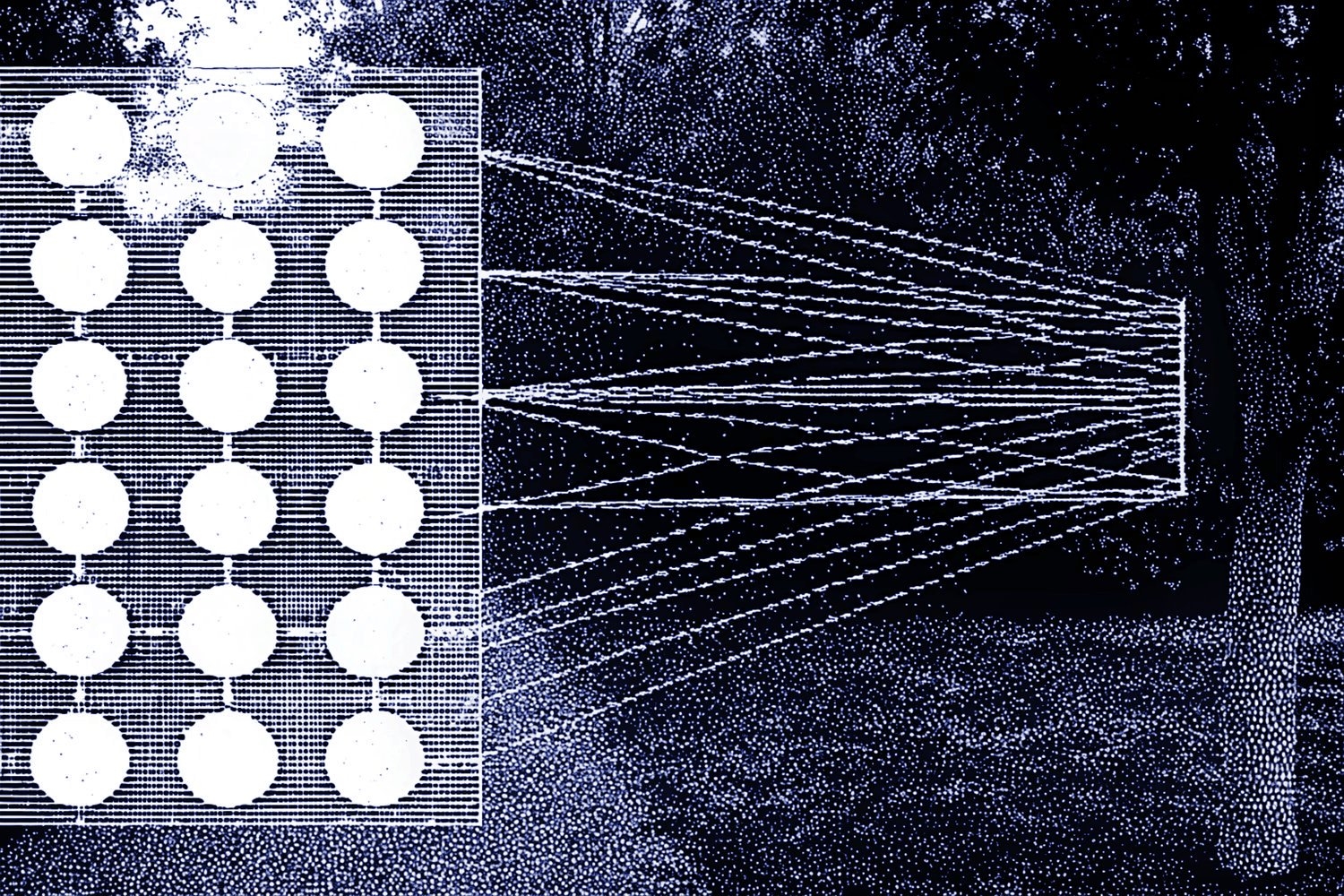

Figure: An illustrative diagram of a simple CNN with convolution, activation, pooling, and fully connected layers.

Ultimately, understanding these advanced architectures is paramount if your work involves state-of-the-art image tasks, specialized object detection systems, or more efficient CNN designs for real-time processing on devices with limited computational resources. The core principles remain the same—localized feature extraction via convolution, dimensionality reduction via pooling, progressive abstraction in deeper layers—but the structural variations have proved to be game changers.

I believe this thorough overview provides the necessary building blocks for you to fully grasp modern convolutional neural networks, not only as black-box function approximators but as architectures that elegantly exploit the spatial correlations in their input. Equipped with knowledge of the convolution operation, pooling strategies, activation functions, backpropagation mechanics, and regularization techniques, you are now ready to tackle more intricate designs and push the frontiers of CNN applications.