
This post is a part of the Mathematics educational series from my free course. Please keep in mind that the correct sequence of posts is outlined on the course page, while it can be arbitrary in Research.

I'm also happy to announce that I've started working on standalone paid courses, so you could support my work and get cheap educational material. These courses will be of completely different quality, with more theoretical depth and niche focus, and will feature challenging projects, quizzes, exercises, video lectures and supplementary stuff. Stay tuned!

Calculus lies at the heart of modern data science and machine learning, offering a rigorous toolkit for understanding how models change in response to their parameters. Whenever you hear about gradient descent, backpropagation, or continuous optimization methods, you are seeing calculus in action. In deep learning, for instance, the entire training process hinges on taking derivatives of the loss function with respect to millions (or billions) of parameters. Even in simpler machine learning methods, we often rely on partial derivatives to update parameters, evaluate sensitivities, or find maxima and minima. Consequently, a solid understanding of calculus provides both intuitive and formal perspectives on why certain ML methods work.

Throughout this article, we explore the fundamentals of single-variable and multivariate calculus, connect them to probabilities and expectations, then conclude with a deep dive into how automatic differentiation is implemented in popular libraries. We also discuss more advanced topics like differential geometry and partial differential equations, highlighting their significance in specialized areas of machine learning. Although we will dive into some sophisticated details, the goal is to make these ideas accessible, focusing on clarity, concrete examples, and a guided tour of key concepts.

quick refresher on the general fundamentals of calculus

Many data scientists learn single-variable calculus early in their studies, but it's easy to lose sight of its direct connections to machine learning applications. Below is a refresher on fundamental topics:

limits and continuity

A limit asks, "What value does $f(x)$ approach as $x$ approaches $a$?" Continuity means a function has no abrupt jumps or holes. Formally, a function $f$ is continuous at $a$ if its limit at $a$ exists and equals $f(a)$. Continuity and limits underlie the definitions of derivatives and integrals; in machine learning, we often implicitly assume continuity for the functions we differentiate (like loss functions) to avoid pathological behavior.

differentiation and the chain rule

A derivative measures how fast a function changes when its input changes. If we have a single-variable function $f(x)$, its derivative is:

$$f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}$$

In machine learning, derivatives help us pinpoint the direction and rate of steepest change of a model's error function. Minimizing that error is the driving force in gradient descent.

The chain rule is crucial: if $y = f(g(x))$, the derivative is given by $\frac{dy}{dx} = f'(g(x))\,g'(x)$. This simple principle generalizes elegantly to many-layered functions, an idea at the core of backpropagation. If you have a neural network with multiple layers, you apply the chain rule iteratively from the final output layer all the way back to the initial inputs.
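As a quick sanity check of the definition and the chain rule, the sketch below compares a finite-difference estimate with a hand-derived chain-rule derivative. The functions `g`, `f`, `y` and the helper `numeric_derivative` are illustrative choices made for this example, not part of any library.

```python
import math

def numeric_derivative(func, x, h=1e-6):
    """Central-difference estimate of func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)

# Illustrative composite function y = f(g(x)) with g(x) = x**2 and f(u) = sin(u).
def g(x):
    return x ** 2

def f(u):
    return math.sin(u)

def y(x):
    return f(g(x))

def dy_dx(x):
    # Chain rule: f'(g(x)) * g'(x) = cos(x**2) * 2x
    return math.cos(g(x)) * 2 * x

x0 = 1.3
print("finite difference:", numeric_derivative(y, x0))
print("chain rule:       ", dy_dx(x0))
```

The two printed values should agree to several decimal places; the small gap comes from the finite step `h`.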

finding extrema

Machine learning often revolves around finding extrema of objective functions: minima when training a model, maxima in certain other scenarios (e.g., maximum likelihood). To find candidate local minima or maxima of a differentiable single-variable function $f$, you set its derivative to zero:

$$f'(x) = 0$$

From there, you check the second derivative (or use another test) to confirm whether the point is a minimum ($f''(x) > 0$), a maximum ($f''(x) < 0$), or neither, such as an inflection point, the single-variable counterpart of a saddle point.
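The procedure can be sketched with SymPy. The cubic below is an arbitrary example chosen for illustration; the classification uses the second-derivative test and reports "inconclusive" when the second derivative vanishes.

```python
import sympy as sp

x = sp.Symbol('x', real=True)
f = x**3 - 3*x                      # illustrative function, chosen for this sketch

critical_points = sp.solve(sp.diff(f, x), x)   # solve f'(x) = 0
second = sp.diff(f, x, 2)                      # second derivative f''(x)

for c in critical_points:
    curvature = second.subs(x, c)
    if curvature > 0:
        kind = "local minimum"
    elif curvature < 0:
        kind = "local maximum"
    else:
        kind = "inconclusive"
    print(c, kind)
```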

taylor series

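A Taylor expansion approximates functions near a point $a$. For a function $f$ with sufficient derivatives, its Taylor series around $a$ is:

$$f(x) = \sum_{n=0}^{\infty} \frac{f^{(n)}(a)}{n!}\,(x - a)^n = f(a) + f'(a)(x - a) + \frac{f''(a)}{2!}(x - a)^2 + \cdots$$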

In machine learning, Taylor expansions help analyze local behavior of loss functions or approximate them near optimum points. This local approximation viewpoint can offer insights into optimization landscapes, convexity, and numerical stability.
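To see the local-approximation idea numerically, here is a minimal sketch that truncates the Taylor series of the exponential function at increasing orders. The helper name `taylor_exp` and the evaluation point 0.5 are choices made for this example.

```python
import math

def taylor_exp(x, a=0.0, order=4):
    """Taylor polynomial of exp around a, truncated at the given order.

    Every derivative of exp at a equals exp(a), so each term is
    exp(a) * (x - a)**n / n!.
    """
    return sum(math.exp(a) * (x - a) ** n / math.factorial(n)
               for n in range(order + 1))

for order in (1, 2, 4, 8):
    approx = taylor_exp(0.5, order=order)
    print(order, approx, abs(approx - math.exp(0.5)))
```

The error shrinks quickly as the order grows, as long as the evaluation point stays close to the expansion point.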

convex and non-convex functions

A function is convex if, informally, it "curves upward" everywhere. In single-variable terms, a twice-differentiable convex function has a nonnegative second derivative ($f''(x) \ge 0$). Convexity in higher dimensions is more nuanced, but the single-variable idea generalizes. Convex optimization problems (e.g., linear regression with L2 regularization) are easier to handle because every local minimum is also a global minimum. Non-convex problems (e.g., deep neural networks, certain clustering algorithms) may have multiple local minima or saddle points, making them trickier to optimize.

integration

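The integral of a function $f$ from $a$ to $b$ is:

$$\int_a^b f(x)\,dx = \lim_{n \to \infty} \sum_{i=1}^{n} f(x_i)\,\Delta x, \qquad \Delta x = \frac{b - a}{n}, \quad x_i = a + i\,\Delta x$$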

In data science, integrals appear whenever we compute area under curves (e.g., analyzing probability densities or cumulative distributions). Integrals help define the expectation of random variables, which is a fundamental operation in statistical analysis. For instance, the expected value of a continuous random variable $X$ with density $p(x)$ is:

$$\mathbb{E}[X] = \int_{-\infty}^{\infty} x\,p(x)\,dx$$

Line integrals, surface integrals, or multidimensional integrals arise in more advanced applications such as computing probabilities over multiple variables or dealing with certain PDE-based image processing techniques. Even though linear algebra and probability theory are covered in separate parts of this course, it's valuable to see how integrals tie in with gradient methods and expectations in ML.
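As a small illustration of an expectation computed as an integral, the sketch below integrates a normal density numerically with the trapezoidal rule. The density parameters (mean 2, standard deviation 1), the truncation interval, and the helper names are all assumptions made for this example.

```python
import math

def normal_pdf(x, mu=2.0, sigma=1.0):
    """Density of a normal distribution (illustrative parameters)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def trapezoid(func, a, b, n=10_000):
    """Composite trapezoidal rule on [a, b] with n subintervals."""
    h = (b - a) / n
    total = 0.5 * (func(a) + func(b))
    for i in range(1, n):
        total += func(a + i * h)
    return total * h

# Truncate the infinite range to [-8, 12]; the tails beyond it are negligible here.
total_mass = trapezoid(normal_pdf, -8.0, 12.0)
mean = trapezoid(lambda x: x * normal_pdf(x), -8.0, 12.0)
print("integral of the density:", total_mass)
print("expected value:         ", mean)
```

The first number should be very close to 1 (the density integrates to one) and the second close to the mean of the chosen distribution.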

multivariate calculus

In real-world machine learning scenarios, we almost always deal with functions of multiple variables: think of a neural network's parameters $\theta = (\theta_1, \dots, \theta_n)$. The shift from single-variable calculus to multivariate calculus is a leap into higher dimensions, but it follows familiar principles.

partial derivatives

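A partial derivative with respect to $x_i$ means differentiating the function while keeping all other variables constant. For a function $f(x_1, \dots, x_n)$:

$$\frac{\partial f}{\partial x_i} = \lim_{h \to 0} \frac{f(x_1, \dots, x_i + h, \dots, x_n) - f(x_1, \dots, x_n)}{h}$$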

Each partial derivative measures how sensitive the function is to changes along one coordinate direction. Collecting these partial derivatives into a vector yields the gradient.

gradient vectors

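The gradient is the vector of partial derivatives:

$$\nabla f(\mathbf{x}) = \left( \frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, \dots, \frac{\partial f}{\partial x_n} \right)^\top$$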

Geometrically, the gradient points in the direction of steepest ascent of the function. In machine learning, especially in gradient-based optimization, we are interested in the negative gradient, which indicates the steepest descent direction.
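A minimal sketch of steepest descent on a simple bowl-shaped function follows. The function `f`, its hand-computed gradient `grad_f`, the starting point, and the step size are all illustrative assumptions.

```python
import numpy as np

def f(x):
    # Illustrative bowl-shaped function with its minimum at (1, -2).
    return (x[0] - 1.0) ** 2 + 2.0 * (x[1] + 2.0) ** 2

def grad_f(x):
    # Partial derivatives computed by hand.
    return np.array([2.0 * (x[0] - 1.0), 4.0 * (x[1] + 2.0)])

x = np.array([5.0, 5.0])          # arbitrary starting point
learning_rate = 0.1               # hypothetical step size
for _ in range(200):
    x = x - learning_rate * grad_f(x)   # step along the negative gradient

print("approximate minimizer:", x)
print("function value there: ", f(x))
```

Each update moves against the gradient, so the function value decreases until the iterate settles near the minimizer.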

[Figure: Illustration of gradient vector. Caption: Visualizing how the gradient points in the direction of steepest ascent.]

directional derivatives

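A directional derivative measures the instantaneous rate of change of $f$ in a specific direction. Formally, if $\mathbf{u}$ is a unit vector in the direction we care about, then

$$D_{\mathbf{u}} f(\mathbf{x}) = \lim_{h \to 0} \frac{f(\mathbf{x} + h\,\mathbf{u}) - f(\mathbf{x})}{h}$$

You can show that $D_{\mathbf{u}} f(\mathbf{x}) = \nabla f(\mathbf{x}) \cdot \mathbf{u}$ (the dot product). A key insight is that, among all unit vectors, the direction of the gradient maximizes the directional derivative, which is why gradient-based algorithms move along the gradient to climb the function and against it to descend.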
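The dot-product identity for the directional derivative can be checked numerically. In the sketch below, the function `f`, the point `x0`, and the direction `u` are arbitrary illustrative choices.

```python
import numpy as np

def f(x):
    return x[0] ** 2 * x[1] + np.sin(x[1])

def grad_f(x):
    # Hand-derived partial derivatives of f.
    return np.array([2 * x[0] * x[1], x[0] ** 2 + np.cos(x[1])])

x0 = np.array([1.0, 2.0])
u = np.array([3.0, 4.0]) / 5.0          # unit vector

# Directional derivative from its limit definition (central difference).
h = 1e-6
finite_diff = (f(x0 + h * u) - f(x0 - h * u)) / (2 * h)

# Directional derivative as a dot product with the gradient.
dot_product = grad_f(x0) @ u
print(finite_diff, dot_product)

# Among unit vectors, the normalized gradient gives the largest value,
# and that value equals the gradient's norm.
g = grad_f(x0)
steepest = g / np.linalg.norm(g)
print(g @ steepest, np.linalg.norm(g))
```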

chain rule in multiple dimensions

Consider a multivariate function $z = f(x, y)$. You can generalize the chain rule:

$$\frac{dz}{dt} = \frac{\partial f}{\partial x}\frac{dx}{dt} + \frac{\partial f}{\partial y}\frac{dy}{dt}$$

where $x = x(t)$ and $y = y(t)$. For a deep neural network, this extends to many layers, each with multiple inputs and outputs. We apply the chain rule systematically to compute all partial derivatives, step by step. This systematic approach is exactly what frameworks like PyTorch or TensorFlow do automatically when they perform backpropagation.
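Here is a small worked check of the multivariate chain rule for a function of two intermediate variables that both depend on a single parameter `t`. The specific choices (`x = cos t`, `y = t**2`, `f(x, y) = x * y`) are assumptions made purely for illustration.

```python
import math

def x_of(t):
    return math.cos(t)

def y_of(t):
    return t ** 2

def f(x, y):
    return x * y

def dz_dt(t):
    """Chain rule: dz/dt = (df/dx)(dx/dt) + (df/dy)(dy/dt)."""
    x, y = x_of(t), y_of(t)
    df_dx, df_dy = y, x                    # partials of f(x, y) = x * y
    dx_dt, dy_dt = -math.sin(t), 2 * t
    return df_dx * dx_dt + df_dy * dy_dt

t0, h = 0.7, 1e-6
numeric = (f(x_of(t0 + h), y_of(t0 + h)) - f(x_of(t0 - h), y_of(t0 - h))) / (2 * h)
print(numeric, dz_dt(t0))
```

Backpropagation applies the same sum-over-paths rule, only with many more intermediate variables.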

jacobians and hessians

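The Jacobian is a matrix containing all first-order partial derivatives of a vector-valued function. If we have $\mathbf{f}: \mathbb{R}^n \to \mathbb{R}^m$, then the Jacobian is an $m \times n$ matrix:

$$J = \begin{bmatrix} \dfrac{\partial f_1}{\partial x_1} & \cdots & \dfrac{\partial f_1}{\partial x_n} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial f_m}{\partial x_1} & \cdots & \dfrac{\partial f_m}{\partial x_n} \end{bmatrix}$$

When $m = 1$ (i.e., a scalar output, such as a loss function), the Jacobian reduces to the row vector of partial derivatives, which is just another way to talk about the gradient.

The Hessian is the second-order partial derivative matrix of a scalar function. If $f: \mathbb{R}^n \to \mathbb{R}$, its Hessian is an $n \times n$ matrix:

$$H_{ij} = \frac{\partial^2 f}{\partial x_i\,\partial x_j}, \qquad i, j = 1, \dots, n$$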

The Hessian helps us analyze curvature and is integral to second-order optimization methods (e.g., Newton's method). Although exact Hessians can be expensive to compute in high dimensions, approximations (like those in L-BFGS) see use in advanced ML scenarios.
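For small symbolic examples, SymPy can build both matrices directly. The functions `F` and `f` below are illustrative choices; `Matrix.jacobian` and `sympy.hessian` are the SymPy calls used.

```python
import sympy as sp

x, y = sp.symbols('x y', real=True)

# Vector-valued function F: R^2 -> R^2 (illustrative).
F = sp.Matrix([x**2 * y, sp.sin(x) + y])
J = F.jacobian([x, y])          # 2 x 2 matrix of first-order partials

# Scalar function for the Hessian (illustrative).
f = x**3 + x * y**2
H = sp.hessian(f, (x, y))       # 2 x 2 matrix of second-order partials

sp.pprint(J)
sp.pprint(H)
```

Symbolic matrices like these are practical only for small problems; large models rely on automatic differentiation instead.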

vector and matrix calculus

As machine learning models scale, vector and matrix notation becomes essential for representing large parameter spaces and data sets efficiently. Here are some highlights of vector and matrix calculus:

vector fields, divergence, and curl

- Vector fields: A function that outputs a vector for each point in space (e.g., the velocity field of fluid flow). In ML contexts, we may see vector fields for multi-output transformations or embeddings.

- Divergence measures how much a vector field expands or contracts at a point (like "outflow" in fluid mechanics).

- Curl measures the twisting or rotational component of the field.

These operations come up in more specialized ML topics (e.g., certain PDE-based methods for images, or physically-inspired approaches to data transformations).

vectorization of operations

In practice, data scientists vectorize computations to leverage optimized linear algebra routines (e.g., BLAS, CUDA kernels). Rather than computing element-wise operations with loops, we express them as matrix multiplications, vector additions, and so forth. This speeds up training by orders of magnitude, especially for large-scale models. Frameworks like NumPy, PyTorch, and TensorFlow handle these vectorized operations under the hood.
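The difference between a loop and a vectorized expression can be sketched with NumPy. The data below is synthetic, and the array sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))   # 200 samples, 5 features (synthetic data)
w = rng.normal(size=5)          # weight vector

# Loop version: one explicit dot product per sample.
loop_out = np.empty(200)
for i in range(200):
    total = 0.0
    for j in range(5):
        total += X[i, j] * w[j]
    loop_out[i] = total

# Vectorized version: a single matrix-vector product.
vec_out = X @ w

print(np.allclose(loop_out, vec_out))
```

Both versions compute the same numbers; the vectorized one hands the inner loops to optimized compiled routines.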

matrix and tensor derivatives

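A typical example in deep learning is computing $\frac{\partial L}{\partial W}$ where $L$ is a scalar loss that depends on $\mathbf{y} = W\mathbf{x}$. Knowing matrix calculus shortcuts drastically reduces the complexity of deriving these expressions by hand. Common identities you might see:

- $\frac{\partial}{\partial \mathbf{x}} (A\mathbf{x}) = A$ (with appropriate dimension ordering).

- $\frac{\partial}{\partial \mathbf{x}} (\mathbf{x}^\top A\,\mathbf{x}) = (A + A^\top)\,\mathbf{x}$.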

In more advanced networks, parameters are structured as tensors, and the same rules generalize. Automatic differentiation frameworks also rely heavily on well-optimized matrix calculus routines.
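Matrix-calculus results are easy to get wrong by a transpose, so a numerical check is a useful habit. The sketch below assumes a squared-error loss $L = \tfrac{1}{2}\lVert W\mathbf{x} - \mathbf{t}\rVert^2$ for a single linear layer, for which the gradient with respect to $W$ is the outer product $(W\mathbf{x} - \mathbf{t})\,\mathbf{x}^\top$. The loss, shapes, and variable names are assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 4))      # layer weights
x = rng.normal(size=4)           # input vector
target = rng.normal(size=3)      # hypothetical regression target

def loss(W):
    residual = W @ x - target
    return 0.5 * residual @ residual

# Closed-form gradient: outer product of the residual and the input.
analytic = np.outer(W @ x - target, x)

# Entry-by-entry central differences for comparison.
numeric = np.zeros_like(W)
h = 1e-6
for i in range(W.shape[0]):
    for j in range(W.shape[1]):
        step = np.zeros_like(W)
        step[i, j] = h
        numeric[i, j] = (loss(W + step) - loss(W - step)) / (2 * h)

print(np.max(np.abs(analytic - numeric)))
```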

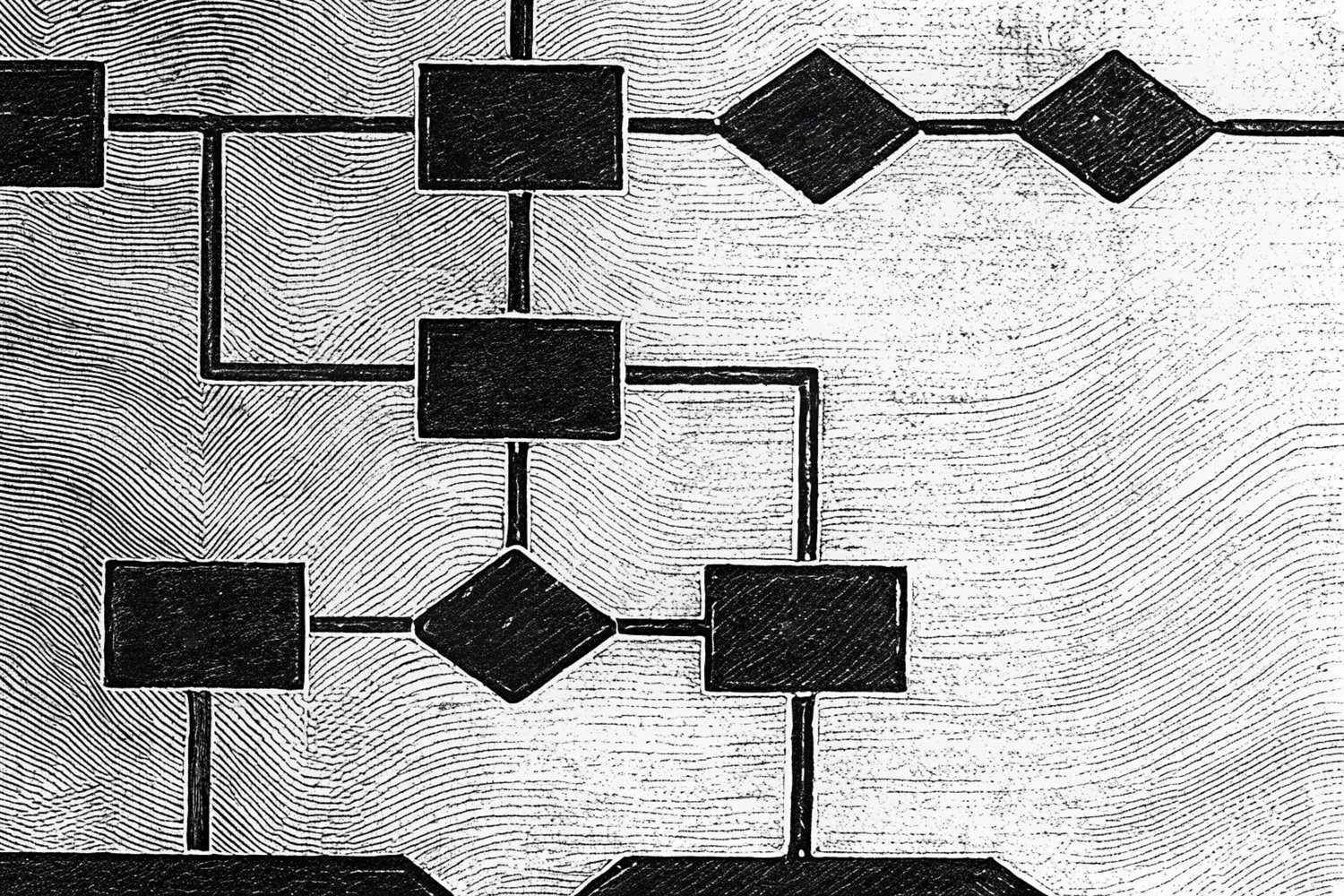

[Figure: Matrix calculus concept diagram. Caption: Representing gradients in matrix/tensor form allows for efficient computation.]

a small cheat-sheet example

- Derivative of a scalar w.r.t a vector: Yields a row vector (gradient).

- Derivative of a vector w.r.t a scalar: Yields a vector.

- Derivative of a vector w.r.t a vector: Yields a Jacobian matrix.

When dealing with neural networks, each parameter can be seen as an element in a large vector of weights $\theta$. The network outputs might be a scalar (e.g., a loss) or a vector (e.g., multi-class predictions), so you decide whether to form gradients or Jacobians accordingly.

relation of calculus to probability and expectation

Calculus and probability interweave closely in machine learning, especially in areas like maximum likelihood estimation, Bayesian inference, and information theory.

cross-entropy, likelihoods, and derivatives

Cross-entropy is a primary loss function in classification tasks:

$$H(p, q) = -\sum_{x} p(x)\,\log q(x)$$

When we differentiate cross-entropy with respect to the parameters of $q$, we get updates that push $q$ to match $p$. In practice, $q$ is a model's predicted probability distribution (often parameterized by a neural network). This approach ties directly into the notion of maximum likelihood. Suppose you have a likelihood function $L(\theta)$; you often take the log-likelihood $\ell(\theta) = \log L(\theta)$ and find the maximum by solving:

$$\nabla_\theta\, \ell(\theta) = 0.$$
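A concrete case: when the predicted distribution $q$ is a softmax over logits and the cross-entropy is $H(p, q) = -\sum_x p(x) \log q(x)$, the gradient with respect to the logits is simply $q - p$ (for a target $p$ that sums to one). The sketch below checks this with finite differences; the logits and the one-hot target are made-up values.

```python
import numpy as np

def softmax(z):
    shifted = z - np.max(z)          # subtract the max for numerical stability
    e = np.exp(shifted)
    return e / e.sum()

def cross_entropy(p, q):
    return -np.sum(p * np.log(q))

logits = np.array([2.0, 0.5, -1.0])  # hypothetical model outputs
p = np.array([0.0, 1.0, 0.0])        # one-hot target distribution

analytic = softmax(logits) - p       # closed-form gradient w.r.t. the logits

numeric = np.zeros_like(logits)
h = 1e-6
for i in range(len(logits)):
    step = np.zeros_like(logits)
    step[i] = h
    numeric[i] = (cross_entropy(p, softmax(logits + step))
                  - cross_entropy(p, softmax(logits - step))) / (2 * h)

print(analytic)
print(numeric)
```

A gradient step on the logits therefore lowers the probabilities that are too high and raises the one for the correct class.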

integrals in probability densities and cdfs

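The probability density function (PDF) of a continuous variable must integrate to 1 over its domain. Likewise, the cumulative distribution function (CDF) is the integral of the PDF. Whenever you see expectations, moments, or marginal probabilities, you are performing integrals:

$$\int_{-\infty}^{\infty} p(x)\,dx = 1, \qquad F(x) = \int_{-\infty}^{x} p(t)\,dt, \qquad \mathbb{E}[X^k] = \int_{-\infty}^{\infty} x^k\,p(x)\,dx$$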

Moments — like mean, variance, skewness — are specific integrals of the density with different powers of the variable.

derivatives of likelihood and log-likelihood

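For a parameterized model with density $p(x \mid \theta)$ over data $x_1, \dots, x_N$ (assumed independent):

$$L(\theta) = \prod_{i=1}^{N} p(x_i \mid \theta), \qquad \ell(\theta) = \log L(\theta) = \sum_{i=1}^{N} \log p(x_i \mid \theta)$$

To find the maximum likelihood estimate, you take the derivative of $\ell(\theta)$ w.r.t. $\theta$ and set it to zero. In practice, we might do this via gradient-based iterative methods rather than solving analytically (especially for complex models).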
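As a minimal sketch of the iterative route, the code below fits the mean of a normal distribution with known unit variance by gradient ascent on the average log-likelihood. The synthetic data, learning rate, and iteration count are assumptions; for this model the closed-form answer is the sample mean, which makes the result easy to check.

```python
import numpy as np

rng = np.random.default_rng(0)
data = rng.normal(loc=3.0, scale=1.0, size=500)   # synthetic observations

mu = 0.0                 # initial guess for the mean
learning_rate = 0.1      # hypothetical step size
for _ in range(500):
    # Derivative of the average log-likelihood of N(mu, 1) with respect to mu.
    grad = np.mean(data - mu)
    mu += learning_rate * grad       # ascent step: we are maximizing

print("gradient-ascent estimate:", mu)
print("sample mean:             ", data.mean())
```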

expectation, moments, and gradients

The expectation of a function $f(x)$ under distribution $p(x)$ is:

$$\mathbb{E}_{p}[f(x)] = \int f(x)\,p(x)\,dx$$

Sometimes we differentiate these integrals w.r.t. parameters inside $f$ or $p$. This is key in methods like the "score function" estimator (used in policy gradients or reinforcement learning) and reparameterization tricks (used in variational autoencoders).
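Both estimators can be compared on a toy problem where the answer is known. The sketch assumes $x \sim \mathcal{N}(\mu, 1)$ and $f(x) = x^2$, so that $\mathbb{E}[f(x)] = \mu^2 + 1$ and the exact gradient with respect to $\mu$ is $2\mu$. The sample size and seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
mu = 1.5
n = 200_000

# Score-function estimator: average of f(x) * d/dmu log p(x; mu),
# where d/dmu log N(x; mu, 1) = (x - mu).
x = rng.normal(loc=mu, scale=1.0, size=n)
score_estimate = np.mean(x ** 2 * (x - mu))

# Reparameterization: write x = mu + eps with eps ~ N(0, 1),
# so d/dmu f(x) = 2 * x and the gradient moves inside the expectation.
eps = rng.normal(size=n)
reparam_estimate = np.mean(2.0 * (mu + eps))

exact = 2.0 * mu
print(score_estimate, reparam_estimate, exact)
```

Both estimates are unbiased, but on this problem the reparameterized one has noticeably lower variance, which is one reason it is preferred when the sampling step can be written as a differentiable transformation.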

bayesian inference and variational methods

Bayesian inference often boils down to computing a posterior distribution:

$$p(\theta \mid D) = \frac{p(D \mid \theta)\,p(\theta)}{p(D)}$$

Because these posteriors can be intractable, we resort to variational approaches that approximate the posterior with a more tractable distribution $q_\phi(\theta)$. We define an objective called the Evidence Lower BOund (ELBO) and optimize it via gradient methods. The derivatives of the ELBO w.r.t. the variational parameters $\phi$ are computed, again employing calculus:

$$\mathrm{ELBO}(\phi) = \mathbb{E}_{q_\phi(\theta)}\big[\log p(D, \theta) - \log q_\phi(\theta)\big]$$

We then take $\nabla_\phi\,\mathrm{ELBO}(\phi)$ to update $\phi$. In advanced methods like Hamiltonian Monte Carlo (Neal, 2011), we also use gradient information of the posterior to draw efficient samples.

automatic differentiation and implementation

For large-scale models, computing all the necessary partial derivatives by hand is impractical. Automatic differentiation (AD) frameworks let us specify a model in code, then obtain accurate derivatives automatically.

forward-mode vs. reverse-mode differentiation

- Forward-mode AD: Propagates derivatives from inputs forward to outputs. Good for functions with few inputs and many outputs, since each pass yields the derivatives of all outputs with respect to one input direction.

- Reverse-mode AD: Propagates from outputs backward to inputs. Perfect when you have fewer outputs (e.g., a single scalar loss) but many inputs (model parameters). Hence, reverse-mode AD is standard in machine learning libraries.
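Forward mode is often explained with dual numbers, which carry a value and a derivative through every operation. The class below is a toy sketch written for this article (the names `Dual` and `sin` are not from any library), supporting only addition, multiplication, and sine.

```python
import math

class Dual:
    """A number value + deriv * eps with eps**2 = 0; `deriv` carries the derivative."""
    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)
    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule applied alongside the ordinary product.
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)
    __rmul__ = __mul__

def sin(d):
    # Chain rule for the sine of a dual number.
    return Dual(math.sin(d.value), math.cos(d.value) * d.deriv)

def f(x):
    return x * x * x + 2 * x + sin(x)

out = f(Dual(2.0, 1.0))      # seed the input derivative dx/dx = 1
print("f(2)  =", out.value)
print("f'(2) =", out.deriv)  # compare with 3 * 2**2 + 2 + cos(2)
```

One forward pass gives the derivative with respect to one input. With millions of parameters that would mean millions of passes, which is why reverse mode is preferred for scalar losses.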

symbolic vs. numeric differentiation

- Symbolic differentiation: Tools like SymPy manipulate an expression symbolically and differentiate it exactly. This is useful for small, closed-form expressions, but can be slow or unwieldy for large, dynamic computational graphs.

- Numeric differentiation: Uses finite differences to approximate the derivative. It's straightforward but can suffer from numerical instability and requires multiple function evaluations.

- Automatic differentiation (AD): Decomposes the program into elementary operations, typically by recording them in a graph or tape as it runs, and applies the chain rule to each one. The resulting derivatives are exact up to floating-point rounding, at a modest constant-factor overhead, which is why AD is the backbone of modern ML frameworks.
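The instability of numeric differentiation shows up as soon as the step size is varied. The sketch below uses a forward difference on `math.sin`; the three step sizes are illustrative.

```python
import math

def forward_diff(func, x, h):
    """Forward-difference approximation of func'(x)."""
    return (func(x + h) - func(x)) / h

exact = math.cos(1.0)        # true derivative of sin at x = 1

errors = {}
for h in (1e-1, 1e-8, 1e-15):
    errors[h] = abs(forward_diff(math.sin, 1.0, h) - exact)
    print(f"h = {h:g}, absolute error = {errors[h]:.3e}")
```

A large step suffers from truncation error, while a very small step suffers from floating-point cancellation, so the error is smallest somewhere in between. AD sidesteps this trade-off because it never subtracts nearly equal function values.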

code example: computing derivatives in python

Below is a short example using Python's sympy for symbolic differentiation. Although symbolic differentiation is not how deep learning frameworks typically run, it demonstrates the principle:

```python
import sympy as sp

# Define a symbolic variable
x = sp.Symbol('x', real=True)

# Define a function
f = x**3 + 2*x - 5

# Compute its derivative
df = sp.diff(f, x)

print("f(x) =", f)
print("df/dx =", df)
```

You would see `3*x**2 + 2` as the output for the derivative. In a framework like PyTorch, you'd define a computational graph for a function and call `.backward()` on a final scalar, which uses reverse-mode AD to compute all needed gradients.

implementation in ml frameworks

- PyTorch's autograd: Wraps tensors in a structure that records operations. When you call `.backward()`, it traverses that recorded graph in reverse to compute gradients.

- TensorFlow's computational graph: Builds a static or dynamic graph of ops, then uses reverse-mode AD.

- JAX's functional approach: Treats differentiation as a transformation of pure functions and relies on composable transformations like `grad`, `vmap`, or `jit`.

These frameworks can also apply graph optimizations, particularly when the computation is traced or compiled: pruning subgraphs not needed for the final gradient, merging common operations, and more, which improves speed and memory usage.
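To make the record-then-traverse idea concrete without depending on any framework, here is a toy reverse-mode engine for scalars. It is a teaching sketch only: the `Value` class and its attributes are invented for this example and do not mirror how PyTorch, TensorFlow, or JAX are implemented internally.

```python
class Value:
    """Scalar that remembers how it was computed so gradients can flow backward."""
    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # inputs of the operation that produced this value
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))
    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))
    __rmul__ = __mul__

    def backward(self):
        # Order the recorded graph topologically, then apply the chain rule in reverse.
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad

w, b, x = Value(3.0), Value(-1.0), Value(2.0)
loss = (w * x + b) * (w * x + b)     # (w*x + b)**2 written with supported operations
loss.backward()
print(loss.data, w.grad, b.grad, x.grad)
```

A single backward pass fills in the gradient for every input at once, which is the property that makes reverse mode attractive for scalar losses with many parameters.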

further topics, specialized applications, optional stuff

We end with a brief look at advanced ideas that connect calculus to specialized domains in machine learning.

differential geometry in ml

In many advanced methods, parameters live on manifolds — curved spaces that require Riemannian geometry to handle derivatives properly (Amari, 1998). For example, the natural gradient method uses the Fisher Information Matrix to perform gradient descent in a more "geometry-aware" way. This approach can lead to faster convergence for certain models, notably in Bayesian parameter estimation and large-scale logistic regression.

partial differential equations (pdes) in ml

Partial differential equations appear in image denoising or in physically-based models. For instance, the Rudin-Osher-Fatemi model (Rudin et al., 1992) uses PDEs for total variation-based image denoising. In ML, PDE-based approaches can incorporate domain knowledge or physically consistent constraints. They also show up in advanced generative modeling or neural PDE solvers for simulating complex phenomena.

automatic mixed precision and gradient scaling

With the explosion of GPU-based training, we often train with half-precision (float16) or bfloat16 to speed up computations. This sometimes introduces numerical issues (e.g., gradients becoming too small to represent). Techniques like gradient scaling or mixed-precision training compensate by scaling loss and gradient values to remain within valid ranges, then scaling them back at the appropriate stage.
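The underflow problem and the scaling remedy can be reproduced with NumPy's `float16` type. The gradient value and the scale factor below are hypothetical numbers picked to make the effect visible; real frameworks choose and adjust the scale for you.

```python
import numpy as np

tiny_grad = 1e-8                          # hypothetical gradient value
unscaled = np.float16(tiny_grad)          # too small for float16: becomes zero
print("stored directly in float16:", unscaled)

scale = 65536.0                           # hypothetical loss-scale factor (2**16)
scaled = np.float16(tiny_grad * scale)    # now within float16's representable range
recovered = np.float32(scaled) / np.float32(scale)   # unscale in float32
print("scaled, stored, then unscaled:", recovered)
```

Multiplying the loss by the scale factor multiplies every gradient by the same factor (by linearity of differentiation), so dividing afterward in higher precision restores the intended update.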

stochastic calculus and continuous-time models

Some areas of ML, particularly those that involve finance or advanced time-series modeling, bring in stochastic calculus. Here, random noise is introduced in continuous-time processes:

- Brownian motion: A continuous-time stochastic process often used for modeling.

- Itô integrals and stochastic differential equations (SDEs): Extend ordinary integrals and differential equations to handle random, time-dependent terms.

- Euler-Maruyama, Milstein methods: Numerical approximations for SDEs.

These methods are cutting-edge in certain reinforcement learning scenarios, or in continuous-time models that unify PDEs and dynamics under uncertainty.
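As a minimal sketch of the Euler-Maruyama scheme, the code below simulates an Ornstein-Uhlenbeck process $dX_t = -\kappa X_t\,dt + \sigma\,dW_t$, chosen because its mean at time $T$ is known in closed form ($x_0 e^{-\kappa T}$). The process, parameter values, and path count are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
kappa, sigma = 1.0, 0.5                   # mean-reversion rate and noise level
x0, T, n_steps, n_paths = 1.0, 1.0, 100, 20_000
dt = T / n_steps

x = np.full(n_paths, x0)
for _ in range(n_steps):
    # Brownian increments are normal with variance dt.
    dW = rng.normal(scale=np.sqrt(dt), size=n_paths)
    # Euler-Maruyama update: drift * dt + diffusion * dW.
    x = x + (-kappa * x) * dt + sigma * dW

print("simulated mean at T:", x.mean())
print("exact mean at T:    ", x0 * np.exp(-kappa * T))
```

The simulated mean differs slightly from the exact one because of both the time discretization and Monte Carlo noise; shrinking `dt` and adding paths reduces each source of error.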

Whether you are performing simple linear regression or diving into advanced neural network optimization, the foundations of calculus illuminate the underlying mechanics of how model parameters are updated. This grounding in single-variable and multivariate derivatives, integrals, and matrix calculus paves the way for deeper exploration of optimization algorithms, Bayesian inference, and beyond. As you progress through this course, keep in mind how calculus permeates nearly every algorithmic strategy in modern data science and machine learning — knowing the "why" behind the math can guide you in building more robust and insightful models.