This post is part of the reinforcement learning educational series from my free course. Keep in mind that the correct sequence of posts is outlined on the course page, while the order in Research can be arbitrary.

Artificial intelligence has become a cornerstone of modern navigation systems, permeating numerous industries — from autonomous vehicles and aerial drones to mobile robots in warehouses and even advanced robotic systems in manufacturing or medical procedures. At its core, AI-driven navigation is about endowing machines with the ability to perceive their environment, predict possible future states of that environment (including other agents), and plan safe, optimal routes for their own motion. The concept of navigation here spans straightforward tasks, such as following a road or corridor, as well as more complex maneuvers in cluttered or dynamic spaces.

I find that AI-driven navigation encapsulates multiple tightly intertwined disciplines: perception (how sensors capture and interpret data), prediction (how the system anticipates future movement of objects or agents), and planning (how the agent itself generates a safe, collision-free, and efficient path). Each of these components, on its own, is a vast research area, with subfields in computer vision, sensor fusion, trajectory prediction, control theory, and motion planning. Moreover, the synergy among these components is what makes a navigation system truly "intelligent." For example, strong perception feeds accurate prediction, and robust prediction influences the complexity of planning algorithms.

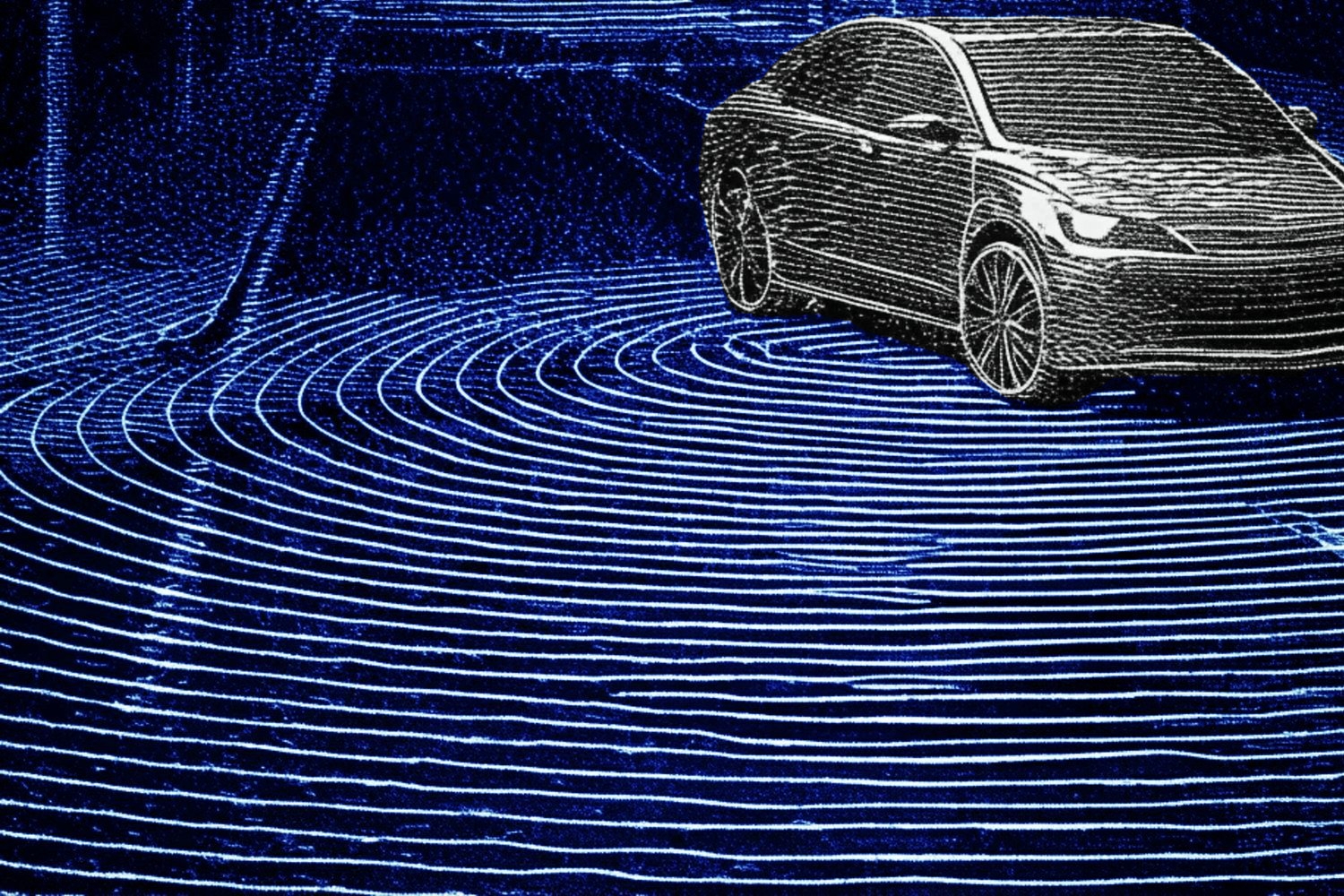

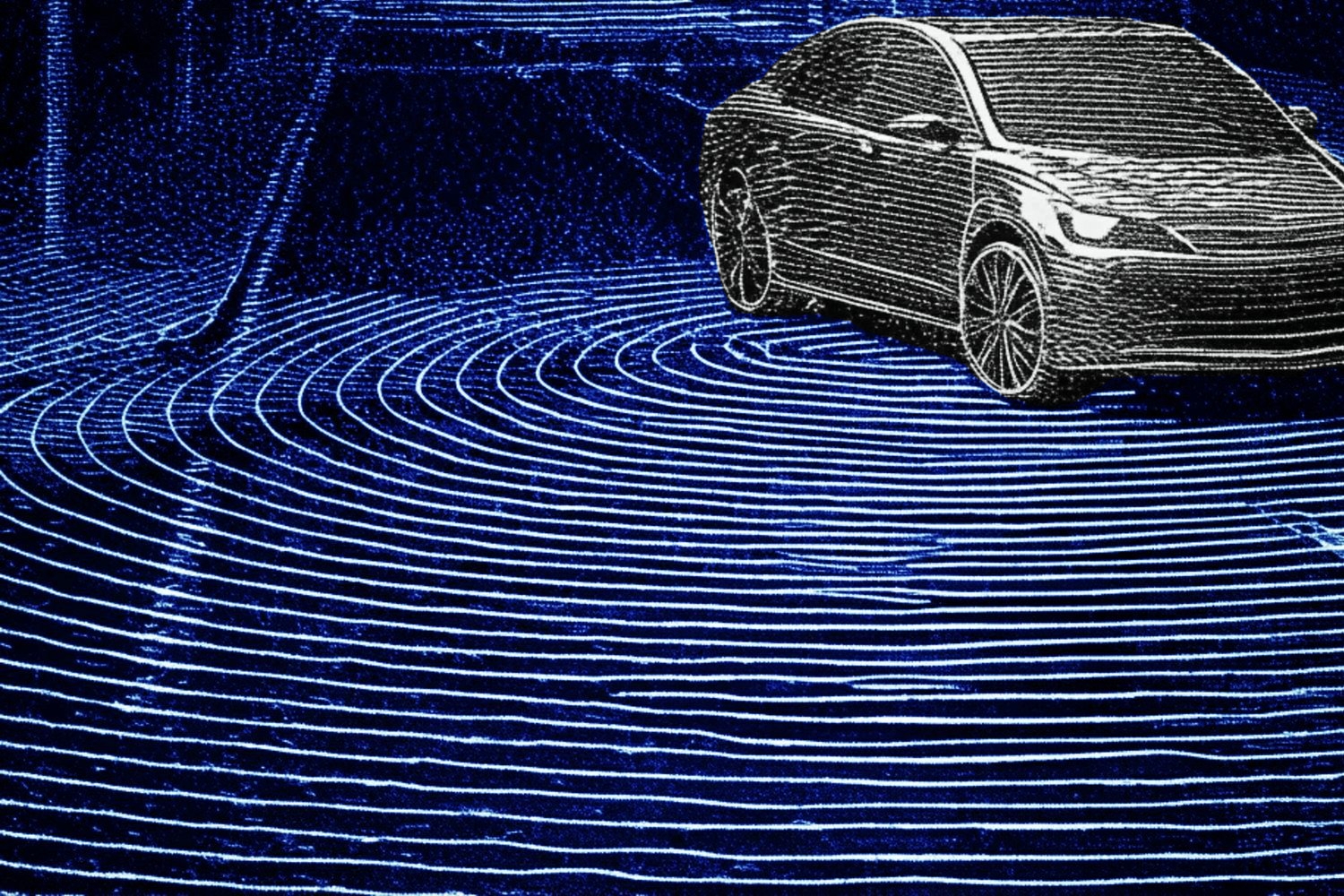

In today's world, AI-driven navigation is vital not only for driver-assist and self-driving cars, but also for aerial and ground-based robots that deliver goods, map hazardous regions, or perform search and rescue. The impetus for AI in navigation can be traced back to the need for robust decision-making under uncertainty, large-scale data integration from sensors (e.g., LiDAR, radar, cameras, inertial measurement units), and real-time path optimization in dynamic, unpredictable settings.

Below, I will dive into the fundamental building blocks of AI-driven navigation, highlight the role of perception, explain how movement prediction methods evolved from classical probabilistic approaches to modern deep learning, discuss the intricacies of planning algorithms, explore self-driving cars as a key use case, review a wide range of AI algorithms for navigation, and round off with a look at emerging trends in 5G, IoT, multi-agent collaboration, and advanced robotic platforms. Throughout, I will incorporate references to cutting-edge research to provide additional perspectives on where this exciting field is heading.

perception in ai navigation

definition and role of perception in navigation

Perception forms the bedrock of any autonomous navigation system. Without a clear understanding of the environment, even the most sophisticated planning algorithms cannot ensure safe or effective routing. Perception, in essence, is the collection of processes and technologies that interpret raw sensor data — for example, pixel data from cameras, three-dimensional point clouds from LiDARs, or reflectance profiles from radar. The purpose is to detect obstacles, localize the agent within a map, gauge the velocities of nearby objects, and construct a consistent, real-time representation of the environment.

In advanced AI-driven navigation scenarios, perception must be robust against multiple sources of uncertainty. Lighting changes, weather conditions, sensor noise, partial occlusions, and fast-moving objects can complicate the perception pipeline. Furthermore, perception is not solely about detection and mapping; in many cases, it also includes a semantic understanding of the environment (e.g., identifying traffic signals, lane boundaries, pedestrians, or vehicles of different classes). For autonomous vehicles such as self-driving cars, robust perception is critical for safely executing maneuvers at scale.

sensors and data acquisition

lidar and radar

Light Detection and Ranging (LiDAR) and radio detection and ranging (radar) have emerged as cornerstone sensing modalities for autonomous navigation. LiDAR systems emit pulsed lasers and measure the time-of-flight to calculate distances, building a dense, accurate 3D map of surrounding objects. Radar, by contrast, uses radio waves and is adept at detecting objects at longer ranges, even in adverse weather conditions like rain, fog, or snow. By using both LiDAR and radar, a navigation system can achieve redundancy in sensing and robust coverage across a variety of environmental conditions.
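To make the time-of-flight idea concrete, here is a minimal sketch. The function name `tof_to_range` is illustrative and does not come from any LiDAR SDK. The pulse travels to the target and back, so the one-way range is half the round-trip distance.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, m/s


def tof_to_range(round_trip_time_s: float) -> float:
    """Convert a round-trip time of flight (seconds) to a one-way range (meters)."""
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A return that arrives after 200 ns corresponds to a target roughly 30 m away.
    print(f"{tof_to_range(200e-9):.2f} m")
```

Real sensors also have to correct for things like timing jitter and beam divergence, which this sketch ignores.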

LiDAR data, typically represented as point clouds, can be processed with specialized machine learning pipelines (e.g., 3D convolutional neural networks, graph neural networks, or voxel-based segmentation networks) to identify objects, free space, and navigable regions. Radar data, while less spatially precise than LiDAR, provides valuable velocity and range information that can augment or confirm LiDAR-based detections. Researchers at NeurIPS 2021 (Chen et al., 2021) experimented with sensor fusion frameworks that combine LiDAR and radar data to improve detection accuracy for objects at various ranges, demonstrating a statistically significant reduction in false positives in congested traffic scenes.

cameras and computer vision

Cameras deliver rich visual context, allowing an AI-driven system to identify the semantic class of objects (cars, pedestrians, bicyclists, traffic signs, etc.) and interpret 2D geometry. Modern deep learning approaches, particularly convolutional neural networks (CNNs), excel at extracting meaningful features from camera images. Tasks such as object detection, semantic segmentation, and instance segmentation are crucial for an autonomous agent's situational awareness. For example, a CNN-based pipeline might detect bounding boxes around cars and pedestrians, while a separate segmentation network highlights lane markings.

Thanks to the popularity of large-scale datasets (e.g., the Waymo Open Dataset, nuScenes, or KITTI), there is a rich ecosystem of pretrained models that can be adapted or fine-tuned for specific navigation tasks. Detection frameworks and model families such as Detectron2 and YOLO are built with fast inference in mind, which helps the perception pipeline remain responsive enough for high-speed motion planning.

gps and inertial measurement units

Global Positioning System (GPS) receivers, combined with inertial measurement units (IMUs), are widely used for high-level localization and for providing approximate velocity and orientation estimates. GPS data can be fused with other sensor data — e.g., wheel encoders, IMU readings, camera-based visual odometry — to achieve accurate global positioning. In urban settings, however, GPS signals can be degraded by multipath reflections and tall buildings. Accordingly, advanced localization systems often use simultaneous localization and mapping (SLAM) techniques. SLAM algorithms fuse multiple sensor inputs in real time to build a local map and pinpoint the agent's location in that map. This synergy between classical estimation methods (e.g., the extended Kalman filter) and modern deep learning (for visual odometry) exemplifies the complexity and interdisciplinary nature of AI-driven navigation.

data preprocessing and fusion techniques

Sensor data fusion often involves aligning heterogeneous data streams — LiDAR point clouds, camera frames, radar signals, IMU outputs — into a single, consistent space-time frame. This alignment is non-trivial because each sensor has different data rates, fields of view, noise characteristics, and latencies. A typical approach is to timestamp every measurement, transform it into a unified coordinate system (e.g., the vehicle coordinate frame), and use interpolation or filtering to handle small timing discrepancies. Advanced multi-sensor fusion systems also weigh sensor reliability dynamically; for instance, if a LiDAR is blocked by heavy snow or dirt, the system might lean more on radar or camera data for obstacle detection until the blockage is resolved.
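The two alignment steps described above (resampling to common timestamps and transforming into a shared frame) can be sketched as follows. This is a simplified illustration under stated assumptions: the helper names are hypothetical, the signal is one-dimensional, points are 2D, and linear interpolation is assumed to be adequate for small timing gaps.

```python
import numpy as np


def resample_to_timestamps(src_times, src_values, target_times):
    """Linearly interpolate a 1D sensor signal onto target timestamps.

    src_times must be sorted in increasing order. Targets outside the source
    range are clamped to the first/last value (np.interp's default behavior).
    """
    return np.interp(target_times, src_times, src_values)


def to_vehicle_frame(points_sensor, rotation, translation):
    """Apply the rigid transform p_vehicle = R @ p_sensor + t to an (N, 2) array."""
    return points_sensor @ rotation.T + translation


if __name__ == "__main__":
    # Radar range-rate samples at 20 Hz, resampled to two camera timestamps.
    radar_t = np.array([0.00, 0.05, 0.10])
    radar_v = np.array([10.0, 11.0, 12.0])
    camera_t = np.array([0.025, 0.075])
    print(resample_to_timestamps(radar_t, radar_v, camera_t))

    # Sensor rotated 90 degrees and mounted 1 m ahead of the vehicle origin.
    R = np.array([[0.0, -1.0], [1.0, 0.0]])
    t = np.array([1.0, 0.0])
    print(to_vehicle_frame(np.array([[1.0, 0.0]]), R, t))
```

In a production stack the extrinsic calibration (`R`, `t`) would come from a calibration procedure, and latency compensation is usually more involved than plain interpolation.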

After spatiotemporal alignment, algorithms such as the Kalman filter or more general Bayesian filters update the fused estimates of the vehicle state (position, orientation, velocity), as well as track objects in the environment. Techniques like gating or robust M-estimators can handle outliers in sensor data. In complex scenarios, a more advanced approach is to utilize factor graphs or graph-based SLAM, in which each sensor measurement is treated as a constraint in a global optimization problem.

challenges in real-time perception

Real-time perception for AI-driven navigation involves tackling enormous computational loads. Many modern vehicles contain specialized hardware accelerators (GPUs or TPUs) to run advanced neural networks at frame rates required for safe driving. In addition, perception must be resilient to distribution shifts (for instance, day vs. night, summer vs. winter), sensor degradations, and the presence of unexpected or novel objects. Some emergent research directions explore online domain adaptation, active learning, and self-supervised learning strategies that allow the perception module to adapt to unseen conditions with minimal human supervision.

Furthermore, scaling from lab prototypes to real-world deployments demands robust testing over billions of simulated or real miles. Simulation platforms such as CARLA or LGSVL provide realistic 3D worlds to test perception. However, even with simulation, bridging the sim-to-real gap can be difficult, which is why real-world data collection remains essential to refine perception pipelines. Consequently, perception in AI navigation remains an active research area, with constant innovations in sensor architectures, neural network model compression, and domain adaptation.

movement prediction in ai-driven systems

introduction to movement prediction

Movement prediction — often referred to as trajectory prediction or motion forecasting — is the step whereby an AI-driven system anticipates how other agents in the environment (vehicles, cyclists, pedestrians, etc.) are likely to move in the near future. This capability is essential for safe route planning, especially in crowded environments or complex traffic scenarios. The system must handle uncertainty and partial observability: not only can sensor noise distort current measurements of speed and direction, but the intentions of other agents can also change unexpectedly.

By modeling potential future trajectories of surrounding agents, an AI-driven navigation platform can proactively avoid collisions, yield right-of-way where appropriate, and even predict merges or lane changes before they happen. Accurate movement prediction is widely regarded as essential for lowering collision risk and for producing smooth, human-like driving behavior.

techniques for predicting motion

trajectory prediction models

Early approaches to movement prediction often assumed simple kinematic models. For instance, a constant-velocity or constant-acceleration model might suffice for short-term predictions. While computationally cheap, such models cannot capture abrupt changes or more sophisticated maneuvers, like a pedestrian deciding to jaywalk or a vehicle making a sudden lane change.
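As a baseline, the constant-velocity model mentioned above fits in a few lines. This is only a sketch; the function name and the 2D state layout are assumptions made for illustration.

```python
import numpy as np


def predict_constant_velocity(position, velocity, horizon_s, dt):
    """Roll a constant-velocity model forward in time.

    Returns an array of shape (steps, 2) with the predicted (x, y) positions
    at t = dt, 2*dt, ..., horizon_s.
    """
    steps = int(round(horizon_s / dt))
    times = dt * np.arange(1, steps + 1)
    position = np.asarray(position, dtype=float)
    velocity = np.asarray(velocity, dtype=float)
    return position + times[:, None] * velocity


if __name__ == "__main__":
    # A vehicle at the origin moving at (2, 1) m/s, predicted 1 s ahead.
    print(predict_constant_velocity((0.0, 0.0), (2.0, 1.0), horizon_s=1.0, dt=0.5))
```

Because the velocity never changes, the prediction is a straight line, which is exactly why this model misses sudden lane changes or a pedestrian stepping off the curb.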

A step up from naive kinematic assumptions is the use of Markov decision processes (MDPs) or partially observable MDPs (POMDPs). These models allow the environment to be represented with a set of discrete states and transitions, potentially capturing the uncertainty in an agent's decision-making more effectively than a purely kinematic approach.

probabilistic methods (e.g., kalman filters, bayesian networks)

Probabilistic filtering has long been a mainstay in motion prediction. The Kalman filter and its nonlinear variants (Extended Kalman Filter, Unscented Kalman Filter) are used to estimate the future state of a target object by iteratively updating a belief distribution over that object's state. For objects that exhibit multiple types of motion, the Interacting Multiple Model (IMM) approach can be employed, maintaining a probability distribution over different motion hypotheses (e.g., turning, accelerating).

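In its linear form, the filter's prediction step propagates the state as

$$
\mathbf{x}_{k+1} = \mathbf{F}\,\mathbf{x}_k + \mathbf{B}\,\mathbf{u}_k + \mathbf{w}_k
$$

Here, $\mathbf{x}_k$ might represent the position and velocity at time $k$; $\mathbf{u}_k$ is a control input (if known); $\mathbf{F}$ and $\mathbf{B}$ are transition matrices, and $\mathbf{w}_k$ is process noise. The filter's measurement update might incorporate LiDAR or camera observations to refine the predicted location, factoring in a measurement noise $\mathbf{v}_k$. While this approach is elegant, it can struggle with highly nonlinear and context-dependent behaviors that arise in, say, dense urban environments.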
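A minimal sketch of the predict/update cycle for a one-dimensional constant-velocity target is shown below. The notation is assumed for this example: `F` is the state-transition matrix, `H` the measurement matrix, `Q` the process-noise covariance, and `R` the measurement-noise covariance. There is no control input, and all numeric values are illustrative rather than tuned for any real sensor.

```python
import numpy as np


def kf_predict(x, P, F, Q):
    """Prediction step: propagate the state mean and covariance."""
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q
    return x_pred, P_pred


def kf_update(x_pred, P_pred, z, H, R):
    """Measurement update: correct the prediction with observation z."""
    y = z - H @ x_pred                      # innovation
    S = H @ P_pred @ H.T + R                # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)     # Kalman gain
    x_new = x_pred + K @ y
    P_new = (np.eye(len(x_pred)) - K @ H) @ P_pred
    return x_new, P_new


def track_1d(measurements, dt=0.1, meas_var=0.25, accel_var=1.0):
    """Track [position, velocity] from a sequence of position measurements."""
    F = np.array([[1.0, dt], [0.0, 1.0]])
    H = np.array([[1.0, 0.0]])
    # Process noise for a white-noise acceleration model.
    Q = accel_var * np.array([[dt**4 / 4, dt**3 / 2], [dt**3 / 2, dt**2]])
    R = np.array([[meas_var]])
    x = np.array([measurements[0], 0.0])    # start at first measurement, zero velocity
    P = np.diag([meas_var, 10.0])           # large initial velocity uncertainty
    for z in measurements[1:]:
        x, P = kf_predict(x, P, F, Q)
        x, P = kf_update(x, P, np.array([z]), H, R)
    return x, P


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    true_positions = 2.0 * 0.1 * np.arange(50)          # target moving at 2 m/s
    noisy = true_positions + rng.normal(0.0, 0.5, 50)   # position noise, std 0.5 m
    state, cov = track_1d(noisy)
    print("estimated [position, velocity]:", state)
```

To forecast further ahead, one can keep calling `kf_predict` without updates; the covariance `P` then grows, which reflects the increasing uncertainty of longer-horizon predictions.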

Bayesian networks provide another framework for motion prediction. They model the conditional dependencies among variables (e.g., velocity, acceleration, lane occupancy), potentially capturing more complex interactions. For instance, a dynamic Bayesian network can represent typical traffic patterns and reason about uncertain events.

deep learning approaches for motion prediction

The surge of deep learning has radically transformed trajectory prediction. Recurrent neural networks (RNNs), especially LSTM (Long Short-Term Memory) or GRU (Gated Recurrent Unit) variants, effectively capture temporal dependencies in sequence data, making them natural choices for modeling trajectory time series. In practice, an LSTM-based network might take the last $N$ observed positions of a vehicle and output a distribution over likely positions in the next few seconds.

CNN-based approaches also emerged for motion prediction when the environment is discretized into grid or occupancy maps. More recently, Graph Neural Networks (GNNs) and Transformers have proven effective at capturing the interactions among multiple agents in a scene. Some advanced frameworks incorporate social pooling or relational reasoning modules that account for how the presence of neighboring vehicles or pedestrians influences an agent's future trajectory.

Many of the state-of-the-art systems (e.g., Waymo's motion forecasting challenge winners) combine these neural architectures with auxiliary inputs such as high-definition maps (HD maps). By encoding lane geometry, speed limits, and traffic rules, the system can produce more realistic and context-aware trajectories. Djuric et al. (arXiv 2020) introduced a deep kinematic model that fused CNN-based road context with LSTM-based agent motion history, producing more plausible predictions than purely kinematic or purely neural approaches.

importance of accurate movement prediction in navigation

Accurate movement prediction is critical for collision avoidance, occupant comfort, and regulatory compliance. A navigation system that overestimates how likely a pedestrian is to step into the road might slow down unnecessarily or brake abruptly, degrading user experience. Conversely, underestimating an aggressive driver's lane change intentions could lead to collisions or near-misses.

Moreover, movement prediction also influences long-horizon planning. If an autonomous car can anticipate congested scenarios or certain conflict points, it can take steps earlier to maneuver into safer or less congested lanes. Emerging research is also investigating ways to unify prediction and planning, allowing the vehicle to re-predict as it plans, forming a tight feedback loop. This integrated approach can help the vehicle adapt quickly to new cues from the environment.

case studies: pedestrian and vehicle movement prediction

Pedestrian motion has proven challenging due to abrupt changes in speed or direction, as well as social norms (e.g., crossing only at crosswalks vs. crossing in mid-block). Several recent works have utilized LSTM or Transformer-based models with visual attention to monitor pedestrian posture, orientation, and environmental cues. This helps the system infer whether a pedestrian is about to step off the curb.

Vehicle movement prediction is another rich domain, requiring integration of traffic rules, lane geometry, and multi-agent interactions. Djuric et al. (NeurIPS 2018) introduced an uncertainty-aware short-term motion prediction model that uses a mixture density network to capture multiple plausible future paths for each agent. This mixture-based approach not only yields a most-likely trajectory but also quantifies the uncertainty around that trajectory.

planning in ai navigation systems

definition and importance of planning

Whereas perception and prediction focus on the external environment and how it might evolve, planning is all about the agent's own actions. Planning seeks to determine a feasible, safe, and optimal (or near-optimal) route from a start state to a goal state. This might include selecting waypoints in free space, computing velocity profiles, or generating explicit steering and acceleration commands.

Planning in AI-driven navigation generally proceeds at two levels: global planning addresses route selection over large spatial and temporal scales (e.g., which highway or set of roads to take), while local planning refines how to maneuver within a lane, handle merges, or make left turns at intersections.

types of planning algorithms

path planning vs. motion planning

Path planning focuses on finding a geometric path in space from a start pose to a goal pose. It frequently assumes a simplified kinematic model (e.g., a point or circle in 2D space) and disregards the vehicle's dynamics or acceleration limits. By contrast, motion planning weaves in dynamic and kinematic constraints, ensuring that the generated path can actually be executed. For instance, a car can't teleport sideways; it must follow a trajectory that respects turning radius, velocity bounds, and acceleration constraints.

global vs. local planning

Global planning involves computing a route at the scale of an entire city or map. It might use graph-based search algorithms on a road network (e.g., a standard Dijkstra or A* search) to figure out which sequence of roads is best for traveling from location A to B. In contrast, local planning is narrower in scope but higher in detail — it decides how to navigate lanes, when to change lanes, how to avoid a sudden obstacle, or which precise trajectory to follow over the next few seconds.

common planning algorithms

a* and dijkstra's algorithms

A* and Dijkstra's are classical graph search algorithms widely used for path planning in discrete or discretized spaces. Dijkstra's algorithm systematically explores the graph from a start node to find the shortest path to all other nodes, guaranteeing an optimal solution in the absence of negative edge weights. A*, on the other hand, introduces heuristics to guide the search, often making it more efficient. When the heuristic is admissible (never overestimates the true cost to the goal), A* still provides an optimal solution.
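The difference between the two algorithms is easiest to see in code. Below is a minimal sketch of A* on a 4-connected occupancy grid with unit step costs; the function name and grid encoding (0 = free, 1 = occupied) are assumptions for this example. Replacing the heuristic with a constant 0 turns it into Dijkstra's algorithm.

```python
import heapq


def astar_grid(grid, start, goal):
    """A* on a 4-connected occupancy grid.

    grid: list of rows, 0 = free, 1 = occupied.
    start, goal: (row, col) tuples.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    def heuristic(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(heuristic(start), 0, start)]   # entries: (f = g + h, g, cell)
    came_from = {start: None}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route to this cell was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None


if __name__ == "__main__":
    grid = [
        [0, 0, 0],
        [1, 1, 0],
        [0, 0, 0],
    ]
    print(astar_grid(grid, (0, 0), (2, 0)))
```

The heuristic only changes the order in which cells are expanded, not the cost of the returned path, as long as it stays admissible.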

In AI-driven navigation, a typical approach is to represent the free space as a grid or occupancy map and run A* to find a geometric path around obstacles. However, naïve grid-based path planning might produce solutions that disregard kinematic or dynamic constraints. As a result, additional smoothing or post-processing is typically required to generate a feasible trajectory.

sampling-based methods (e.g., rrt, prm)

Sampling-based algorithms have gained prominence in motion planning because of their scalability to high-dimensional configuration spaces. Rapidly-exploring Random Trees (RRT) iteratively sample random states from the search space, find the nearest existing node in the tree, and extend that node a short step toward the sample, thereby growing the tree into unexplored regions. Variants like RRT* additionally rewire the tree as it grows, so the solution converges toward the optimum as more samples are drawn.

Similarly, the Probabilistic Roadmap (PRM) approach randomly samples free configurations in the space, connecting them to form a graph or roadmap. Queries can then be answered by searching on this roadmap. These methods are particularly effective for complex robotic arms or aerial drones with many degrees of freedom, where classical grid-based methods become intractable.
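The RRT loop described above can be sketched in 2D as follows. This is a bare-bones illustration, not a production planner: `is_free` is a hypothetical caller-supplied collision check, only new nodes (not the edges between nodes) are collision-checked, and the nearest-neighbor search is a linear scan.

```python
import math
import random


def rrt(start, goal, is_free, bounds, step=0.5, goal_tol=0.5, max_iters=5000, seed=0):
    """Basic 2D RRT. Returns a list of (x, y) points from start to near goal, or None.

    is_free(point) -> bool is a caller-supplied collision check.
    bounds = (xmin, xmax, ymin, ymax) defines the sampling region.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}
    for _ in range(max_iters):
        sample = (rng.uniform(bounds[0], bounds[1]), rng.uniform(bounds[2], bounds[3]))
        # Find the tree node nearest to the random sample.
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Steer from the nearest node toward the sample by at most `step`.
        scale = min(step, d) / d
        new = (near[0] + scale * (sample[0] - near[0]),
               near[1] + scale * (sample[1] - near[1]))
        if not is_free(new):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol:
            # Walk parent links back to the root to recover the path.
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


if __name__ == "__main__":
    # One circular obstacle of radius 1.5 centered at (5, 5).
    def is_free(p):
        return math.dist(p, (5.0, 5.0)) > 1.5

    path = rrt((1.0, 1.0), (9.0, 9.0), is_free, bounds=(0.0, 10.0, 0.0, 10.0))
    print("found path with", len(path) if path else 0, "nodes")
```

The resulting path is typically jagged, which is one reason RRT output is usually smoothed or refined by an optimizer before execution.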

[Figure: Conceptual diagram of an RRT search tree. Caption: "RRT rapidly explores a random configuration space to find feasible paths."]

optimization-based planning methods

In an optimization-based approach, the planning problem is recast as minimizing a cost functional that might penalize collisions, large accelerations, or abrupt turning maneuvers, subject to constraints (e.g., vehicle dynamics, non-penetration of obstacles). One typical formulation is:

$$
\min_{\mathbf{x}(t)} \; J\big(\mathbf{x}(t)\big) \quad \text{subject to vehicle dynamics and obstacle-avoidance constraints}
$$

where $\mathbf{x}(t)$ is the parameterization of the vehicle's position over time, and $J$ is a cost function capturing the objectives (smooth driving, collision avoidance). Gradient-based methods can then iteratively improve a candidate solution until a local minimum is found. Although these methods can produce smooth, dynamically feasible trajectories, they may get stuck in local optima, and they require that costs and constraints be at least partially differentiable.
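As a rough illustration of the gradient-based idea, the sketch below discretizes the path into waypoints and runs plain gradient descent on a cost made of a smoothness term plus a soft penalty for entering a circular obstacle. The cost terms, weights, and step size are assumptions chosen for this example; hard constraints are replaced by penalties, and vehicle dynamics are ignored.

```python
import numpy as np


def path_cost(path, obstacle, radius, w_obs=5.0):
    """Smoothness plus soft obstacle penalty for an (N, 2) waypoint array."""
    smooth = np.sum(np.diff(path, axis=0) ** 2)
    dist = np.linalg.norm(path - obstacle, axis=1)
    penetration = np.maximum(0.0, radius - dist)
    return smooth + w_obs * np.sum(penetration ** 2)


def path_gradient(path, obstacle, radius, w_obs=5.0):
    """Analytic gradient of path_cost with respect to every waypoint."""
    grad = np.zeros_like(path)
    seg = np.diff(path, axis=0)           # seg[i] = path[i+1] - path[i]
    grad[:-1] -= 2.0 * seg
    grad[1:] += 2.0 * seg
    offset = path - obstacle
    dist = np.linalg.norm(offset, axis=1)
    penetration = np.maximum(0.0, radius - dist)
    safe_dist = np.maximum(dist, 1e-9)    # avoid division by zero at the center
    grad += (-2.0 * w_obs * penetration / safe_dist)[:, None] * offset
    return grad


def optimize_path(path, obstacle, radius, step=0.02, iters=1000):
    """Gradient descent on the waypoints, keeping start and goal fixed."""
    path = np.array(path, dtype=float)
    for _ in range(iters):
        grad = path_gradient(path, obstacle, radius)
        grad[0] = grad[-1] = 0.0
        path -= step * grad
    return path


if __name__ == "__main__":
    initial = np.linspace([0.0, 0.0], [10.0, 0.0], 21)   # straight line through the obstacle
    obstacle = np.array([5.0, -0.2])
    optimized = optimize_path(initial, obstacle, radius=1.5)
    print("cost before:", path_cost(initial, obstacle, 1.5))
    print("cost after: ", path_cost(optimized, obstacle, 1.5))
```

Which side of the obstacle the path bends around depends entirely on the initial guess, a small-scale example of the local-optimum issue mentioned above.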

ai-driven decision-making for planning

Increasingly, planning is informed not just by explicit search or optimization but also by machine learning modules. For example, inverse reinforcement learning (IRL) can derive a cost function by observing human driving behaviors, thereby capturing subtle preferences such as social etiquette at four-way stops. Imitation learning approaches train a policy that directly outputs control commands given sensor data, effectively learning an end-to-end mapping from perception to actuation. However, pure end-to-end approaches sometimes lack transparency and can require immense amounts of labeled data. Consequently, many real-world systems adopt a hybrid approach, combining carefully designed planning algorithms with data-driven modules for tasks like cost modeling or maneuver classification.

integration of planning with perception and movement prediction

True autonomy demands continuous integration of planning with the outputs of perception and prediction. A planner might reroute if it detects a newly parked truck or re-check feasible motion if a predicted pedestrian path suggests an impending crossing. Tight integration ensures that changes in the environment are propagated into planning decisions in near real-time. For instance, a multi-layer architecture might run a global path planner at a slower rate while a local, model-predictive control (MPC) loop adjusts commands at a higher frequency. This layering enables the system to be reactive and robust, particularly in dynamic, unpredictable contexts such as crowded urban streets.

applications of ai-driven navigation in self-driving cars

overview of autonomous vehicles

Among the most prominent showcases of AI-driven navigation are autonomous vehicles (AVs). Self-driving cars rely on a suite of sensors (LiDAR, radar, cameras), powerful onboard computers, and sophisticated AI algorithms to handle complex traffic situations. The Society of Automotive Engineers (SAE) defines six levels of driving automation, ranging from Level 0 (no automation) through driver-assistance features at Levels 1 and 2 to fully autonomous vehicles at Level 5. At higher levels, the AI navigation system is expected to handle everything from lane keeping and obstacle avoidance to route planning and compliance with local traffic laws.

the role of ai in self-driving cars

AI technologies play a role in virtually every subsystem of a self-driving car. Deep learning-based perception systems detect drivable space, traffic signals, and vulnerable road users. Prediction networks anticipate lane changes or pedestrian crossings. Planning and control modules decide the best trajectory and steering angle, adjusting vehicle speed accordingly. Finally, onboard management systems log sensor data, identify edge cases, and trigger fallback maneuvers when needed.

key components of navigation in autonomous vehicles

perception systems

Self-driving cars fuse data from multiple sensors to create a 3D, real-time representation of the environment. Vision-based algorithms identify road geometry, traffic signals, and objects, while LiDAR-based segmentation pinpoints free space, obstacles, and curb edges. Radar complements these with robust velocity measurements. The output is often stored in dynamic occupancy grids or object lists, ready for use by prediction and planning.

prediction and planning modules

An AV's prediction module forecasts the possible movements of other road users. Next, the planning module uses these forecasts — along with global map data — to choose a safe trajectory that complies with traffic rules. Because city driving is highly dynamic, planning modules must continuously update their trajectories as new sensor data arrives.

[Figure: Visualization of a self-driving car's pipeline. Caption: "Typical architecture: from sensors to perception, prediction, and planning."]

real-world examples of ai in self-driving car navigation

- Waymo: Early pioneer in LiDAR-centric approaches, featuring advanced sensor fusion and an extensive system for storing HD maps of cities.

- Tesla: Emphasizes camera-based perception and neural networks running on specialized hardware. Over-the-air updates and data collection from a large fleet have enabled rapid iteration.

- Uber ATG / Aurora: Combined LiDAR, radar, and cameras with advanced motion prediction and planning. They contributed open-source libraries for simulation and large-scale data management.

- GM Cruise: Focuses on fully driverless taxi services in urban areas, employing real-time SLAM for localization and robust trajectory planning.

ethical and legal challenges in ai navigation for self-driving cars

AI-driven navigation in self-driving cars also faces regulatory and ethical quandaries. Determining liability in accidents involving AI decisions, ensuring transparency in how the system prioritizes safety vs. speed, and dealing with privacy concerns around massive sensor data collection are just a few examples. There is also the question of moral and social acceptance. Some scenarios involve ethical choices about risk distribution: how does an AV weigh the safety of its passengers against that of pedestrians? Although these issues extend beyond the scope of purely technical considerations, they shape how AI-driven navigation systems are designed, tested, and deployed.

types of algorithms used in ai-driven navigation

classical algorithms

rule-based systems

In the early days of AI-driven navigation, many systems relied primarily on rule-based logic. Such systems might encode a set of if-then statements that define how the agent should respond to specific environmental cues (e.g., if the lane is blocked, then merge left). While straightforward to implement and interpret, rule-based approaches become brittle and unmanageable in complex or unpredictable conditions, because the number of rules and rule interactions needed to cover every combination of conditions grows very quickly.

heuristic-based approaches

Heuristic methods like potential fields or cost maps have also been popular for certain navigation tasks. A potential field approach assigns each obstacle a repulsive potential and the goal an attractive potential, with the agent "descending" the combined field toward the goal. This technique is conceptually simple but can suffer from local minima and fails to account properly for dynamic constraints in many real-world scenarios.
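A minimal sketch of the potential field idea follows. It assumes a quadratic attractive potential around the goal and a common inverse-distance repulsive potential that is active only within an influence radius of each obstacle; the gains, radius, and fixed-length step rule are illustrative choices, not values from any particular system.

```python
import numpy as np


def potential_gradient(pos, goal, obstacles, k_att=1.0, k_rep=1.0, influence=2.0):
    """Gradient of the combined attractive + repulsive potential at pos."""
    # Attractive term: U_att = 0.5 * k_att * ||pos - goal||^2
    grad = k_att * (pos - goal)
    for obs in obstacles:
        offset = pos - obs
        d = np.linalg.norm(offset)
        if 1e-9 < d < influence:
            # Repulsive term: U_rep = 0.5 * k_rep * (1/d - 1/influence)^2
            grad += -k_rep * (1.0 / d - 1.0 / influence) * offset / d**3
    return grad


def follow_field(start, goal, obstacles, step=0.05, max_steps=2000, tol=0.1):
    """Descend the potential field with fixed-length steps; returns the visited path."""
    pos = np.array(start, dtype=float)
    goal = np.array(goal, dtype=float)
    obstacles = [np.array(o, dtype=float) for o in obstacles]
    path = [pos.copy()]
    for _ in range(max_steps):
        if np.linalg.norm(pos - goal) < tol:
            break
        grad = potential_gradient(pos, goal, obstacles)
        # Normalize so the agent moves a fixed distance per iteration.
        pos = pos - step * grad / max(np.linalg.norm(grad), 1e-9)
        path.append(pos.copy())
    return np.array(path)


if __name__ == "__main__":
    path = follow_field((0.0, 0.0), (5.0, 5.0), obstacles=[(2.5, 2.0)])
    print("steps taken:", len(path) - 1)
    print("final distance to goal:", np.linalg.norm(path[-1] - np.array([5.0, 5.0])))
```

If an obstacle sits directly between the start and the goal, the attractive and repulsive gradients can cancel and the agent stalls; that is the local-minimum problem described above, and this sketch makes no attempt to escape it.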

machine learning algorithms

supervised learning in navigation

Many tasks in AI navigation — from object detection to lane classification or traffic light recognition — leverage supervised learning. In navigation pipelines, supervised techniques can help classify road types, identify drivable areas, or estimate object bounding boxes in sensor data. However, supervised learning requires large labeled datasets, and it generally does not learn how to make decisions over time unless combined with sequential modeling techniques.

unsupervised learning for clustering navigation paths

Unsupervised learning can cluster typical driving patterns, identify frequent routes, or discover anomalies. For instance, if an AV records a region of the environment where many drivers slow down unexpectedly, clustering can reveal this pattern without explicit labeling. Clustering might also help detect out-of-distribution behaviors in other vehicles or identify new drivable areas in an unstructured environment.

reinforcement learning for dynamic environments

Reinforcement learning (RL) has gained traction for decision-making in continuous, dynamic environments. The agent learns a policy (a mapping from states to actions) by maximizing a cumulative reward signal that encodes driving objectives (e.g., safety, comfort, speed). While RL can, in principle, discover complex driving policies, in practice it can be difficult to ensure reliability and interpretability in safety-critical applications. Nevertheless, more advanced approaches help: hierarchical RL decomposes driving into simpler sub-tasks, and safe RL incorporates explicit constraints (e.g., never exceed a certain crash probability), making them more suitable for real-world deployment.
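In standard notation (assumed here, not taken from the text above), with states $s_t$, actions $a_t$, reward $r$, and discount factor $\gamma \in [0, 1)$, the objective of the policy $\pi$ can be written as:

```latex
J(\pi) = \mathbb{E}_{\pi}\!\left[\sum_{t=0}^{\infty} \gamma^{t}\, r(s_t, a_t)\right],
\qquad
\pi^{*} = \arg\max_{\pi} J(\pi)
```

One common way to express a safety constraint is the constrained-MDP form, where a separate cost signal $c$ (for example, an indicator of a collision) must stay below a budget $d$:

```latex
\max_{\pi} \; J(\pi)
\quad \text{subject to} \quad
\mathbb{E}_{\pi}\!\left[\sum_{t=0}^{\infty} \gamma^{t}\, c(s_t, a_t)\right] \le d
```

Other safe RL formulations exist; this one is shown only because it maps directly onto the "never exceed a certain crash probability" example.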

deep learning algorithms

convolutional neural networks (cnn) for visual perception

Convolutional neural networks are widely adopted in the perception module of AI-driven navigation systems, excelling in 2D image tasks like object detection (SSD, YOLO), semantic segmentation (U-Net, SegNet), and instance segmentation (Mask R-CNN). CNN-based methods can also be extended to 3D data — e.g., 3D CNNs for LiDAR voxel grids or range images. The power of CNNs in extracting hierarchical features from raw pixels helps the agent interpret complex scenes with high accuracy.

recurrent neural networks (rnn) for sequential decision-making

RNNs (particularly LSTM and GRU variants) are well-suited for capturing temporal dependencies in sensor or state data. For example, an RNN-based policy in a self-driving car might continuously process a stream of environmental observations and output steering and acceleration commands that account for the short-term history of the environment. In some advanced systems, RNN-based models are used in the prediction module to forecast the motion of surrounding agents, while a separate planning module incorporates these forecasts into trajectory generation.

hybrid approaches combining multiple methods

A major trend is the development of hybrid approaches that fuse classical search or optimization with machine learning sub-modules. For example, an AI-driven system might leverage RRT to generate a broad set of candidate trajectories and then apply a learned cost function — trained via inverse reinforcement learning — to rank and select the best trajectory. This mixture of classical algorithms (which provide guarantees about feasibility and safety) and learned functions (which incorporate data-driven insights) often outperforms purely classical or purely learned approaches.

Below is a simplified Python code snippet to illustrate how a learned cost function might be applied to rank candidate paths from an RRT planner:

```python
import numpy as np


def learned_cost_function(path, model):
    """
    Evaluate the cost of a given path using a trained ML model.

    path: List of waypoints (x, y)
    model: Trained machine learning model for cost prediction
           (any object with a scikit-learn-style predict method)
    Returns: float
    """
    # Convert path to a feature vector (example: flatten x, y).
    # Flattening assumes every candidate path has the same number of waypoints.
    features = np.array(path).flatten()
    cost = model.predict(features.reshape(1, -1))
    return cost[0]


def select_best_path(candidate_paths, model):
    """Return the candidate path with the lowest predicted cost (None if empty)."""
    best_path = None
    best_cost = float('inf')
    for path in candidate_paths:
        cost = learned_cost_function(path, model)
        if cost < best_cost:
            best_cost = cost
            best_path = path
    return best_path


# Example usage:
# candidate_paths = rrt_generate_paths(start, goal, obstacles)  # hypothetical RRT planner
# best = select_best_path(candidate_paths, trained_ml_model)
# print("Chosen path:", best)
```

While this snippet only illustrates an abstract workflow, in real systems the model might consider safety metrics, comfort metrics, traffic rules, and predictions of other agents' motions, which can be encoded as a high-dimensional feature vector. This example highlights how traditional path search (RRT) merges with a machine learning-based cost evaluation to produce a more robust navigation pipeline.
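As a rough illustration of such a feature vector, the sketch below maps a path of any length to three fixed features: total length, minimum waypoint clearance (a crude safety proxy), and accumulated turning angle (a crude comfort proxy). `LinearCostModel` and its hand-picked weights are hypothetical stand-ins for a trained model, and the two candidate paths are made up for the example.

```python
import math


def path_features(path, obstacles):
    """Map a variable-length path to a fixed-length feature list."""
    segments = list(zip(path, path[1:]))
    # Total Euclidean length of the polyline.
    length = sum(math.dist(a, b) for a, b in segments)
    # Smallest waypoint-to-obstacle distance (checks waypoints only, not segments).
    clearance = min(math.dist(p, o) for p in path for o in obstacles)
    # Sum of absolute heading changes between consecutive segments.
    headings = [math.atan2(b[1] - a[1], b[0] - a[0]) for a, b in segments]
    turning = 0.0
    for h0, h1 in zip(headings, headings[1:]):
        delta = h1 - h0
        turning += abs(math.atan2(math.sin(delta), math.cos(delta)))  # wrap to [-pi, pi]
    return [length, clearance, turning]


class LinearCostModel:
    """Hypothetical stand-in for a trained cost model; the weights are hand-picked."""

    def __init__(self, weights):
        self.weights = weights

    def predict(self, features):
        return sum(w * f for w, f in zip(self.weights, features))


obstacles = [(1.0, 1.0)]
candidates = {
    "direct": [(0.0, 0.0), (1.0, 0.9), (2.0, 2.0)],  # short, but grazes the obstacle
    "detour": [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0)],  # longer, keeps its distance
}

# Penalise length and turning, reward clearance.
model = LinearCostModel(weights=[1.0, -5.0, 0.5])
costs = {name: model.predict(path_features(p, obstacles)) for name, p in candidates.items()}
best = min(costs, key=costs.get)
print(costs, "->", best)
```

With these weights the longer detour wins because the clearance term dominates. Changing the weights changes the ranking, and that trade-off is what a learned cost function is meant to capture from data.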

emerging trends and future directions

AI advancements driving next-generation navigation

As AI continues to evolve, it opens new horizons for navigation. Techniques like Transformers — which have revolutionized natural language processing — are being repurposed for trajectory prediction and spatiotemporal reasoning in large-scale road networks. Self-supervised learning approaches provide ways to leverage vast amounts of unlabeled sensor data, improving perception without costly annotation. Meanwhile, foundation models for multimodal data are starting to appear, bridging vision, language, and LiDAR signals in a unified representation that potentially leads to more robust and generalizable navigation.
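The core operation that Transformers bring to trajectory prediction is attention over timesteps. The sketch below shows scaled dot-product self-attention on a short track of agent states. It is deliberately stripped down: a single head, identity query/key/value projections instead of learned ones, and no positional encoding. The state layout `[x, y, vx, vy]` is an assumption for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Similarity of this timestep to every timestep, scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all timesteps.
        outputs.append([sum(w_j * v[i] for w_j, v in zip(w, tokens)) for i in range(d)])
    return outputs, weights


# Hypothetical track of one agent: [x, y, vx, vy] at three consecutive timesteps.
track = [
    [0.0, 0.0, 1.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [2.0, 0.1, 1.0, 0.1],
]
outputs, weights = self_attention(track)
for t, w in enumerate(weights):
    print(f"timestep {t} attends with weights {[round(x, 3) for x in w]}")
```

Each output row mixes information from the whole history, weighted by similarity. A trained model would learn the projections so that the mixing focuses on the timesteps most relevant to the forecast.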

role of 5G and IoT in navigation systems

5G networks, with their high bandwidth and low latency, are poised to revolutionize connectivity in AI-driven navigation. Real-time V2X (vehicle-to-everything) communication enables cars to exchange sensor data, share local maps, and coordinate maneuvers. This collaborative paradigm might help reduce collisions at blind intersections, where direct line-of-sight is not possible. In addition, the Internet of Things (IoT) extends connectivity to infrastructure sensors, such as smart traffic lights or roadside cameras, which can feed autonomous agents with valuable context (e.g., pedestrian flow data, road closures, or upcoming hazards).

autonomous drones and AI-driven navigation

Beyond ground vehicles, autonomous drones are another frontier for AI-driven navigation. Drones typically operate in 3D space with more degrees of freedom, necessitating advanced planning techniques like RRT* or MPC-based trajectory optimization. They also rely heavily on onboard sensors, such as cameras or depth sensors, for real-time obstacle avoidance. Research in drone navigation places special emphasis on hardware constraints, such as limited payload capacity for sensors and processors, requiring ultra-lightweight, efficient AI algorithms. Drone fleets can also coordinate in multi-agent contexts, collectively mapping unknown environments or delivering goods in large-scale logistic operations.

collaborative navigation and multi-agent systems

Multi-agent navigation is a rapidly maturing field. In many scenarios, multiple robots or vehicles operate in the same space and must coordinate to avoid collisions and optimize group objectives (like coverage or throughput). This can be approached using distributed algorithms, game-theoretic methods, or multi-agent reinforcement learning (MARL). A typical challenge is scaling to large numbers of agents while maintaining stable, coordinated behavior, as well as handling communication constraints and partial observability. Some advanced work (e.g., in ICML 2022) introduced graph-based MARL frameworks that handle thousands of interacting agents, demonstrating potential applications in traffic management, swarm robotics, and large-scale automated warehouses.
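A basic building block for coordinated collision avoidance is predicting which pairs of agents will come too close. The sketch below does this with a closest-point-of-approach test under a constant-velocity assumption. It is not MARL or a game-theoretic method, only the geometric check such methods often build on, and the agent names, safety radius, and horizon are made up for the example.

```python
import math
from itertools import combinations


def closest_approach(p1, v1, p2, v2, horizon):
    """Time in [0, horizon] and distance at which two constant-velocity agents are closest."""
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]  # relative position
    vx, vy = v2[0] - v1[0], v2[1] - v1[1]  # relative velocity
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        t = 0.0  # the gap never changes
    else:
        # Unconstrained minimiser of |r + v*t|, clamped to the planning horizon.
        t = min(horizon, max(0.0, -(rx * vx + ry * vy) / speed_sq))
    return t, math.hypot(rx + vx * t, ry + vy * t)


def find_conflicts(agents, safety_radius, horizon):
    """Return (name_a, name_b, time, distance) for every pair predicted to get too close."""
    conflicts = []
    for (name_a, (pa, va)), (name_b, (pb, vb)) in combinations(agents.items(), 2):
        t, d = closest_approach(pa, va, pb, vb, horizon)
        if d < safety_radius:
            conflicts.append((name_a, name_b, t, d))
    return conflicts


# Hypothetical agents: name -> (position, velocity)
agents = {
    "robot_a": ((0.0, 0.0), (1.0, 0.0)),
    "robot_b": ((4.0, -4.0), (0.0, 1.0)),
    "robot_c": ((0.0, 5.0), (1.0, 0.0)),
}
conflicts = find_conflicts(agents, safety_radius=0.5, horizon=10.0)
print(conflicts)
```

The pairwise loop is quadratic in the number of agents, which hints at the scaling challenge mentioned above. Large fleets typically restrict the check to spatial neighbors.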

challenges and opportunities in AI-driven navigation

Despite the breathtaking progress, numerous challenges remain:

- Safety and reliability: Guaranteeing that an AI-driven navigation system behaves reliably in all corner cases is an ongoing concern. Methods like formal verification, scenario testing, and simulation are being actively developed and integrated.

- Explainability: Deep learning methods can be opaque. Regulators and stakeholders often demand interpretability in high-stakes domains, so bridging the black box of neural networks with transparent reasoning is essential.

- Scalability: Many advanced solutions excel in controlled environments or small-scale demos. Scaling them to city-wide or country-wide deployments introduces issues of throughput, cost, and infrastructure readiness.

- Data privacy and ethics: A system that continuously records sensor data can inadvertently capture personal information, raising privacy concerns. Meanwhile, ethical frameworks for AI-driven navigation are still evolving.

- Regulatory frameworks: Different regions adopt different standards for autonomous vehicles, drones, or robotics. Harmonizing these regulations is critical for global commercial deployment.

Yet these very challenges present opportunities for groundbreaking research and real-world impact. Researchers continue to push the boundaries, investigating new sensor modalities, more powerful predictive models, adaptive planning algorithms that incorporate nuanced risk preferences, and synergy with next-generation connectivity. By weaving together insights from classical robotics, control theory, optimization, and advanced machine learning, AI-driven navigation will likely remain one of the most vibrant and impactful areas in data science and machine learning for years to come.

[Image: Drone swarm in a warehouse environment. Caption: "Multi-agent or swarm-based navigation is a new frontier, leveraging advanced AI coordination."]

Below is a further illustrative Python code snippet. It shows a simplified version of a state estimation plus trajectory planning pipeline that might be used in a small-scale navigation robot. Note that in practice, each step would be significantly more complex, and sensor data might arrive asynchronously.

```python
import numpy as np


class KalmanFilter:
    def __init__(self, A, B, H, Q, R):
        """
        A: State transition matrix
        B: Control input matrix
        H: Observation matrix
        Q: Process noise covariance
        R: Measurement noise covariance
        """
        self.A = A
        self.B = B
        self.H = H
        self.Q = Q
        self.R = R
        self.x = None  # state estimate
        self.P = None  # state covariance

    def initialize(self, x0, P0):
        self.x = x0
        self.P = P0

    def predict(self, u):
        # x(k+1) = A*x(k) + B*u(k)
        self.x = self.A @ self.x + self.B @ u
        # P(k+1) = A*P(k)*A^T + Q
        self.P = self.A @ self.P @ self.A.T + self.Q

    def update(self, z):
        # Innovation: y = z - H*x
        y = z - self.H @ self.x
        # Innovation covariance: S = H*P*H^T + R
        S = self.H @ self.P @ self.H.T + self.R
        # Kalman gain: K = P*H^T*S^-1
        K = self.P @ self.H.T @ np.linalg.inv(S)
        # x = x + K*y
        self.x = self.x + K @ y
        # P = (I - K*H)*P
        I = np.eye(self.P.shape[0])
        self.P = (I - K @ self.H) @ self.P


def plan_trajectory(current_state, goal_state, obstacles):
    """
    Very simplified trajectory planning in 2D.

    obstacles: list of obstacle positions
    Returns: list of waypoints from current_state to goal_state
    """
    # This is a naive approach: we just move in a straight line.
    # Real-world planning would be more complex, using RRT, A*, etc.
    path = []
    steps = 50
    dx = (goal_state[0] - current_state[0]) / steps
    dy = (goal_state[1] - current_state[1]) / steps
    # steps + 1 waypoints so that the last one coincides with the goal
    for i in range(steps + 1):
        x = current_state[0] + dx * i
        y = current_state[1] + dy * i
        # Collision check (placeholder only: the waypoint is kept regardless)
        for obs in obstacles:
            if np.linalg.norm(np.array([x, y]) - np.array(obs)) < 0.2:
                # If too close, you would normally replan
                pass
        path.append((x, y))
    return path


# Example usage:
# Initialize a 4D state: [pos_x, pos_y, vel_x, vel_y] (time step of 1)
A = np.array([[1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0],
              [0, 0, 0, 1]])
B = np.eye(4)
H = np.eye(4)
Q = 0.01 * np.eye(4)
R = 0.05 * np.eye(4)

kf = KalmanFilter(A, B, H, Q, R)
kf.initialize(x0=np.array([0., 0., 0., 0.]), P0=0.1 * np.eye(4))

# Suppose we get a measurement from sensors
measurement = np.array([0.05, 0.02, 0.0, 0.0])
control_input = np.array([0., 0., 0.1, 0.1])

kf.predict(control_input)
kf.update(measurement)

# Plan a naive trajectory
goal_state = np.array([2.0, 2.0])
obstacles = [(1.0, 1.0)]
trajectory = plan_trajectory(kf.x[:2], goal_state, obstacles)
print("Planned trajectory:", trajectory[:5], "... (truncated)")
```

This example, albeit simplistic, highlights how state estimation and naive planning can be integrated. In more advanced AI-driven navigation systems, the planning block would be replaced with a proper motion planner, such as a sampling-based planner augmented by learned cost functions, and the Kalman filter might be replaced (or supplemented) by more general Bayesian filtering or sensor fusion frameworks capable of handling multiple sensor modalities.
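To give a feel for how a Kalman-style update fuses several sensors, here is a minimal scalar sketch (state and measurement are both a single number, so the observation matrix is 1). Each reading is folded in sequentially, weighted by its variance. The sensor names, readings, and variances are invented for illustration.

```python
def kalman_update(mean, var, z, meas_var):
    """Scalar Kalman measurement update with observation matrix H = 1."""
    gain = var / (var + meas_var)       # trust in the measurement vs. the prior
    new_mean = mean + gain * (z - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


# Prior belief about the robot's position along a corridor (metres).
mean, var = 0.0, 1.0

# Hypothetical readings: (sensor name, measured position, measurement variance)
readings = [
    ("wheel_odometry", 1.2, 0.5),  # noisy
    ("lidar_range", 1.0, 0.1),     # more precise
]

for name, z, meas_var in readings:
    mean, var = kalman_update(mean, var, z, meas_var)
    print(f"after {name}: mean={mean:.4f}, var={var:.4f}")
```

The fused estimate ends up closest to the more precise sensor, and the variance shrinks with every reading. For independent Gaussian measurements, the result does not depend on the order in which the readings are applied.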

I hope this comprehensive exploration of AI-driven navigation elucidates the intricate interplay between perception, prediction, and planning, and how these pillars converge into robust, real-time systems. As research continues to advance, I anticipate ever more capable and intelligent navigation solutions, from self-driving cars to fleets of coordinated drones, all the way to complex robots operating autonomously in unstructured, human-inhabited spaces. The future indeed looks bright for AI-based navigation.